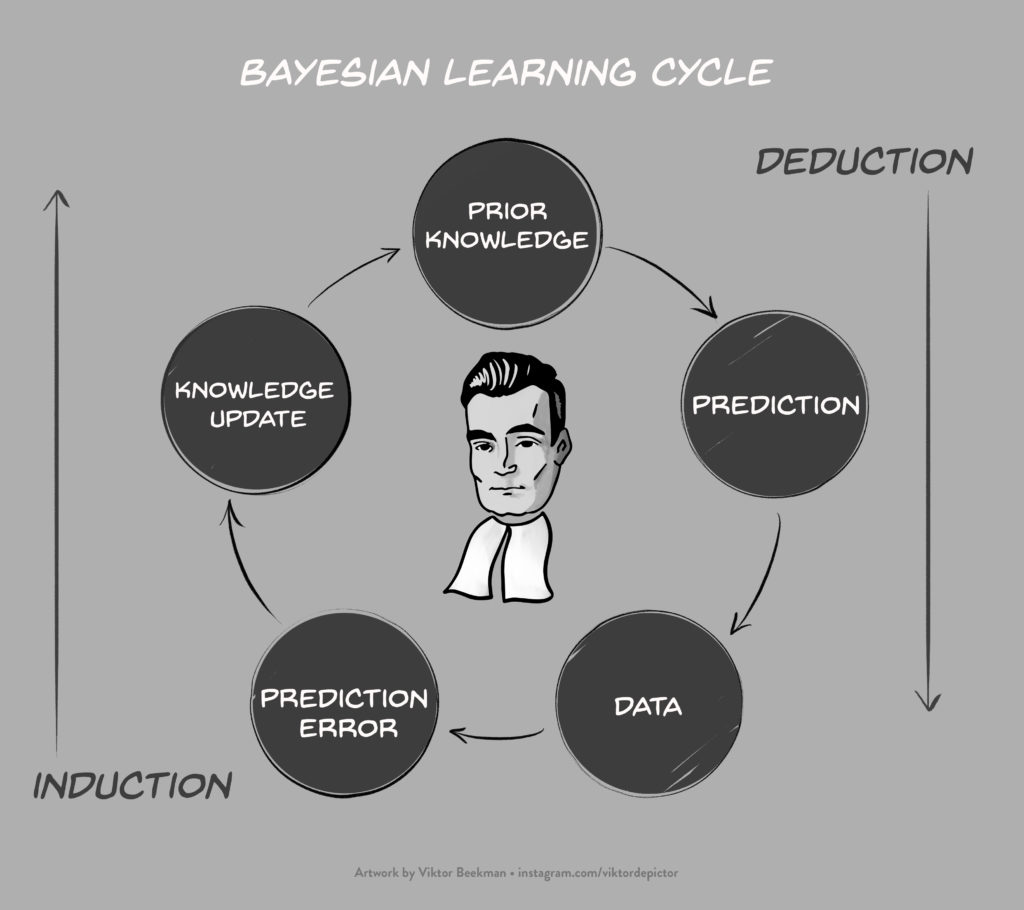

A Bayesian Perspective on the Proposed FDA Guidelines for Adaptive Clinical Trials

The frequentist Food and Drug Administration (FDA) has circulated a draft version of new guidelines for adaptive designs, with the explicit purpose of soliciting comments. The draft is titled “Adaptive designs for clinical trials of drugs and biologics: Guidance for industry” and you can find it here. As summarized on the FDA webpage, this draft document …
