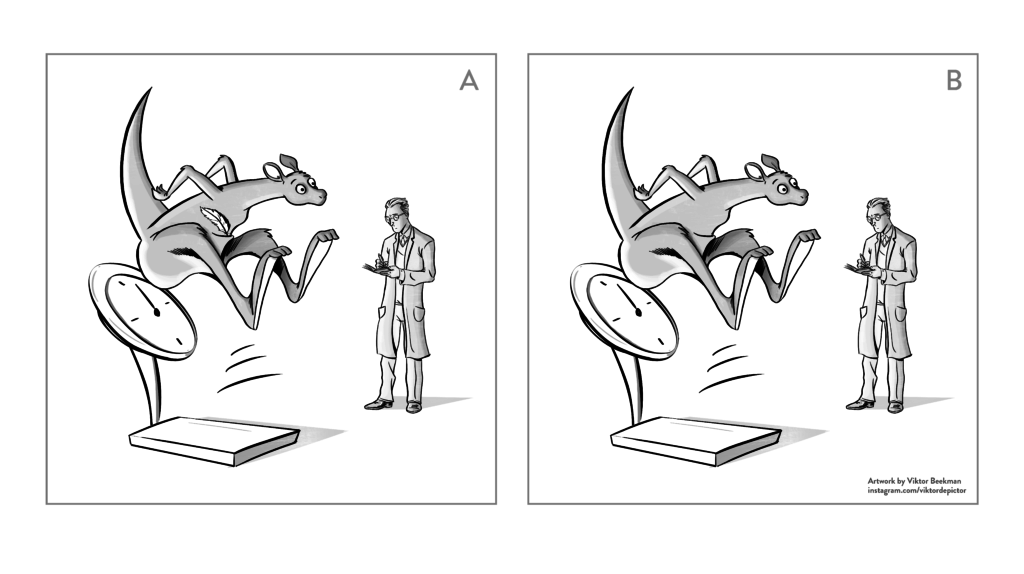

Redefine Statistical Significance Part IX: Gelman and Robert Join the Fray, But Are Quickly Chased by Two Kangaroos

Andrew Gelman and Christian Robert are two of the most opinionated and influential statisticians in the world today. Fear and anguish strike the hearts of the luckless researchers who find the fruits of their labor discussed on the pages of the duo’s blogs: how many fatal mistakes will be uncovered, how many flawed arguments will be exposed? Personally, we…
