The paper Redefine Statistical Significance reveals an inconvenient truth: p-values near .05 are evidentially weak. Such p-values should not be used “for sanctification, for the preservation of conclusions from all criticism, for the granting of an imprimatur” (Tukey, 1962, p. 13; NB: Tukey was referring to statistical procedures in general, not to p-values or p-just-below-.05 results specifically).

Unfortunately, in the current academic environment, a p<.05 result is meant to accomplish exactly this: sanctification. After all, as a field, we have agreed that p-values below .05 are “significant”, and that in such cases “the null hypothesis can be rejected”. How rude then, how inappropriate, that some critics still wish to dispute the findings! Do they think that they are above the law?

One of us (EJ) is reminded of a student who had conducted an experiment on precognition — just before picking up the phone, participants had to guess who was on the other end of the line. Identification accuracy was significantly above chance. When I told the student that I still didn’t buy it, she responded with indignation. The p<.05 law is the p<.05 law, after all.

There will be people who argue that a p-just-below-.05 result is generally discussed with the appropriate caution and modesty. To these people, we respectfully say: “what on earth have you been smoking?” Or, more politely, we might say: “kindly peruse the literature and tally the cases where a p-just-below-.05 result is discussed with caution and modesty”. The problem is exacerbated by the habit of reporting results as “p<.05”, a habit that becomes more attractive when the obtained p-values are close to .05. Who is to blame for this sad state of affairs? Perhaps researchers, like other human beings, are allergic to uncertainty; perhaps researchers are uncomfortable with statistics and prefer to rely on simple rules; perhaps researchers act this way to survive in a highly competitive academic climate. Regardless, there is an implicit social contract that p-just-below-.05 results ought not to be questioned. This harms science.

The strength of the argument in Redefine Statistical Significance is that it emphatically does not discuss plausible Bayes factors. Crucially, the paper discusses upper bounds on Bayes factors. The upper bounds are generally not plausible and can be obtained only by the most mischievous, ill-natured, and obvious forms of statistical cheating. You can bend those parameter priors like Beckham, but no Bayesian analysis will provide convincing evidence against the null hypothesis for a p-just-below-.05 result. What this means is that the null hypothesis is not convincingly outpredicted by the alternative hypothesis, regardless of the specific parameter priors with which H1 is adorned, and in such cases it is imprudent to issue the all-or-none decision “reject the null hypothesis”.
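To get a feel for how low such upper bounds are, here is a minimal sketch that uses one well-known bound, 1 / (-e · p · ln p), often called the Vovk-Sellke maximum p-ratio (Sellke, Bayarri, & Berger, 2001). Choosing this particular bound is our assumption for illustration; the function name `max_bayes_factor` is hypothetical, and other bounds give somewhat different numbers.

```python
import math


def max_bayes_factor(p: float) -> float:
    """Upper bound on the Bayes factor for H1 over H0, given a p-value.

    Uses the bound 1 / (-e * p * ln p), which holds only for p < 1/e.
    This is an illustrative choice of bound, not the only one available.
    """
    if not 0.0 < p < 1.0 / math.e:
        raise ValueError("the bound is defined only for 0 < p < 1/e")
    return 1.0 / (-math.e * p * math.log(p))


if __name__ == "__main__":
    for p in (0.05, 0.01, 0.005):
        bound = max_bayes_factor(p)
        print(f"p = {p}: Bayes factor for H1 is at most {bound:.2f}")
```

Under this bound, p = .05 caps the Bayes factor at roughly 2.5 in favor of H1, whereas p = .005 allows for a value of almost 14. Keep in mind that these are ceilings: a realistic prior will yield less evidence than the bound suggests.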

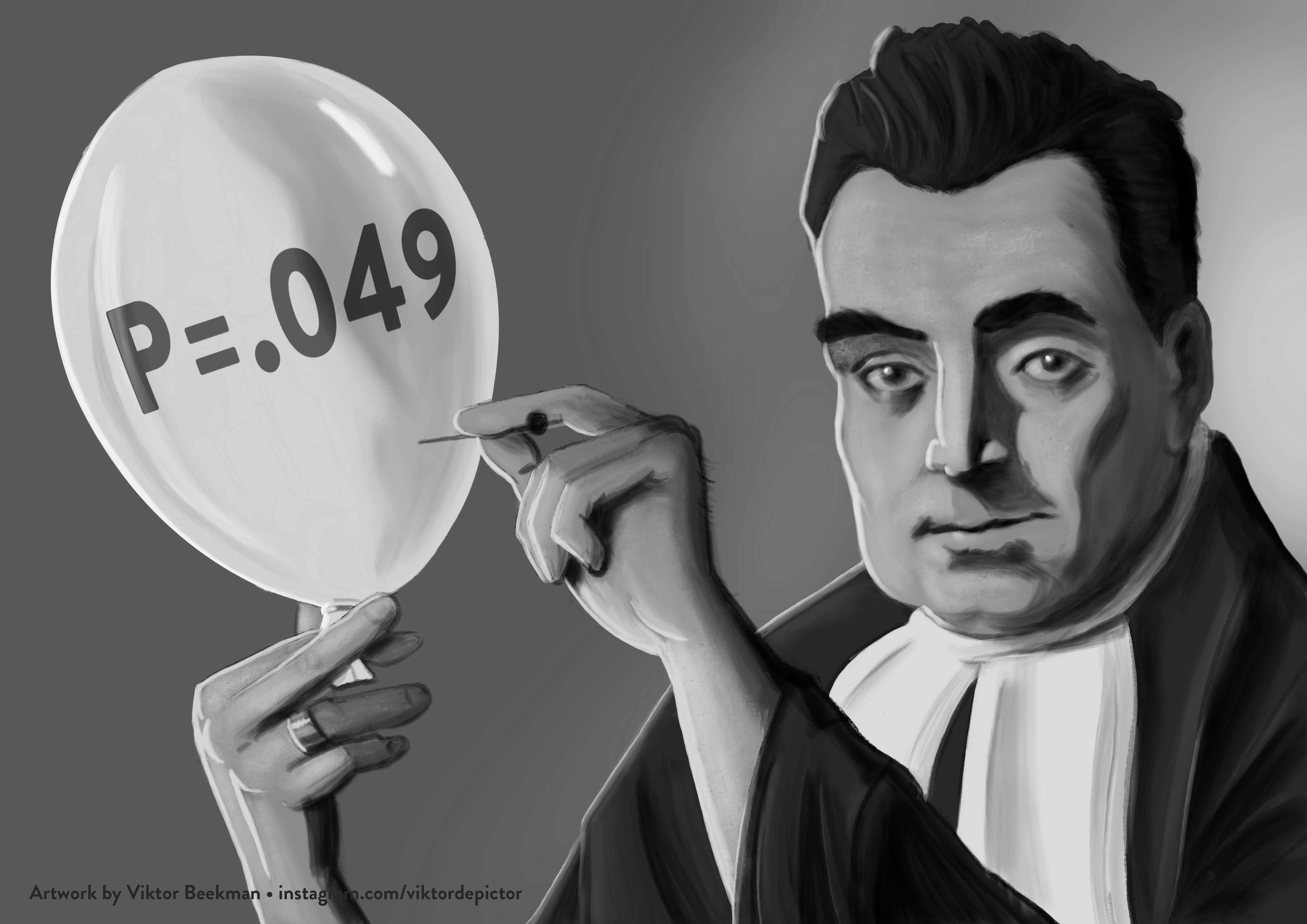

The root of the p-value problem is that the p-value considers only what can be expected under the null hypothesis. Data (or more extreme cases) may be unlikely under H0, but what if these data happen to be just as unlikely under H1? Any analysis that sweeps this under the rug is incomplete at best. The root of the problem, therefore, is not necessarily a Bayesian one, the picture of Thomas Bayes bursting the p=.049 balloon notwithstanding. Within the p-value paradigm, one might ask “How diagnostic is p=.049? How much more likely is p=.049 to occur under H1 than under H0?” The answer to this question is consistent with the Bayesian analyses discussed in the previous blog posts. A cartoon that explains the diagnosticity of the p-value is available on the JASP blog.
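The diagnosticity question can be made concrete with a small sketch. We assume, purely for illustration, a two-sided z-test with known variance in which the test statistic is distributed as N(delta, 1) under H1, where `delta` is the true effect expressed in standard-error units. Under H0 the p-value is uniformly distributed, so the density of the p-value under H1 is itself the likelihood ratio. The function name `p_value_likelihood_ratio` and the values of `delta` are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution, mean 0 and sd 1


def p_value_likelihood_ratio(p: float, delta: float) -> float:
    """How much more likely is a two-sided p-value under H1 than under H0?

    Assumes a z-test whose statistic is N(delta, 1) under H1 and N(0, 1)
    under H0. The uniform density of p under H0 equals 1, so the returned
    value is the density of p under H1.
    """
    z = STD_NORMAL.inv_cdf(1.0 - p / 2.0)  # |z| that yields this p-value
    under_h1 = STD_NORMAL.pdf(z - delta) + STD_NORMAL.pdf(z + delta)
    under_h0 = 2.0 * STD_NORMAL.pdf(z)
    return under_h1 / under_h0


if __name__ == "__main__":
    for delta in (0.0, 1.0, 2.0, 3.0, 4.0, 5.0):
        ratio = p_value_likelihood_ratio(0.049, delta)
        print(f"delta = {delta:.0f}: p = .049 is {ratio:.2f} times as likely under H1")
```

In this setup the ratio peaks at roughly 3.5 when `delta` is close to 2, that is, when H1 happens to place the effect almost exactly where the data landed. For large effects (high power) the ratio drops below 1, which means that p=.049 is then more likely under H0 than under H1.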

In sum, p-just-below-.05 results are evidentially weak. We understand that some find this message unwelcome. And we agree: it would be much nicer if these kinds of p-values gave compelling evidence against the null. Unfortunately this is simply not reality. Perhaps you find yourself like Neo, freshly extracted from the Matrix. Once you have looked out over the earthly devastation you were previously unaware of, going back to a state of blissful ignorance is not an option.

References

Tukey, J. W. (1962). The future of data analysis. The Annals of Mathematical Statistics, 33, 1-67.

About The Authors

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.

Quentin Gronau

Quentin is a PhD candidate at the Psychological Methods Group of the University of Amsterdam.