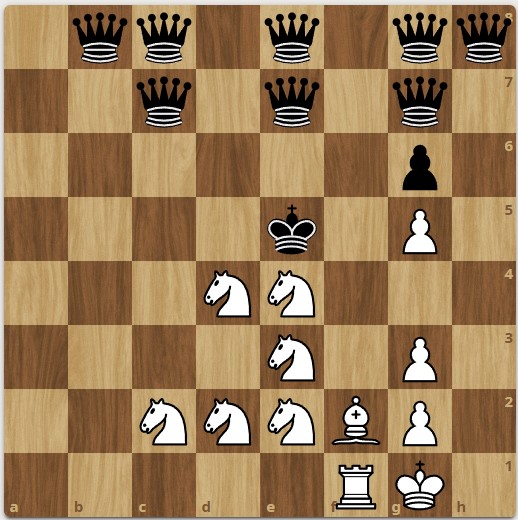

Two Grotesque-esque Chess Problems

WARNING: This post is about chess. If you don’t play chess, you might want to skip this post. During a week-long family vacation I engaged obsessively in both the highest and the lowest forms of human intellectual activity. Obviously the highest form is chess endgame study composition; the lowest form is surely online “bullet” chess. Miraculously, my bullet chess adventures…
