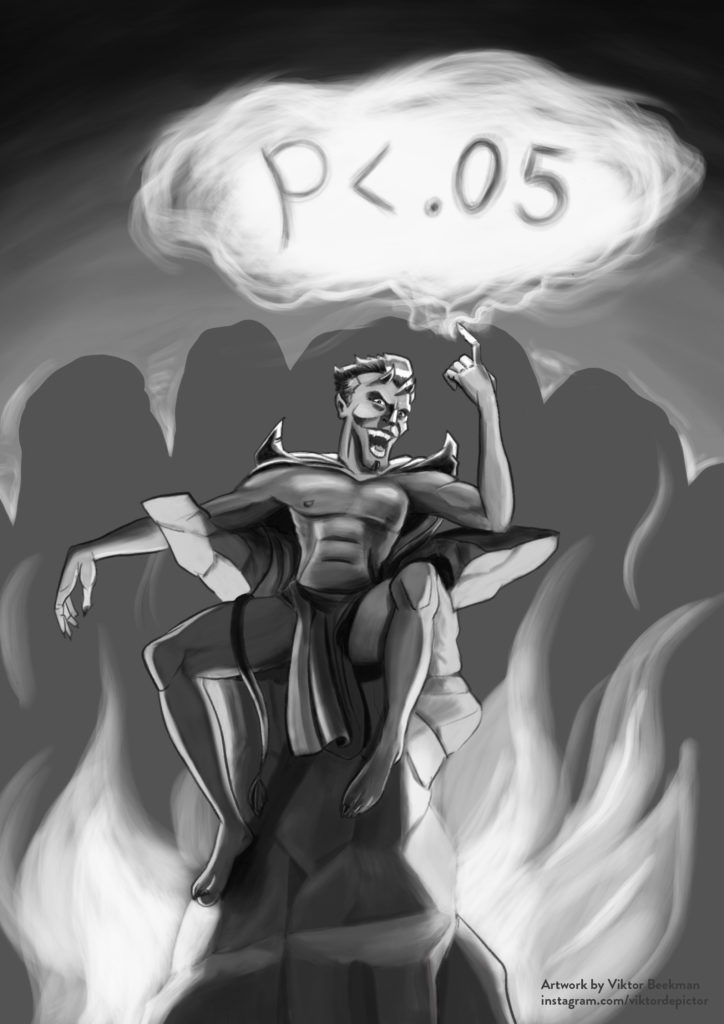

Redefine Statistical Significance Part IV: A Second Demonstration

In the two previous posts on the paper “Redefine Statistical Significance”, we reanalyzed Experiment 1 from “Red, Rank, and Romance in Women Viewing Men” (Elliot et al., 2010). Female undergrads rated the attractiveness of a single male from a black-and-white photo. Ten women saw the photo on a red background, and eleven saw the photo on a white background. The…
