Statisticians have worried about the evidential impact of p-values for over 60 years. Again and again, they reported that p-values slightly lower than .05 provide only weak evidence against the null hypothesis (e.g., Edwards, Lindman, & Savage, 1963; Berger & Delampady, 1987; Sellke, Bayarri, & Berger, 2001; Johnson, 2013). Last year, the American Statistical Association (ASA) issued a statement on p-values that confirmed their claim: “a p-value near 0.05 taken by itself offers only weak evidence against the null hypothesis” (Wasserstein & Lazar, 2016, p. 132).

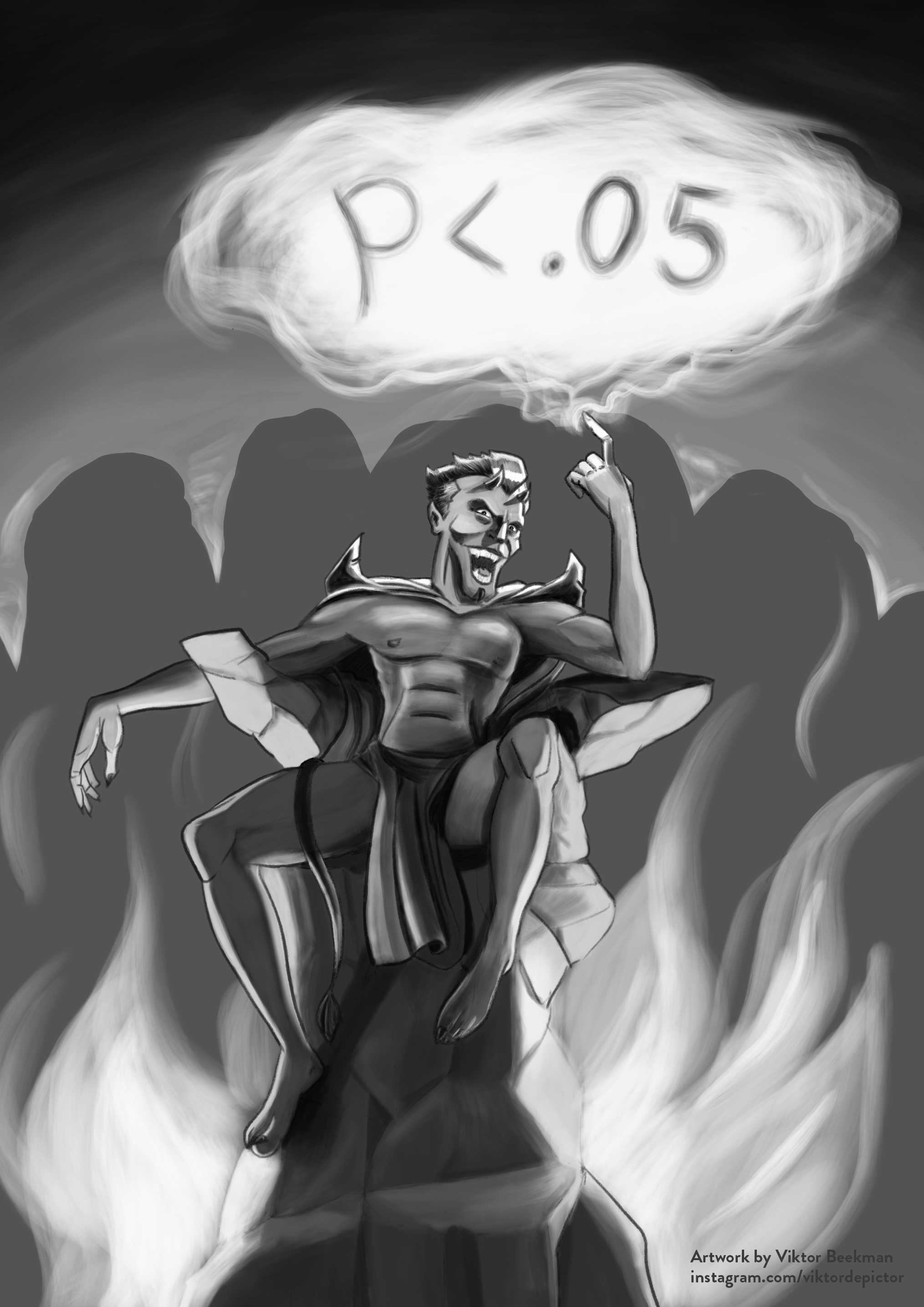

This is a BIG deal. Most empirical fields routinely apply a threshold level of .05 as the standard cutoff for asserting the presence of an effect. If p-values near .05 are evidentially weak, this means that it is easy for spurious, unreplicable results to implant themselves in the scientific literature. These spurious findings then become “intellectual landmines” (Harris, 2017) that frustrate the further advancement of science.

Despite the statisticians’ lament, practitioners have managed to shrug off their warnings, by and large retaining the threshold level of .05. A cynic may believe that the .05 level has remained popular mostly because of considerations related to career advancement rather than considerations of statistical hygiene. Stats god Dennis Lindley (1986, p. 502) once said: “Perhaps this is why significance tests are so popular with scientists: they make effects appear so easily.”

How to Wake A Sleep Troll if You Must

The primary aspiration of Jalto the Sleep Troll is to continue sleeping. And as far as Jalto is concerned, this is for good reason: his bed is warm and his dreams are pleasant. What more could a Sleep Troll wish for? But hidden in every Sleep Troll’s bedroom are hundreds of alarm clocks: some digital, some analogue, but all of them loud. Invariably, every few years one of the alarm clocks goes off, and with such explosive intensity that the house starts to shake, wildlife from miles away flees the area, and passing birds drop dead from the sky. And just as invariably, Jalto grabs his bludgeon, pulverizes the offending alarm clock, and goes back to sleep. After more than 60 years of Jalto sleeping, his loving wife Zulia has had quite enough. She walks in and pours a bucket of ice water straight between Jalto’s legs. Jalto jerks awake, screams in anger and, arms flailing, he reaches for his bludgeon. But Zulia is prepared; she swings the bucket and hits Jalto so hard that he is launched from his bed, into the air, straight through the bedroom window, and into the garden pond, where he is immediately attacked by a school of piranhas. Next to the pond, there is a stage with Cradle of Filth performing live. Out of the seven documented methods to wake a Sleep Troll and survive the experience, this procedure is by far the most gentle.

In this metaphor, the alarm clocks represent the articles that statisticians have written about p-values near .05. The ice bucket is the recent paper by Dan Benjamin and 71 co-authors, “Redefine statistical significance” (in press for Nature Human Behaviour). The reason why this paper is so effective is clear from the one-sentence summary: “We propose to change the default P-value threshold for statistical significance for claims of new discoveries from 0.05 to 0.005.” In contrast to the earlier statistical work, this lays out a concrete, actionable change. Journal editors and funding institutions could easily enforce the proposal; in other words, for many empirical researchers, this is the point where excrements get real.

For those who bother to read the short “ice bucket” paper, the main proposal is actually rather modest. For the discovery of new effects (!), the authors urge caution in the face of p-values in the range from .005 to .05. In this grey zone, p-values should be called “suggestive”, whereas the word “significant” is reserved for p-values lower than .005. This should not be controversial, and it can be considered a simple rephrasing of the ASA statement calling p-values near .05 “weak evidence”. Moreover, the recommendation is supported by several statistical arguments that demonstrate, for instance, that when p=.05, the observed data are no more than about 2.5 to 3.4 times more likely under a plausible H1 than under H0.
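To get a feel for where the lower end of that range comes from, here is a minimal sketch of one such argument: the calibration from Sellke, Bayarri, and Berger (2001), which bounds the Bayes factor in favor of H1 by -1 / (e · p · ln p) for p < 1/e. The function name `max_bayes_factor` is my own, and this is just one of several possible bounds, not necessarily the exact computation behind every number in the ice bucket paper.

```python
import math


def max_bayes_factor(p: float) -> float:
    """Upper bound on the Bayes factor for H1 over H0: -1 / (e * p * ln p).

    This is the Sellke, Bayarri, & Berger (2001) calibration; it only
    holds for p-values below 1/e.
    """
    if not 0.0 < p < 1.0 / math.e:
        raise ValueError("the bound only holds for 0 < p < 1/e")
    return -1.0 / (math.e * p * math.log(p))


if __name__ == "__main__":
    # Compare the current threshold, an in-between value, and the proposed one.
    for p in (0.05, 0.01, 0.005):
        bound = max_bayes_factor(p)
        print(f"p = {p}: data at most {bound:.1f} times more likely under H1")
```

Under this bound, p=.05 corresponds to a Bayes factor of at most about 2.5, whereas p=.005 corresponds to a bound of roughly 14. Keep in mind that these are upper bounds: for any specific, plausible H1 the evidence will generally be weaker still.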

Nevertheless, opinions about the proposal are divided; to illustrate, a Nature poll on Twitter (“Should the P-value thresholds be lowered? Some leading researchers say it should face tougher standards”) produced an almost perfect split – 562 people said “yes” while 540 said “no”.

A School of Red Herrings

In this first post about the ice bucket paper, I would like to deal with a number of counter-arguments that I consider to be red herrings. By eliminating these from consideration we can then focus on the key point. Disclaimer: several red herrings are already explicitly discussed in the ice bucket paper itself. However, this has not stopped people on social media from bringing them up.

Red Herring #1: There are other problems

Yes there are. Significance seeking, fudging, publication bias, hindsight bias, too much emphasis on testing, world hunger, breast cancer, evil dictatorships: all of these are important problems that need to be addressed in various ways. But the current paper addresses a different problem.

Red Herring #2: This is not really an important problem

Yes it is. Routinely making strong claims from weak evidence will inevitably pollute the literature; moreover, the reality of publication bias ensures that the clean-up effort will be relatively ineffective.

Red Herring #3: But what about an increase in Type-II error rates?

This comment is motivated by an all-or-none view of statistics, where every data set has to lead to a discrete decision: retain or reject the null hypothesis. But we should not feel forced to make such discrete decisions, especially when the evidence is inconclusive. The Type-II error rate perspective considers average performance across all possible data sets; at issue here is the evidential value of a specific subset, for which p falls in the ambiguous zone. And when p is in that zone, the evidence is weak, and we should not feel confident in making strong claims.

Red Herring #4: The new threshold benefits labs that are rich and fields that can collect data cheaply; it also provides the wrong incentives by preventing researchers from studying small effects

This is not evident. First, the increase in sample size is not as prohibitive as one may think (the ice bucket paper mentions an increase of about 70%). Second, multiple studies can be combined to produce evidence that is more compelling than each study in isolation. Third, as stated in the paper:

“We emphasize that this proposal is about standards of evidence, not standards for policy action nor standards for publication. Results that do not reach the threshold for statistical significance (whatever it is) can still be important and merit publication in leading journals if they address important research questions with rigorous methods. This proposal should not be used to reject publications of novel findings with 0.005 < P < 0.05 properly labeled as suggestive evidence.”

Most importantly, twisting the truth –here: pretending that the evidence is stronger than it really is– is never an acceptable solution to a scientific problem.
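The sample size increase of about 70% mentioned above can be checked with a back-of-the-envelope calculation. The sketch below assumes a two-sided z-test, a fixed true effect size, and 80% power; under those assumptions the required sample size is proportional to (z_{1-α/2} + z_{power})². The function name `relative_sample_size` and the default power are my own choices for illustration, and other tests or power levels will give somewhat different numbers.

```python
from statistics import NormalDist


def relative_sample_size(alpha_new: float,
                         alpha_old: float = 0.05,
                         power: float = 0.80) -> float:
    """Ratio of required sample sizes when moving from alpha_old to alpha_new.

    Assumes a two-sided z-test with the same true effect size and the same
    power before and after the change (normal approximation).
    """
    z = NormalDist().inv_cdf  # standard normal quantile function

    def required(alpha: float) -> float:
        # n is proportional to the squared sum of the two critical z-values.
        return (z(1 - alpha / 2) + z(power)) ** 2

    return required(alpha_new) / required(alpha_old)


if __name__ == "__main__":
    ratio = relative_sample_size(0.005)
    print(f"Required sample size grows by about {100 * (ratio - 1):.0f}%")
```

Because the effect size cancels out of the ratio, the relative increase is the same for small and large effects in this setup; only the absolute number of participants differs.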

Red Herring #5: P-values near .05 are strong evidence as long as I have a good theory

No. A plausible theory may increase the prior odds on the alternative hypothesis, but the Bayes factor quantifies the change in belief, not the starting point or the current state of belief.
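The distinction can be made concrete with the odds form of Bayes' rule: posterior odds = Bayes factor × prior odds. In the sketch below, the Bayes factor of 2.5 is an illustrative value in line with the bound for p=.05 discussed earlier, and the three sets of prior odds are hypothetical stand-ins for an implausible, a neutral, and a plausible theory.

```python
def posterior_odds(prior_odds: float, bayes_factor: float) -> float:
    """Odds form of Bayes' rule: posterior odds = Bayes factor * prior odds."""
    return prior_odds * bayes_factor


def odds_to_probability(odds: float) -> float:
    """Convert odds in favor of H1 into a probability for H1."""
    return odds / (1.0 + odds)


if __name__ == "__main__":
    bayes_factor = 2.5  # illustrative: roughly what p = .05 can deliver at most
    # Hypothetical prior odds for H1: implausible, neutral, plausible theory.
    for prior in (0.1, 1.0, 10.0):
        post = posterior_odds(prior, bayes_factor)
        print(f"prior odds {prior:>4}: posterior odds {post:.2f}, "
              f"P(H1 | data) = {odds_to_probability(post):.2f}")
```

A good theory moves the prior odds, and therefore the posterior odds, but the factor by which the data shift belief stays the same in every row. The evidence provided by the data is equally weak regardless of how much one liked the theory to begin with.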

Red Herring #6: Weak evidence is still evidence and warrants publication if the topic is sufficiently important

Yes. And as mentioned above, the paper recommends that weak evidence –if labeled as such– warrants publication. But the practical importance of a finding affects utility, not evidence.

Red Herring #7: This will exacerbate publication bias by increasing the file drawer

Not necessarily. First, there are initiatives to promote publishing results regardless of the outcome, for instance through Chris Chambers’ Registered Reports. Second, the paper explicitly states that the proposal “is about standards of evidence, not standards for policy action nor standards for publication.” Third, the additional results that now supposedly start entering the file drawer would otherwise have been confidently presented as demonstrations of an effect. Fourth, it is hard to believe that a p=.015 result will end up in the file drawer instead of being presented as “suggestive evidence”.

Red Herring #8: P-values should be abandoned altogether

Personally, I agree and consider them the work of the devil. But armies of statisticians have come and gone, producing hundreds of papers making this point, and not much has changed. The message is too easily cast aside. For the next few years, the world is going to stay married to the p-value. In the meantime, we need to be realistic and improve the situation as best we can. The status quo is to claim an effect whenever p<.05; the proposal is to move this to p<.005, and recognize the grey zone for what it is.

Red Herring #9: We should abandon testing and just estimate parameters

Personally, I agree that testing is used in situations where estimation would be more appropriate. However, testing and estimation ultimately serve different goals and each can be useful in the right context. In my own experience, the statistical questions that experimental psychologists wrestle with are related more to testing than to estimation. Regardless, the reality is that testing point null hypotheses is what researchers do, and what they will keep doing for the foreseeable future.

The Key Point

The key point, one that surprisingly few commenters have addressed, is that p-values near .05 are only weak evidence against the null hypothesis. P-values in the range from .005 to .05 (and especially those near .05) deserve skepticism, curiosity, and modest optimism, but not unadulterated adulation. In the next post, I will discuss concrete examples to clarify how weak a p-value near .05 really is.

References

Berger, J. O., & Delampady, M. (1987). Testing precise hypotheses. Statistical Science, 2, 317-352.

Edwards, W., Lindman, H., & Savage, L. J. (1963). Bayesian statistical inference for psychological research. Psychological Review, 70, 193-242.

Harris, R. (2017). Rigor mortis: How sloppy science creates worthless cures, crushes hope, and wastes billions. New York: Basic Books.

Johnson, V. E. (2013). Revised standards for statistical evidence. Proceedings of the National Academy of Sciences of the United States of America, 110, 19313-19317.

Lindley, D. V. (1986). Comment on “tests of significance in theory and practice” by D. J. Johnstone. Journal of the Royal Statistical Society, Series D (The Statistician), 35, 502-504.

Sellke, T., Bayarri, M. J., & Berger, J. O. (2001). Calibration of p values for testing precise null hypotheses. The American Statistician, 55, 62-71.

Wasserstein, R. L., & Lazar, N. A. (2016). The ASA’s Statement on p-values: Context, process, and purpose. The American Statistician, 70, 129-133.

About The Author

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.