The subtitle says it all: “Understanding statistics and probability with Star Wars, Lego, and rubber ducks”. And the author, Will Kurt, does not disappoint: the writing is no-nonsense, the content is understandable, the examples are engaging, and the Bayesian concepts are explained clearly. Here are some of the book’s features that I particularly enjoyed:

- Attention to the fundamentals. Without getting bogged down in axioms and theorems, Kurt stresses that “Probability is a measurement of how strongly we believe things about the world” (p. 14; note that this interpretation also holds for the likelihood function). Kurt outlines the laws of probability theory and discusses how Bayesian reasoning is an extension of pure logic to continuous-valued degrees of conviction.

- A focus on the simplest model. If you are looking for a Bayesian generalized linear mixed model, you won’t find it here. Throughout, Kurt sticks mostly to the binomial distribution and conjugate beta priors. This is a great choice, as the purpose of this book is to get across the key Bayesian concepts.

- Discussion of both parameter estimation and hypothesis testing. There are precious few introductory books on Bayesian inference (few that are really introductory anyway), but those that exist usually shy away from hypothesis testing. I have always found this strange, because, as Kurt demonstrates, both hypothesis testing and parameter estimation follow from exactly the same updating mechanism, namely Bayes’ rule (see also this post and Gronau & Wagenmakers, 2019).

- R code, but sparingly. Throughout the book, Kurt uses snippets of R code to make certain concepts more concrete. The best thing about this is that he does not overdo it. An appendix provides a quick introduction to R.
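The binomial model with a conjugate beta prior, mentioned in the list above, can be illustrated with a minimal Python sketch. The function names and the example counts (7 successes, 3 failures) are illustrative assumptions and are not taken from the book, whose own snippets are in R.

```python
def update_beta(alpha, beta, successes, failures):
    """Conjugate update: a Beta(alpha, beta) prior combined with binomial
    data yields a Beta(alpha + successes, beta + failures) posterior."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Hypothetical example: uniform Beta(1, 1) prior, then 7 successes and 3 failures.
prior = (1, 1)
posterior = update_beta(*prior, successes=7, failures=3)

print(posterior)              # parameters of the posterior beta distribution
print(beta_mean(*posterior))  # posterior mean of the success probability
```

Because the update only adds counts, processing the data in one batch or in several smaller batches leads to the same posterior.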

In my opinion, there are also some opportunities for further improvement:

- The combinatorics of the binomial coefficient are not given an intuitive explanation. Yet, once you know the intuition, it is easy to reconstruct the coefficient on the fly instead of having to memorize it.
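One common intuition (offered here as an assumption, not necessarily the explanation the reviewer has in mind) is to first count ordered selections of k items out of n, and then divide by the k! orderings that describe the same unordered set. A small Python sketch with a hypothetical helper name checks this against brute-force enumeration:

```python
from itertools import combinations, permutations
from math import comb, factorial


def binomial_by_counting(n, k):
    """Count ordered selections of k out of n items, then divide by the
    number of orderings (k!) that correspond to the same unordered set."""
    ordered = len(list(permutations(range(n), k)))  # n * (n-1) * ... * (n-k+1)
    return ordered // factorial(k)


n, k = 5, 2
print(binomial_by_counting(n, k))               # via the counting argument
print(len(list(combinations(range(n), k))))     # via direct enumeration
print(comb(n, k))                               # via the standard library
```

All three lines print the same number, which is the point of the intuition: the coefficient is "ordered picks divided by redundant orderings".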

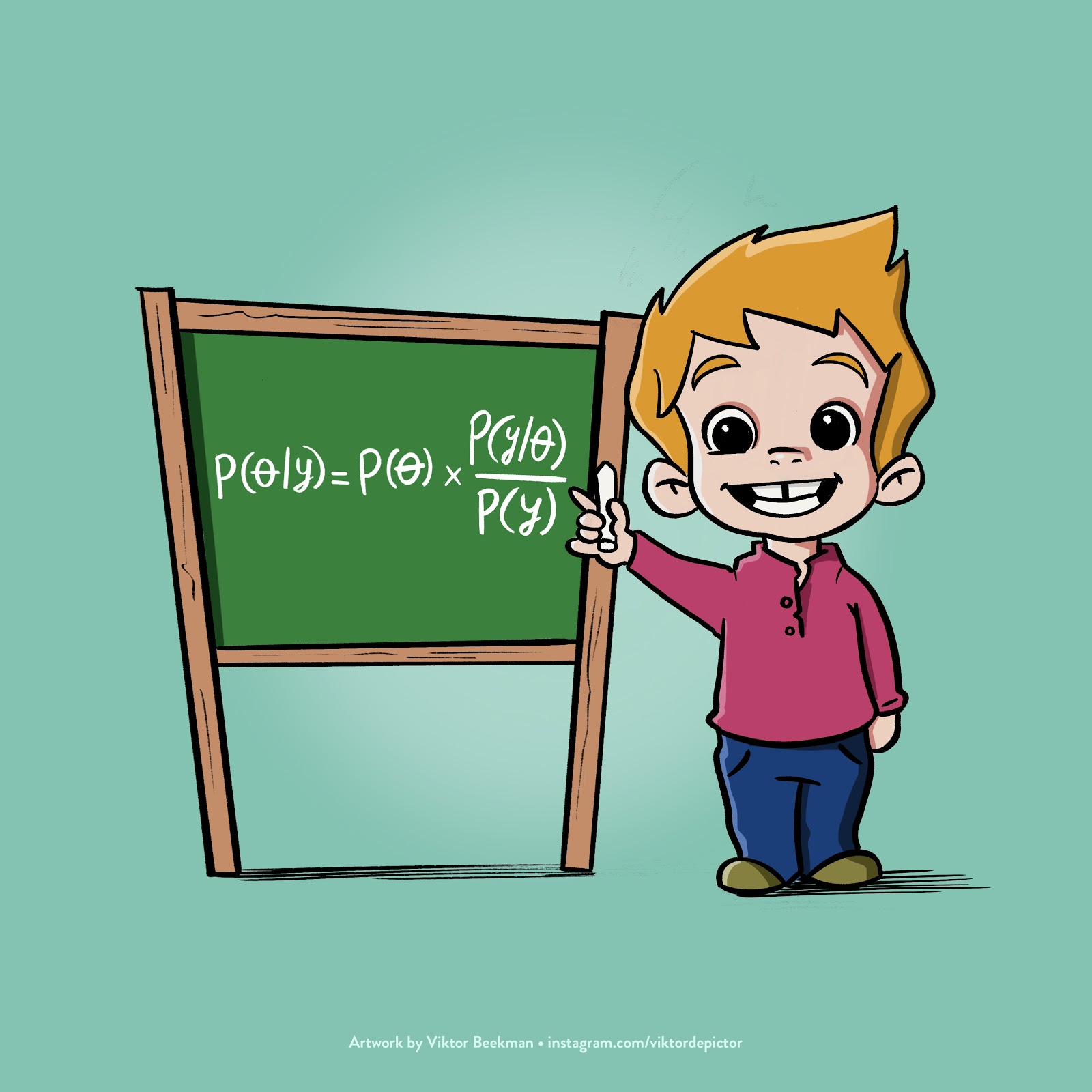

- When Bayes’ rule is introduced, I prefer its predictive form, as shown here:
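In generic notation, the predictive form of Bayes’ rule is commonly written as follows. The symbols (θ for the parameter, "data" for the observations) are assumptions chosen for this sketch and may differ from those used in the book.

```latex
\underbrace{p(\theta \mid \text{data})}_{\text{posterior}}
  \;=\;
\underbrace{p(\theta)}_{\text{prior}}
  \;\times\;
\underbrace{\frac{p(\text{data} \mid \theta)}{p(\text{data})}}_{\text{relative predictive performance}}
```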

The predictive form clarifies that new knowledge (the posterior, on the left-hand side) arises from updating old knowledge (the prior, first factor on the right-hand side) with the evidence that is coming from the data, quantified as relative predictive performance (see also Rouder & Morey, 2019).

- The first chapter in the part “Hypothesis testing: The heart of statistics” (bonus points for the title!) deals with a Bayesian A/B test. Kurt explains:

“In this chapter, we’re going to build our first hypothesis test, an A/B test. Companies often use A/B tests to try out product web pages, emails, and other marketing materials to determine which will work best for customers. In this chapter, we’ll test our belief that removing an image from an email will increase the click-through rate against the belief that removing it will hurt the click-through rate.”

But this is a question of estimation, not of hypothesis testing. As conceptualized by Harold Jeffreys, a problem of hypothesis testing involves the tenability of a single specific parameter value. In most A/B tests, the question of interest is not whether a change will help or hurt, but whether it will help or be ineffective. The hypothesis that the change is ineffective is instantiated by a prior spike at zero. Note that a Bayesian A/B hypothesis test was recently added to JASP (https://jasp-stats.org/2020/04/28/bayesian-reanalyses-of-clinical-a-b-trials-with-jasp-the-heatmap-robustness-check/; see also Gronau, Raj, & Wagenmakers, 2019).
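To make the idea of testing a single specific value concrete, here is a minimal Python sketch of a Bayes factor for an A/B test in which the null hypothesis states that both versions share one click-through rate (a difference of exactly zero). It rests on simplifying assumptions that should be stated loudly: uniform Beta(1, 1) priors on the rates, a two-sided alternative with independent rates, and hypothetical function names. It is not the method implemented in JASP or described in Gronau, Raj, & Wagenmakers (2019).

```python
from math import exp, lgamma


def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def bf01_ab(k_a, n_a, k_b, n_b):
    """Bayes factor in favor of H0 (one shared rate) over H1 (two
    independent rates), assuming uniform Beta(1, 1) priors throughout.
    The binomial coefficients cancel in the ratio and are omitted."""
    # Marginal likelihood under H0: pool the counts of both groups.
    log_m0 = log_beta(k_a + k_b + 1, (n_a - k_a) + (n_b - k_b) + 1)
    # Marginal likelihood under H1: each group has its own rate.
    log_m1 = log_beta(k_a + 1, n_a - k_a + 1) + log_beta(k_b + 1, n_b - k_b + 1)
    return exp(log_m0 - log_m1)


# Hypothetical data: 36 clicks out of 150 emails versus 50 clicks out of 150.
print(bf01_ab(36, 150, 50, 150))
```

Values above 1 indicate that the data are better predicted by the "no difference" hypothesis; values below 1 favor the alternative.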

- A minor quibble is the interpretation of the Bayes factor in chapter 16:

“The Bayes factor is a formula that tests the plausibility of one hypothesis by comparing it to another. The result tells us how many times more likely one hypothesis is than another.”

What is described here is the posterior odds (i.e., belief), not the Bayes factor (i.e., evidence; for details see this post). This is just a slip of the pen, however, since the subsequent text demonstrates that Kurt knows what he’s talking about.
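The distinction can be made concrete with a short Python sketch: the Bayes factor is the factor by which the data change the odds, whereas the posterior odds also depend on the prior odds. The function names and the numbers below are hypothetical and chosen only for illustration.

```python
def posterior_odds(prior_odds, bayes_factor):
    """Posterior odds = prior odds multiplied by the Bayes factor."""
    return prior_odds * bayes_factor


def odds_to_probability(odds):
    """Convert odds for a hypothesis into its probability."""
    return odds / (1 + odds)


bf10 = 10.0  # hypothetical evidence: data are 10 times more likely under H1

# The same evidence leads to different beliefs for different prior odds.
for prior in (1.0, 0.1):
    post = posterior_odds(prior, bf10)
    print(prior, post, odds_to_probability(post))
```

Only when the prior odds equal 1 does the Bayes factor coincide with "how many times more likely one hypothesis is than another".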

Wrapping Up

This book radiates enthusiasm. This is another sense in which the author successfully presents an ultralite version of Jaynes’ work “Probability theory: The logic of science”. The best way to convey the book’s contents and the author’s enthusiasm is to present the final paragraph, “wrapping up”:

“Now that you’ve finished your journey into Bayesian statistics, you can appreciate the true beauty of what you’ve been learning. From the basic rules of probability, we can derive Bayes’ theorem, which lets us convert evidence into a statement expressing the strength of our beliefs. From Bayes’ theorem, we can derive the Bayes factor, a tool for comparing how well two hypotheses explain the data we’ve observed. By iterating through possible hypotheses and normalizing the results, we can use the Bayes factor to create a parameter estimate for an unknown value. This, in turn, allows us to perform countless other hypothesis tests by comparing our estimates. And all we need to do to unlock all this power is use the basic rules of probability to define our likelihood, P(D|H)!”
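The step of iterating through possible hypotheses and normalizing the results can be sketched as a grid approximation. The following Python snippet is an illustration under stated assumptions (a binomial likelihood, a uniform prior, a hypothetical data set of 7 successes and 3 failures, and made-up function names); it is not code from the book.

```python
def grid_posterior(successes, failures, n_points=1001):
    """Evaluate the binomial likelihood on an evenly spaced grid of candidate
    success probabilities and normalize so the weights sum to one.
    A uniform prior is assumed, so the prior drops out of the ratio."""
    grid = [i / (n_points - 1) for i in range(n_points)]
    unnormalized = [t**successes * (1 - t) ** failures for t in grid]
    total = sum(unnormalized)
    return grid, [u / total for u in unnormalized]


grid, post = grid_posterior(successes=7, failures=3)

# Posterior mean as a point estimate of the unknown success probability.
posterior_mean = sum(t * p for t, p in zip(grid, post))
print(posterior_mean)
```

With a uniform prior, the result should be close to the mean of the exact Beta(8, 4) posterior, which is 2/3.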

Conclusion

As a first introduction to Bayesian inference, this book is hard to beat. It nails the key concepts in a compelling and instructive fashion. I give it full marks: five out of five stars. Perhaps a future edition will make use of a new JASP module that we currently have under development (no spoilers!).

Want to Know More?

An interview with Will Kurt is here.

Another review of “Bayesian statistics the fun way” is here.

Will Kurt’s blog, “Count Bayesie”, is here.

References

Gronau, Q. F., Raj, A., & Wagenmakers, E.-J. (2019). Informed Bayesian inference for the A/B test. Manuscript submitted for publication.

Gronau, Q. F., & Wagenmakers, E.-J. (2019). Rejoinder: More limitations of Bayesian leave-one-out cross-validation. Computational Brain & Behavior, 2, 35-47.

Jaynes, E. T. (2003). Probability theory: The logic of science. Cambridge: Cambridge University Press.

Kurt, W. (2019). Bayesian statistics the fun way. San Francisco: No Starch Press.

Perezgonzalez, J. D. (2020). Bayesian benefits for the pragmatic researcher. Current Directions in Psychological Science, 25, 169-176.

About The Author

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.