The Misconception

Posterior Bayes factors are a good idea: they provide a measure of evidence but are relatively unaffected by the shape of the prior distribution.

The Correction

Posterior Bayes factors use the data twice, effectively biasing the outcome in favor of the more complex model.

The Explanation

The standard Bayes factor is the ratio of predictive performance between two rival models. For each model $\mathcal{M}_i$, its predictive performance $p(y \mid \mathcal{M}_i)$ is computed as the likelihood for the observed data, averaged over the prior distribution for the model parameters, $p(\theta \mid \mathcal{M}_i)$. Suppressing the dependence on the model, this yields $p(y) = \int p(y \mid \theta) \, p(\theta) \, \mathrm{d}\theta$. Note that, as the words imply, “predictions” are generated from the “prior”. The consequence, of course, is that the shape of the prior distribution influences the predictions, and thereby the Bayes factor. Some consider this prior dependence to be a severe limitation; indeed, it would be more convenient if the observed data could be used to assist the models in making predictions. After all, it is easier to make “predictions” about the past than about the future.

The wish to produce predictions without opening oneself up to the unwanted impact of the prior distribution has spawned an alternative to the standard Bayes factor, one in which predictions are obtained not from the prior distribution, but from the posterior distribution. This “postdictive” performance is then given by averaging the likelihood over the posterior, yielding $\int p(y \mid \theta) \, p(\theta \mid y) \, \mathrm{d}\theta$. This procedure was proposed by Aitkin (1991). Several discussants of the Aitkin paper pointed out that the method uses the data twice, inviting inferential absurdities. To see the double use of the data, note that the posterior distribution $p(\theta \mid y)$ is proportional to $p(y \mid \theta) \, p(\theta)$, such that the likelihood $p(y \mid \theta)$ enters the formula twice.
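The double use of the data can be made explicit by expanding the postdictive average with Bayes' rule. The display below is a sketch rather than a quotation from Aitkin (1991); it writes $p(y \mid \theta)$ for the likelihood, $p(\theta)$ for the prior, and $p(\theta \mid y)$ for the posterior:

$$\int p(y \mid \theta) \, p(\theta \mid y) \, \mathrm{d}\theta = \int p(y \mid \theta) \, \frac{p(y \mid \theta) \, p(\theta)}{p(y)} \, \mathrm{d}\theta = \frac{\int p(y \mid \theta)^{2} \, p(\theta) \, \mathrm{d}\theta}{\int p(y \mid \theta) \, p(\theta) \, \mathrm{d}\theta}$$

The squared likelihood in the numerator is where the data enter a second time.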

As his rebuttal shows, Aitkin was impressed neither by the general argument nor by the concrete examples. Here we provide another example of posterior Bayes factors in action. Consider a binomial problem where $\mathcal{H}_0$ indicates a general law (“all apples grow on apple trees”; “all electrically neutral atoms have an equal number of protons and electrons”; “all zombies are hungry”; “all ravens are black”; “all even integers greater than two are the sum of two prime numbers”). Hence, $\mathcal{H}_0: \theta = 1$. Our alternative model $\mathcal{H}_1$ relaxes the restriction that $\theta = 1$ and assigns $\theta$ a prior distribution; for mathematical convenience, here we use a uniform prior from 0 to 1, that is, $\mathcal{H}_1: \theta \sim \text{Beta}(1, 1)$. Furthermore, assume we only encounter confirmatory instances, that is, observations consistent with $\mathcal{H}_0$. In this situation, every confirmatory instance ought to increase our confidence in the general law $\mathcal{H}_0$, something that Polya (1954, p. 4) called “the fundamental inductive pattern”. As we will demonstrate below, posterior Bayes factors violate the fundamental pattern (for a discussion of how Bayesian leave-one-out cross-validation, or LOO, also violates the fundamental pattern, see Gronau & Wagenmakers, in press a, b).

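Consider first the general expression for the Bayes factor in favor of $\mathcal{H}_0: \theta = \theta_0$ over $\mathcal{H}_1: \theta \sim \text{Beta}(a, b)$:

$$\text{BF}_{01} = \frac{\Gamma(a) \, \Gamma(b)}{\Gamma(a+b)} \, \frac{\Gamma(a+b+n)}{\Gamma(a+s) \, \Gamma(b+f)} \, \theta_0^{s} \, (1-\theta_0)^{f},$$

where $s$ is the number of successes, $f$ the number of failures, $n = s + f$ the number of attempts, and $\Gamma$ the gamma function, which for positive integers reduces to the factorial function:

$$\Gamma(k) = (k-1)!$$

We then simplify this equation by inserting the following information: (1) $\theta_0 = 1$ (from the definition of $\mathcal{H}_0$ as a general law); (2) $s = n$, so $f = 0$, because only successes are observed. This leaves:

$$\text{BF}_{01} = \frac{\Gamma(a) \, \Gamma(a+b+n)}{\Gamma(a+b) \, \Gamma(a+n)}.$$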
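As a numerical sanity check on this simplification, the following Python sketch evaluates the Bayes factor in its standard beta-binomial form and compares it with the reduced expression for the all-successes case. The function names (`log_bf01_general`, `log_bf01_all_successes`) are hypothetical, and the beta-binomial form is an assumption about the exact expression intended in the text.

```python
from math import lgamma, log, exp, isclose


def _count_times_log(count, p):
    """Return count * log(p), treating 0 * log(0) as 0."""
    if count == 0:
        return 0.0
    if p == 0.0:
        return float("-inf")
    return count * log(p)


def log_bf01_general(s, f, a, b, theta0):
    """Log Bayes factor for H0: theta = theta0 versus H1: theta ~ Beta(a, b)."""
    n = s + f
    # Log likelihood of the observed sequence under the point hypothesis H0.
    log_h0 = _count_times_log(s, theta0) + _count_times_log(f, 1.0 - theta0)
    # Log marginal likelihood under H1: log of B(a + s, b + f) / B(a, b).
    log_h1 = (lgamma(a + s) + lgamma(b + f) - lgamma(a + b + n)
              - lgamma(a) - lgamma(b) + lgamma(a + b))
    return log_h0 - log_h1


def log_bf01_all_successes(n, a, b):
    """Reduced form for theta0 = 1, s = n, f = 0."""
    return lgamma(a) + lgamma(a + b + n) - lgamma(a + b) - lgamma(a + n)


if __name__ == "__main__":
    for n in (1, 5, 20):
        general = exp(log_bf01_general(n, 0, 2.0, 3.0, 1.0))
        reduced = exp(log_bf01_all_successes(n, 2.0, 3.0))
        print(n, general, reduced)
```

Working on the log scale with `math.lgamma` avoids overflow of the gamma function for large `n`.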

If we assume that $a = b = 1$ (the uniform prior for $\theta$ under $\mathcal{H}_1$) we obtain $\text{BF}_{01} = n + 1$: that is, every time a confirmatory instance is observed, the Bayes factor in favor of the general law increases by one. Thus, the standard Bayes factor generates the fundamental inductive pattern. In order to examine what the posterior Bayes factor produces, note that a $\text{Beta}(1, 1)$ prior distribution combined with $n$ successes yields a $\text{Beta}(n+1, 1)$ posterior distribution. This means that, under the starting assumption that $a = b = 1$, we can use the general equation by plugging in the posterior $\text{Beta}(n+1, 1)$ instead of the prior $\text{Beta}(1, 1)$. Doing so yields

$$\text{PBF}_{01} = \frac{\Gamma(n+1) \, \Gamma(2n+2)}{\Gamma(n+2) \, \Gamma(2n+1)} = \frac{2n+1}{n+1}.$$
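The two closed forms for the uniform-prior case, $n + 1$ for the standard Bayes factor and $(2n+1)/(n+1)$ for the posterior Bayes factor, can be checked with exact rational arithmetic. The sketch below assumes integer beta parameters so that the gamma function reduces to a factorial; all function names are hypothetical.

```python
from fractions import Fraction
from math import factorial


def gamma_int(k):
    """Gamma function at a positive integer: Gamma(k) = (k - 1)!."""
    return factorial(k - 1)


def bf01_all_successes(n, a, b):
    """Bayes factor for H0: theta = 1 vs H1: theta ~ Beta(a, b) after n successes."""
    return Fraction(gamma_int(a) * gamma_int(a + b + n),
                    gamma_int(a + b) * gamma_int(a + n))


def standard_bf01(n):
    """Standard Bayes factor: predictions come from the uniform Beta(1, 1) prior."""
    return bf01_all_successes(n, 1, 1)


def posterior_bf01(n):
    """Posterior Bayes factor: the Beta(n + 1, 1) posterior replaces the prior."""
    return bf01_all_successes(n, n + 1, 1)


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5, 100):
        print(n, standard_bf01(n), posterior_bf01(n), float(posterior_bf01(n)))
```

The standard Bayes factor grows without bound, whereas the posterior Bayes factor stays below 2 for every `n`.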

Table 1 below shows the results for various numbers of confirmatory instances $n$. It is clear that the evidence in favor of the general law remains weak no matter how many confirmatory instances are observed. Indeed, an infinite number of confirmatory instances results in a posterior Bayes factor of only 2 (see footnote 1); if $\mathcal{H}_0$ and $\mathcal{H}_1$ are deemed equally plausible a priori, an infinite number of observations perfectly predicted by $\mathcal{H}_0$ manages to raise the probability of the general law from $1/2$ to an unimpressive $2/3$. Moreover, going from a meagre 5 confirmatory instances to an infinite number only raises the posterior Bayes factor from about 1.83 to 2; under equal prior odds, this means that an infinite amount of confirmatory information from $n = 6$ onwards takes the posterior probability for the general law from $11/17 \approx 0.65$ to $2/3 \approx 0.67$, a minuscule increase of about 2 percentage points.
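The step from a Bayes factor to a posterior model probability is a one-line odds calculation. The following sketch (with the hypothetical helper `posterior_prob_h0`) reproduces the two probabilities discussed above under equal prior odds, using exact fractions.

```python
from fractions import Fraction


def posterior_prob_h0(bf01, prior_prob_h0=Fraction(1, 2)):
    """Posterior probability of H0 given a Bayes factor and a prior probability."""
    prior_odds = prior_prob_h0 / (1 - prior_prob_h0)
    posterior_odds = bf01 * prior_odds
    return posterior_odds / (1 + posterior_odds)


# Posterior Bayes factor after 5 confirmations (11/6) and in the limit (2).
p_five = posterior_prob_h0(Fraction(11, 6))
p_limit = posterior_prob_h0(Fraction(2))

if __name__ == "__main__":
    print(p_five, float(p_five))
    print(p_limit, float(p_limit))
    print(float(p_limit - p_five))
```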

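| No. confirmations $n$ | $\text{PBF}_{01} = (2n+1)/(n+1)$ |
|---|---|
| 1 | $3/2 = 1.50$ |
| 2 | $5/3 \approx 1.67$ |
| 3 | $7/4 = 1.75$ |
| 4 | $9/5 = 1.80$ |
| 5 | $11/6 \approx 1.83$ |
| $\infty$ | 2 |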

Table 1. As the number of confirmatory instances increases, the posterior Bayes factor $\text{PBF}_{01}$ provides weak and bounded support for the binomial general law $\mathcal{H}_0: \theta = 1$ over the alternative model $\mathcal{H}_1: \theta \sim \text{Beta}(1, 1)$.

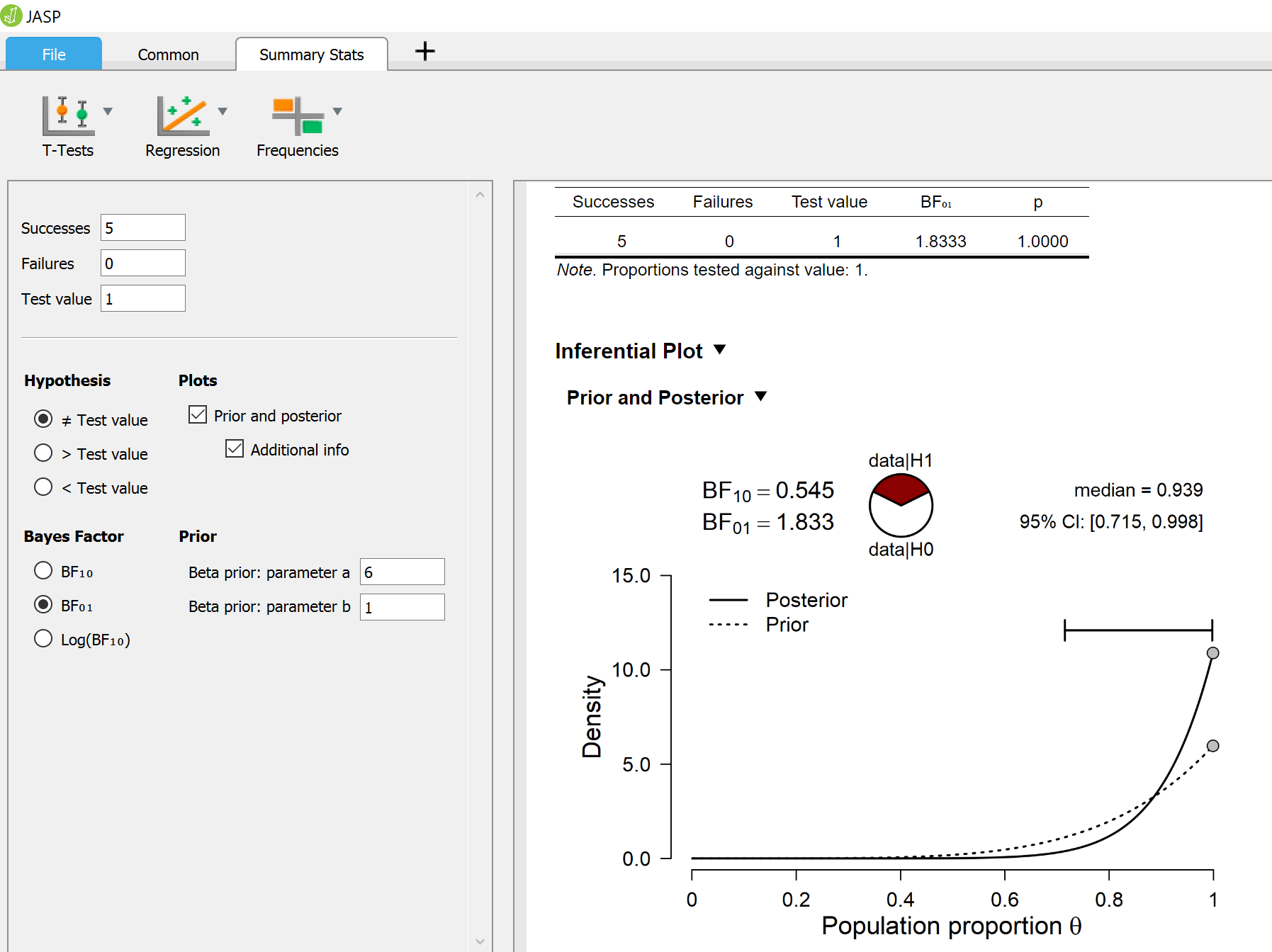

The result from Table 1 can be easily confirmed using the SumStat module in JASP (Ly et al., 2018): the number of successes is $n$, failures $0$, the test value is $\theta_0 = 1$, and the prior distribution is set to the posterior, $a = n + 1$, $b = 1$. The Bayes factor in favor of the general law $\mathcal{H}_0$ equals $(2n+1)/(n+1)$.

Concluding Comments

As demonstrated above, posterior Bayes factors violate the fundamental inductive pattern. No matter how many apples one sees that grow from apple trees, no matter how many even integers greater than two are inspected that turn out to be the sum of two prime numbers, our confidence in these general laws would be bounded, and would forever remain caught in the evidential region that Jeffreys (1961, appendix B) called “not worth more than a bare mention”. This is preposterous.

The reason for such problematic behavior is the same as for LOO (Gronau & Wagenmakers, in press a, b): when two models are provided advance access to the data they are supposed to predict, this benefits the complex model much more than the simple model. If the data are consistent with the simple model, the complex model will abuse the advance access to the data to tune its parameters so that it effectively mimics the simple model. The comparison is then not between a complex model and a simple model, but between two simple and highly similar models, and such a comparison cannot produce a diagnostic result.
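The mimicry can be made concrete for the binomial example. Under the uniform prior, the complex model's average likelihood for $n$ successes is $1/(n+1)$, which shrinks toward zero; under the $\text{Beta}(n+1, 1)$ posterior it is $(n+1)/(2n+1)$, which never drops below one half, while the general law always predicts the data with probability 1. The sketch below is an illustration with hypothetical function names and uses exact fractions.

```python
from fractions import Fraction


def prior_predictive_h1(n):
    """Average of theta**n over the uniform prior: 1 / (n + 1)."""
    return Fraction(1, n + 1)


def postdictive_h1(n):
    """Average of theta**n over the Beta(n + 1, 1) posterior: (n + 1) / (2n + 1)."""
    return Fraction(n + 1, 2 * n + 1)


def posterior_mean_h1(n):
    """Mean of the Beta(n + 1, 1) posterior; it approaches 1 as n grows."""
    return Fraction(n + 1, n + 2)


if __name__ == "__main__":
    for n in (1, 5, 50, 500):
        print(n,
              float(prior_predictive_h1(n)),
              float(postdictive_h1(n)),
              float(posterior_mean_h1(n)))
```

Because the general law assigns probability 1 to the data, the reciprocals of the two averages are the standard and posterior Bayes factors, respectively.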

In sum, we believe that models, hypotheses, and theories need to be assessed by the predictions that they make. Predictions, as the word suggests, need to come from the prior, not from the posterior. The famous Danish maxim tells us that “prediction is hard, particularly about the future”; the difficulty is real, but this does not license us to abandon the enterprise and start assessing our theories by postdiction instead.

Footnotes

1 For a general $\text{Beta}(a, b)$ prior, the limit of the posterior Bayes factor is $2^{b}$. See appendix for a derivation.

References

Aitkin, M. (1991). Posterior Bayes factors. Journal of the Royal Statistical Society. Series B (Methodological), 53, 111-142.

Gronau, Q. F., & Wagenmakers, E.-J. (in press a). Limitations of Bayesian leave-one-out cross-validation for model selection. Computational Brain & Behavior. Preprint.

Gronau, Q. F., & Wagenmakers, E.-J. (in press b). Rejoinder: More limitations of Bayesian leave-one-out cross-validation. Computational Brain & Behavior. Preprint.

Jeffreys, H. (1961). Theory of probability (3rd ed.). Oxford: Oxford University Press.

Ly, A., Raj, A., Etz, A., Marsman, M., Gronau, Q. F., & Wagenmakers, E.-J. (2018). Bayesian reanalyses from summary statistics: A guide for academic consumers. Advances in Methods and Practices in Psychological Science, 1(3), 367-374.

Polya, G. (1954). Mathematics and plausible reasoning: Vol. II. Patterns of plausible inference. Princeton, NJ: Princeton University Press.

Wendel, J. (1948). Note on the gamma function. The American Mathematical Monthly, 55, 563-564.

Appendix

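Let $\theta$ denote the success probability of a binomial distribution and suppose that the $n$ observations are all successes. The posterior Bayes factor (Aitkin, 1991) for comparing the general law hypothesis $\mathcal{H}_0: \theta = 1$ to the alternative $\mathcal{H}_1: \theta \sim \text{Beta}(a, b)$ is given by:

$$\text{PBF}_{01} = \frac{\Gamma(a+n) \, \Gamma(a+b+2n)}{\Gamma(a+b+n) \, \Gamma(a+2n)}.$$

The limit of the posterior Bayes factor as $n$ goes to infinity is given by:

$$\lim_{n \to \infty} \text{PBF}_{01} = \lim_{n \to \infty} \frac{\Gamma(a+n)}{\Gamma(a+n+b)} \cdot \frac{\Gamma(a+2n+b)}{\Gamma(a+2n)} = \lim_{n \to \infty} \frac{(a+2n)^{b}}{(a+n)^{b}} = 2^{b},$$

where we used the well-known result that $\lim_{x \to \infty} \frac{\Gamma(x+\alpha)}{x^{\alpha} \, \Gamma(x)} = 1$, for real $x$ and $\alpha$ (Wendel, 1948).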
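The limit can also be checked numerically. The sketch below evaluates the posterior Bayes factor for a $\text{Beta}(a, b)$ prior on the log scale and compares it with $2^{b}$ for a large number of successes; the function name `posterior_bf01_beta` and the chosen parameter values are illustrative assumptions.

```python
from math import lgamma, exp


def posterior_bf01_beta(n, a, b):
    """Posterior Bayes factor for H0: theta = 1 vs H1: theta ~ Beta(a, b), n successes."""
    log_pbf = (lgamma(a + n) + lgamma(a + b + 2 * n)
               - lgamma(a + b + n) - lgamma(a + 2 * n))
    return exp(log_pbf)


if __name__ == "__main__":
    n_large = 10**6
    for a, b in [(1.0, 1.0), (2.0, 0.5), (0.5, 3.0)]:
        print(a, b, posterior_bf01_beta(n_large, a, b), 2.0 ** b)
```

The bound depends only on $b$, the prior parameter attached to failures, and not on $a$.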

About The Authors

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.

Quentin Gronau

Quentin is a PhD candidate at the Psychological Methods Group of the University of Amsterdam.