Written by Annie “The Duchess of Poker” Duke, Thinking in Bets is a national bestseller, and for good reason. The writing style is direct and to the point, and the advice is motivated by concrete examples drawn from the author’s own experience. For instance, one anecdote concerns a bet among a group of friends on whether one of them, “Ira the Whale”, could eat 100 White Castle burgers in a single sitting. David Grey, one of the author’s friends, bet $200 on the Whale:

“When they got to White Castle, Ira the Whale decided to order the burgers twenty at a time. David knew he was a lock to win as soon as Ira the Whale ordered the first twenty, because Ira the Whale also ordered a milkshake and fries.

“After finishing the 100 burgers and after he and David collected their bets, Ira the Whale ordered another twenty burgers to go, ‘for Mrs. Whale.’” (Duke, 2018, p. 135)

In general, I fully agree with the book’s advice, and some of the exercises in re-thinking that the author suggests are original and worthwhile. Here are some of the book’s main claims:

- Every decision is a bet.

- We bet on our beliefs.

- Whether a decision is good or bad is determined by the process, NOT by the final outcome.

- By articulating uncertainty as a bet, we avoid black-and-white thinking, we become accountable for our beliefs, and it becomes easier to adjust our opinions.

- By embracing uncertainty, we can learn more effectively and hence formulate more accurate beliefs, which in turn allow better bets in the future.

- People are exceptionally poor at updating their beliefs, particularly because of hindsight bias and self-serving bias (and a host of other biases). It takes conscious effort to overcome these biases, but it’s worth it.

- Our decision making improves when we expose ourselves to a diversity of viewpoints rather than dwell in our own echo chambers.

- Better decisions can be made when we imagine different future scenarios, their plausibilities, and their utilities.
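The last claim in this list amounts to expected-utility reasoning: weight each scenario’s utility by its plausibility, add up, and pick the option with the highest total. Below is a minimal Python sketch of that calculation, using a picnic-versus-rain choice of the kind Lindley describes. The probability of rain, the utilities, and the names `UTILITY`, `expected_utility` and `best_action` are all hypothetical, invented for illustration rather than taken from either book.

```python
from fractions import Fraction

# Hypothetical numbers: the chance of rain and all utilities below are
# illustrative assumptions, not values from Duke or Lindley.
P_RAIN = Fraction(3, 10)

# UTILITY[action][state]: how much we value each (action, outcome) pair.
UTILITY = {
    "picnic": {"rain": -5, "dry": 10},
    "stay home": {"rain": 3, "dry": 2},
}


def expected_utility(action: str, p_rain: Fraction) -> Fraction:
    """Weight each scenario's utility by its probability and sum."""
    u = UTILITY[action]
    return p_rain * u["rain"] + (1 - p_rain) * u["dry"]


def best_action(p_rain: Fraction) -> str:
    """Choose the action with the highest expected utility."""
    return max(UTILITY, key=lambda a: expected_utility(a, p_rain))


if __name__ == "__main__":
    for action in UTILITY:
        print(action, expected_utility(action, P_RAIN))  # picnic 11/2, stay home 23/10
    print("best:", best_action(P_RAIN))  # best: picnic
```

With these invented utilities the picnic is preferred whenever the probability of rain is below 1/2 (where 10 - 15p = 2 + p); above that, staying home wins. Fractions keep the arithmetic exact, so the comparison is not muddied by floating-point rounding.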

Overall, this book sparks joy, and I’m giving it four out of five stars. I am withholding the remaining star because, unwittingly, the book also presents some mental traps that can easily ensnare an innocent reader. Below is a list of concerns.

Trap 1: Key Literature is Missing

It is unreasonable to demand that a book intended as a national bestseller feature a comprehensive literature review. Indeed, had this book come with a comprehensive literature review, it would not have been a national bestseller. However, it does grate that no reference is made to the work of Lindley (1985, 2006), especially since Lindley emphasized several of the book’s main claims. For instance, in his 1985 book “Making Decisions”, Lindley states that “life is a gamble” (p. 61), and on p. 19 he writes:

“We are all faced with uncertain events like ‘rain tomorrow’ and have to act in the reality of that uncertainty—shall we arrange for a picnic? We do not ordinarily refer to these as gambles but what word can we use? In this sense all of us ‘gamble’ every day of our lives”

Earlier, the same point was stressed by another prominent Bayesian, Frank Ramsey (1926):

“(…) all our lives we are in a sense betting. Whenever we go to the station we are betting that a train will really run, and if we had not a sufficient degree of belief in this we should decline the bet and stay at home. The options God gives us are always conditional on our guessing whether a certain proposition is true.”
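Ramsey’s point can be made concrete with a small calculation: a degree of belief p corresponds to fair odds of p/(1 - p) to 1, and a bet is worth taking only when its expected gain is positive. The Python sketch below is illustrative only; `fair_odds` and `accept_bet` are hypothetical helper names, and the stake, payout, and beliefs in the example are invented rather than taken from Ramsey.

```python
from fractions import Fraction


def fair_odds(p: Fraction) -> Fraction:
    """Odds in favour implied by a degree of belief p, i.e. p/(1 - p) to 1."""
    if not 0 < p < 1:
        raise ValueError("degree of belief must lie strictly between 0 and 1")
    return p / (1 - p)


def accept_bet(p: Fraction, stake: Fraction, payout: Fraction) -> bool:
    """Accept a bet that costs `stake` and returns `payout` (stake included)
    if the proposition is true, exactly when the expected gain is positive."""
    expected_gain = p * payout - stake
    return expected_gain > 0


if __name__ == "__main__":
    stake, payout = Fraction(1), Fraction(6, 5)  # invented terms of the bet
    for belief in (Fraction(9, 10), Fraction(4, 5)):  # belief that the train runs
        print(belief, "odds:", fair_odds(belief),
              "accept:", accept_bet(belief, stake, payout))
```

With a stake of 1 and a payout of 6/5, the break-even belief is 5/6: someone 90% sure that the train will run should take the bet, while someone only 80% sure should, in Ramsey’s words, “decline the bet and stay at home.”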

Another key idea in Lindley’s work is that it would behoove decision makers (and politicians in particular) to articulate their uncertainty explicitly.

In general, the main thrust of Thinking in Bets (i.e., life is a bet, we bet on our beliefs, and our beliefs are based on knowledge of the world) is firmly Bayesian, but, to the best of my knowledge, the word “Bayesian” does not occur in the book. For instance, the author writes:

“One might guess that scientists would be more accurate if they had to bet on the likelihood that results would replicate (…)” (Duke, 2018, p. 149)

This idea had already been articulated by the arch-Bayesian Augustus De Morgan, and early Bayesians such as Pierre-Simon Laplace formulated their statistical conclusions in terms of bets.

Trap 2: Flirting with the Heterodox Academy

On several pages, the author extols the virtues of the “Heterodox Academy”. As the author explains on p. 172:

“One of the chief concerns of Heterodox Academy is figuring out how to get conservatives to become social scientists or engage in exploratory thought with social scientists.”

I am not a US academic, and I did not know about the Heterodox Academy before reading Thinking in Bets, but this statement immediately raised a red flag for me. Yes, the general point seems innocent enough: of course it is wise to learn about differing viewpoints. Unfortunately, that general rule holds only when the opposing party has a minimum level of knowledge and is acting in good faith. Hobbits who seek the best way to Mount Doom should not ask Sauron for directions. A school board intent on giving children advice on a proper diet should not consult McDonald’s. A government intent on reducing the number of mass shootings should not consult the NRA. A Bayesian who develops a new test should not consult a frequentist. People who want fair and balanced reporting should not watch Fox News.

It is true that there is a political imbalance in academia: people whose main job is to think (and teach others how to think) overwhelmingly reject the idea of reducing taxes on the super-wealthy, they reject the idea of separating children from their parents, and they reject a president who demeans the office. And it is true that this is a concern, but it is a concern not for academia but for conservatives. When the intellectual engine of the country rejects your policies and your politicians wholesale, it may be time to consider whether or not you are on the right track.

The book implies that social scientists would do well to watch Fox News to gain a different perspective. By the same logic, one might suggest that the Elves of Middle-earth could learn a lot from attending lectures by Saruman the White. Personally, I would sooner watch Dancer in the Dark on repeat than turn to Fox News.

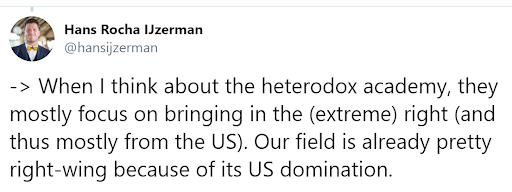

After writing this post, I came across a useful alternative perspective on the Heterodox Academy, from a tweet by Hans IJzerman:

So, instead of adopting the US-centric perspective that social scientists are too left-wing, we may adopt the more global perspective that social scientists in the US are currently too right-wing. In recognition of this more global perspective, will the Heterodox Academy start advocating that social scientists “engage in exploratory thought” with more progressive people rather than with conservatives? Somehow I doubt it.

Trap 3: Naive Ideas about Science

On p. 72, the author writes a paragraph about the modus operandi (MO) of empirical science. My comments are in square brackets:

“When scientists publish results of experiments, they share with the rest of their community their methods of gathering and analyzing the data, the data itself [unfortunately, this is still rare – EJ], and their confidence in that data [confidence in the conclusions, I assume; and unfortunately, researchers do not state their confidence – EJ]. That makes it possible for others to assess the quality of the information being presented, systematized through peer review before publication. Confidence in the results is expressed through both p-values [these do not express confidence in the results – EJ], the probability one would expect to get the result that was actually observed […or more extreme results, given that the null hypothesis is true – EJ] (akin to declaring your confidence on a scale of zero to ten) [No. If you want something like this, you need a Bayesian analysis – EJ], and confidence intervals (akin to declaring ranges of plausible alternatives) [No. Again, this requires a Bayesian analysis – EJ]. Scientists, by institutionalizing the expression of uncertainty, invite their community to share relevant information and to test and challenge the results and explanations.”

I am not blaming the author for providing an incorrect interpretation of p-values and confidence intervals. Nobody gets this right. But I am disappointed that the author does not know that the approach she advocates in her book (i.e., a learning cycle of betting on beliefs) is fundamentally incompatible with the statistical MO in the empirical sciences. In fact, p-values are a prime example of the black-and-white thinking that rightly provokes the author’s ire throughout her book. A value of p=.03 is not meant to express uncertainty and invite a challenge; more often, it is meant to shut reviewers up (“we’ve rejected the null hypothesis according to the standard statistical procedures in the field, now swallow our conclusions and publish this work”). Why most researchers have yet to adopt the approach sketched in the author’s book will be food for future philosophers of science.
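The distinction drawn in the bracketed comments above can be illustrated with a toy binomial example. The Python sketch below assumes hypothetical data (60 successes in 100 trials) and, for the Bayesian side, a uniform Beta(1, 1) prior on the success rate θ; both are assumptions made for illustration, and the helper names are hypothetical. The first function computes a one-sided p-value, the probability of data at least as extreme as those observed given that θ = 0.5. The second computes the posterior probability that θ > 0.5 given the data, which is the kind of statement about a hypothesis that “declaring your confidence on a scale of zero to ten” seems to call for.

```python
from math import comb


def binom_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n trials with success rate p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def one_sided_p_value(k: int, n: int, theta0: float = 0.5) -> float:
    """P(X >= k | theta = theta0).

    The probability of the observed count *or a more extreme one*,
    computed under the assumption that the null hypothesis is true.
    It is a statement about data, not about theta.
    """
    return sum(binom_pmf(i, n, theta0) for i in range(k, n + 1))


def posterior_prob_above(k: int, n: int, cutoff: float = 0.5) -> float:
    """P(theta > cutoff | data) under an assumed uniform Beta(1, 1) prior.

    The posterior is Beta(a, b) with a = k + 1, b = n - k + 1. For integer
    a and b, P(theta <= x) = P(Binomial(a + b - 1, x) >= a), hence
    P(theta > x) = P(Binomial(a + b - 1, x) <= a - 1).
    """
    a, b = k + 1, n - k + 1
    m = a + b - 1
    return sum(binom_pmf(i, m, cutoff) for i in range(a))


if __name__ == "__main__":
    k, n = 60, 100  # hypothetical data: 60 successes in 100 trials
    print(f"one-sided p-value:     {one_sided_p_value(k, n):.4f}")
    print(f"P(theta > 0.5 | data): {posterior_prob_above(k, n):.4f}")
```

The two numbers answer different questions: the p-value conditions on the null hypothesis and describes data, whereas the posterior probability conditions on the data and describes θ. In this one-sided, flat-prior example the p-value and the posterior probability that θ ≤ 0.5 happen to be of similar size. That coincidence does not make them the same quantity, and for a point null hypothesis the two can diverge sharply.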

There is more to say about Thinking in Bets, and I will continue this review next week. Let me stress again that, despite my critique above, this book sparks joy (4 out of 5 stars)!

References

Duke, A. (2018). Thinking in bets: Making smarter decisions when you don’t have all the facts. New York: Portfolio/Penguin.

Lindley, D. V. (1985). Making decisions (2nd ed.). London: Wiley.

Lindley, D. V. (2006). Understanding uncertainty. Hoboken: Wiley.

Ramsey, F. P. (1926). Truth and probability. In R. B. Braithwaite (Ed.), The foundations of mathematics and other logical essays (pp. 156–198). London: Kegan Paul.

About the Author

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is a professor in the Psychological Methods Group at the University of Amsterdam.