Several researchers have proposed that the capacity for mental control is a limited resource, one that can be temporarily depleted after having engaged in a taxing cognitive activity. This hypothetical phenomenon — called ego depletion — has been hotly debated, and its very existence has been called into question. We ourselves are in the midst of a multi-lab collaborative research effort to address the issue. This is why we were particularly intrigued, when, just a few days ago, the Twitterverse attended us to the preprint “Ego depletion reduces attentional control: Evidence from two high-powered preregistered experiments”. The abstract of the manuscript reads as follows:

“Two preregistered experiments with over 1000 participants in total found evidence of an ego depletion effect on attention control. Participants who exercised self-control on a writing task went on to make more errors on Stroop tasks (Experiment 1) and the Attention Network Test (Experiment 2) compared to participants who did not exercise self-control on the initial writing task. The depletion effect on response times was non-significant. A mini meta-analysis of the two experiments found a small (d = 0.20) but significant increase in error rates in the controlled writing condition, thereby providing clear evidence of poorer attention control under ego depletion. These results, which emerged from large preregistered experiments free from publication bias, represent the strongest evidence yet of the ego depletion effect.”

These are bold claims. The authors conducted two high-powered studies, with preregistration, and “found evidence”, “clear evidence”, “the strongest evidence yet”. Let us examine these claims with a critical eye. In order to do this, we will follow the maxim from Ibn al-Haytham (around 1025 AD):

“It is thus the duty of the man who studies the writings of scientists, if learning the truth is his goal, to make himself an enemy of all that he reads, and, applying his mind to the core and margins of its content, attack it from every side.”

Praise

Before we “attack” (in a respectful and constructive manner), it is imperative that we first heap praise on the authors, for the following reasons:

- The experiments involved over 1000 participants. Any critique needs to be mindful of the tremendous effort that went into data collection.

- The experiments were preregistered, and the preregistration forms have been made available on the OSF. This is still not the norm, and the authors are definitely raising the bar for research in their field.

- The submitted manuscript has been made available as a preprint, informing other researchers and allowing them to provide suggestions for improvement.

Overall, then, the authors ought to be congratulated, not just on the effort they invested, but also on the level of transparency with which the work has been conducted. But a strong anvil need not fear the hammer, and it is in this spirit that we offer the following constructive critique.

Two Pitfalls

On a first reading we focused on the key statistical result. As the authors mention:

“The key prediction was a main effect of prior self-control, such that participants who exert self-control at Time 1 would perform worse at Time 2.”

For instance, when analyzing the results from Experiment 1, the authors report:

“Additionally, we found the predicted main effect of writing condition, F(1, 653) = 4.84, p = .028, ηp2 = .007, d = 0.15, such that participants made errors at a higher rate in the controlled writing condition (M = 0.064, SD = 0.046) compared to the free writing condition (M = 0.057, SD = 0.040).”

So here we have a p-value of .028 — relatively close to the .05 boundary. In addition, the sample size is relatively large, which means that the effect size is modest. Can this really be compelling evidence? We were ready to start our Bayesian reanalysis (which we will now present in next week’s post) but then we stumbled upon a description of the study on Research Digest from the British Psychological Society. At some point, this article mentions:

“There is a complication with the second study. When the researchers removed outlier participants, as they said they would in their preregistered plans (for instance because participants were particularly slow or fast to respond, or made a particularly large number of mistakes), then there was no longer a significant difference in performance on the Attention Network Test between participants who’d performed the easy or difficult version of the writing task.”

This off-hand comment prompted us to inspect the preregistration forms on the Open Science Framework. The form for Experiment 1 is here and the one for Experiment 2 is here. These forms reveal two key concerns.

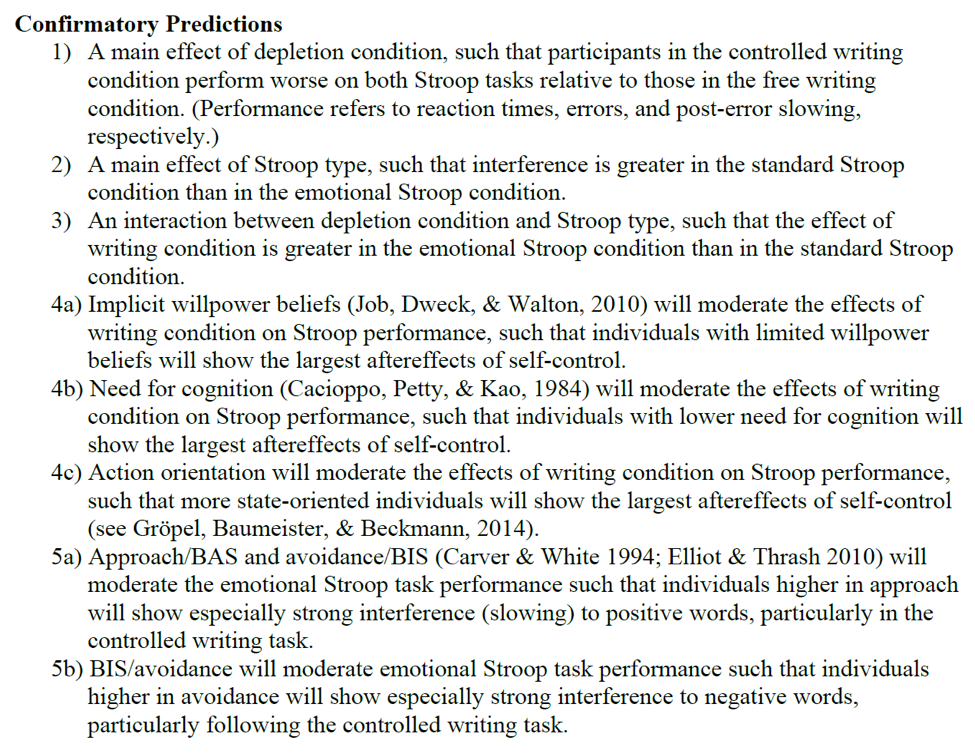

- The first concern is that the authors preregistered several predictions. For the Stroop task (Experiment 1), for instance, a total of eight specific predictions were listed:

When several of the failed predictions are cast aside (i.e., presented in an online supplement), and all of the “significant” results are highlighted (i.e., presented in the main text), this can create a warped impression of the total evidence. Concretely, when eight predictions have been preregistered (and each is tested with three dependent measures, see below), and the results yield one significant p-value at p = .028, this may not do much to convince a skeptic.

Admittedly, from context one may deduce that the main test was the first one that is listed, but this is not stated in the preregistration protocol. And even for the first test, the authors mention that “performance” is assessed using three dependent measures (i.e., reaction times, errors, and post-error slowing). This effectively gives the phenomenon three shots at the statistical bullseye, and some statistical correction may be appropriate, at least for analyses conducted within the frequentist paradigm.

- The second concern is that, as asserted in Research Digest, the analysis plan was not followed to the letter, and the current draft of the manuscript fails to signpost this appropriately (although it does contain some hints). Specifically, the preregistration plans articulate a number of reasonable exclusion criteria. In the manuscript itself, however, the main analyses are always presented with and without application of the exclusion criteria — in fact, the analysis that was not preregistered is always presented first. For Experiment 2, the exclusion criteria matter: with the preregistered exclusion criteria in place, there appears to be little trace of the critical effect:

“When we excluded participants based on the specified criteria, the effects of writing condition changed from the findings reported above. Specifically, the main effect of writing task on error rates became non-significant, F(1, 335) = 1.42, p = .235, ηp2 = .004, d = 0.14”

What Now?

The second concern is easy to fix. In our opinion, the results without outlier exclusion (i.e., the analysis that was not preregistered) should be presented in the section “exploratory analyses”, and not in the section that precedes it. After all, the very purpose of preregistration is to prevent the kind of hindsight biases that can drive researchers to make post-hoc decisions to bring the data better in line with expectations.

The first concern deals with multiplicity, and is not as easy to fix. We believe that at least some frequentist statisticians would argue that the eight tests constitute a single family, and that a correction for multiplicity is in order. And it appears to us that most frequentist statisticians would agree that the three key tests on performance (for reaction times, error rates, and post-error slowing) are definitely part of a single family. At any rate, it should be made clear to the reader that only one out of several tests and predictions yielded a significant result at the .05 level. Specifically, the main text should report the outcome for all preregistered (confirmatory) tests. By relegating some of the non-significant findings to an online supplement, the authors unwittingly provide a skewed impression of the evidence. Note that for each prediction, a single sentence would suffice: “Prediction X was not corroborated by the data, F(x,y) = z, p > .1 (see online supplements for details)”.

Take-Home Messages

- When preregistering an analysis plan, it is important to be mindful of multiplicity. This is a conceptually challenging matter, and in earlier research one of us [EJ] has also preregistered relatively many predictions and hypotheses. For frequentists, what matters is whether the set of predictions constitutes a single family. For Bayesians, what matters is the prior plausibility of the hypotheses under test. One may not, however, mix the paradigms and adopt the Bayesian mindset while executing the frequentist analysis.

- The preregistration protocol must be followed religiously. Analyses that deviate from the protocol must be clearly labeled as such. We recommend that all (!) confirmatory analyses are presented in a section in the main text, “confirmatory analyses”; exploratory analyses should be presented in a section “exploratory analyses”.

- Whenever it becomes clear that protocols have not been followed to the letter (e.g., outcome switching has occurred), this does not necessarily indicate a weakness of preregistration. In fact, it underscores its strength, for without the preregistration document there would be no way to learn of the extent to which the authors adhered to the protocol.

- A registered report (Chris Chambers’ proposal, see https://cos.io/rr/) also features preregistration, but, critically, it also includes external referees who first review the preregistered analysis plan and then check it against the reported outcomes. This reduces the possibility of outcome switching.

- As preregistration becomes more popular, we expect the field to struggle for a while before getting to grips with the new procedure.

To conclude, the authors of the ego-depletion paper are to be congratulated on their work, but the transparency of the resulting manuscript can be improved further.

Like this post?

Subscribe to the JASP newsletter to receive regular updates about JASP including the latest Bayesian Spectacles blog posts! You can unsubscribe at any time.

References

Garrison, K. E., Finley, A. J., & Schmeichel, B. J. (2017). Ego depletion reduces attentional control: Evidence from two high-powered preregistered experiments. Manuscript submitted for publication. URL: https://psyarxiv.com/pgny3/.

About The Authors

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.

Quentin Gronau

Quentin is a PhD candidate at the Psychological Methods Group of the University of Amsterdam.