This post is an extended synopsis of van Doorn, J., van den Bergh, D., Dablander, F., Derks, K., van Dongen, N.N.N., Evans, N. J., Gronau, Q. F., Haaf, J.M., Kunisato, Y., Ly, A., Marsman, M., Sarafoglou, A., Stefan, A., & Wagenmakers, E.‐J. (2021), Strong public claims may not reflect researchers’ private convictions. Significance, 18, 44-45. https://doi.org/10.1111/1740-9713.01493. Preprint available on PsyArXiv: https://psyarxiv.com/pc4ad

Abstract

How confident are researchers in their own claims? Augustus De Morgan (1847/2003) suggested that researchers may initially present their conclusions modestly, but afterwards use them as if they were a “moral certainty”. To prevent this from happening, De Morgan proposed that whenever researchers make a claim, they accompany it with a number that reflects their degree of confidence (Goodman, 2018). Current reporting procedures in academia, however, usually present claims without the authors’ assessment of confidence.

Questionnaire

Here we report the partial results from an anonymous questionnaire on the concept of evidence that we sent to 162 corresponding authors of research articles and letters published in Nature Human Behaviour (NHB). We received 31 complete responses (response rate: 19%). A complete overview of the questionnaire can be found in online Appendices B, C, and D. As part of the questionnaire, we asked respondents two questions about the claim in the title of their NHB article: “In your opinion, how plausible was the claim before you saw the data?” and “In your opinion, how plausible was the claim after you saw the data?”. Respondents answered by manipulating a sliding bar that ranged from 0 (i.e., “you know the claim is false”) to 100 (i.e., “you know the claim is true”), with an initial value of 50 (i.e., “you believe the claim is equally likely to be true or false”).

Results

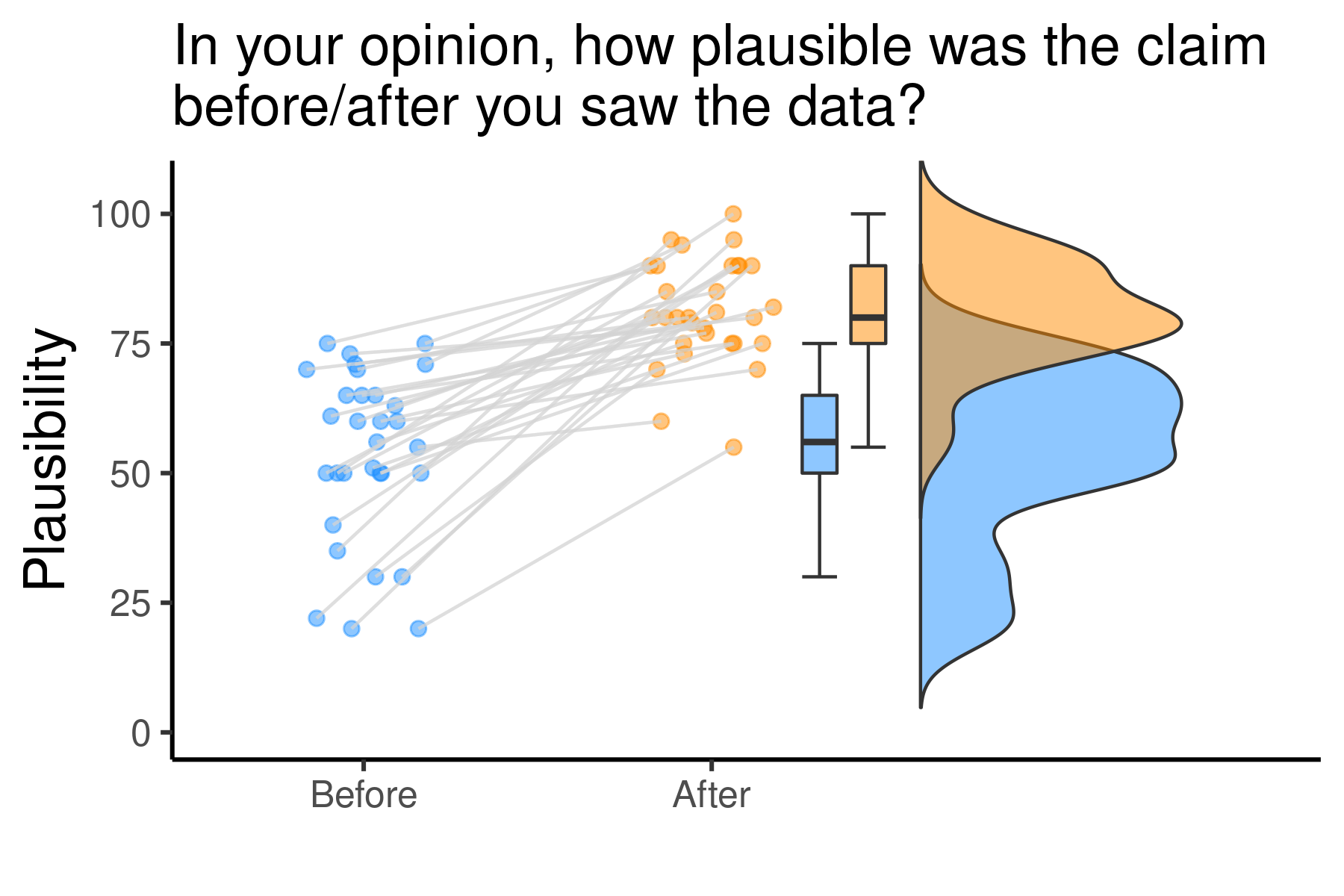

Figure 1 shows the responses to both questions. The blue dots quantify the assessment of prior plausibility. The highest prior plausibility is 75, and the lowest is 20, indicating that (albeit with the benefit of hindsight) the respondents did not set out to study claims that they believed to be either outlandish or trivial. Compared to the heterogeneity in the topics covered, this range of prior plausibility is relatively narrow.

From the ratio of posterior odds to prior odds we can derive the Bayes factor, that is, the extent to which the data changed researchers’ conviction. The median of this informal Bayes factor is 3, corresponding to the interpretation that the data are 3 times more likely to have occurred under the hypothesis that the claim is true than under the hypothesis that the claim is false.
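To make the arithmetic concrete, here is a minimal Python sketch of how an informal Bayes factor follows from two slider responses: each plausibility is converted to odds, and the Bayes factor is the posterior odds divided by the prior odds. The function names are hypothetical and chosen for illustration only. The input values are the median plausibilities reported for Figure 1 (56 before, 80 after), not any individual respondent's answers. Note that this is the Bayes factor implied by the two medians, which need not equal the median of the 31 individual Bayes factors.

```python
def odds(plausibility: float) -> float:
    """Convert a plausibility on the 0-100 slider scale to odds in favour of the claim."""
    if not 0 < plausibility < 100:
        # At exactly 0 or 100 the odds are zero or undefined, so no finite Bayes factor exists.
        raise ValueError("plausibility must lie strictly between 0 and 100")
    p = plausibility / 100
    return p / (1 - p)


def informal_bayes_factor(prior: float, posterior: float) -> float:
    """Posterior odds divided by prior odds (illustrative helper, not the authors' code)."""
    return odds(posterior) / odds(prior)


# Median plausibilities from the Figure 1 caption, used here purely as an illustration.
bf = informal_bayes_factor(prior=56, posterior=80)
print(round(bf, 2))  # 3.14
```

With these inputs the prior odds are 56/44 (about 1.27) and the posterior odds are 80/20 = 4, giving a Bayes factor of about 3.14, in line with the reported median of 3.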

Figure 1. All 31 respondents indicated that the data made the claim in the title of their NHB article more likely than it was before. However, the size of the increase is modest. Before seeing the data, the plausibility centers around 50 (median = 56); after seeing the data, the plausibility centers around 75 (median = 80). The gray lines connect the responses for each respondent.

Concluding Comments

The authors’ modesty appears excessive. It is not reflected in the declarative title of their NHB articles, and it could not reasonably have been gleaned from the content of the articles themselves. Empirical disciplines do not ask authors to express their confidence in their claims, even though this could be relatively simple. For instance, journals could ask authors to estimate the prior/posterior plausibility, or the probability of a replication yielding a similar result (e.g., (non)significance at the same alpha level and sample size), for each claim or hypothesis under consideration, and present the results on the first page of the article. When an author publishes a strong claim in a top-tier journal such as NHB, one may expect this author to be relatively confident. While the current academic landscape does not allow authors to express their uncertainty publicly, our results suggest that they may well be aware of it. Encouraging authors to express this uncertainty openly may lead to more honest and nuanced scientific communication (Kousta, 2020).

References

De Morgan, A. (1847/2003). Formal Logic: The Calculus of Inference, Necessary and Probable. Honolulu: University Press of the Pacific.

Goodman, S. N. (2018). How sure are you of your result? Put a number on it. Nature, 564, 7.

Kousta, S. (Ed.). (2020). Editorial: Tell it like it is. Nature Human Behaviour, 4, 1.

About The Authors

Johnny van Doorn

Johnny van Doorn is a PhD candidate at the Psychological Methods department of the University of Amsterdam.

- See also our earlier blog post on this topic: https://www.bayesianspectacles.org/a-171-year-old-suggestion-to-promote-open-science/