This post is an extended synopsis of Hoogeveen, S., Sarafoglou, A., & Wagenmakers, E.-J. (2019). Laypeople Can Predict Which Social Science Studies Replicate. Preprint available on PsyArXiv: https://psyarxiv.com/egw9d.

Abstract

Large-scale collaborative projects recently demonstrated that several key findings from the social science literature could not be replicated successfully. Here we assess the extent to which a finding’s replication success relates to its intuitive plausibility. Each of 27 high-profile social science findings was evaluated by 233 people without a PhD in psychology. Results showed that these laypeople predicted replication success with above-chance performance (i.e., 58%). In addition, when laypeople were informed about the strength of evidence from the original studies, this boosted their prediction performance to 67%. We discuss the prediction patterns and apply signal detection theory to disentangle detection ability from response bias. Our study suggests that laypeople’s predictions contain useful information for assessing the probability that a given finding will replicate successfully.
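To make the signal detection idea concrete, here is a minimal sketch of the textbook equal-variance Gaussian measures: sensitivity (d') for detection ability and criterion (c) for response bias. It assumes that a "hit" is a 'replicates' prediction for a study that did replicate and a "false alarm" is a 'replicates' prediction for a study that did not. The function name, the log-linear correction, and the example counts are illustrative assumptions; the analysis in the paper may use a different model.

```python
from statistics import NormalDist


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) under the equal-variance Gaussian model.

    Hypothetical helper for illustration, not the authors' analysis code.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF

    # Log-linear correction (an assumption) keeps the rates away from 0 and 1,
    # where the z-transform would be undefined.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)

    d_prime = z(hit_rate) - z(fa_rate)             # detection ability
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))  # response bias
    return d_prime, criterion


# Made-up responses from one participant: 14 studies that replicated
# (10 hits, 4 misses) and 13 that did not (5 false alarms, 8 correct rejections).
d_prime, criterion = sdt_measures(10, 4, 5, 8)
print(f"d' = {d_prime:.2f}, c = {criterion:.2f}")
```

In this parameterization, a positive d' means the participant tells replicable from non-replicable findings better than chance, and a negative c means a general tendency to answer 'replicates' regardless of the study.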

In the experiment, participants (N = 233) were presented with 27 studies, a subset of the studies included in the Social Sciences Replication Project (SSRP; Camerer et al., 2018) and the Many Labs 2 Project (ML2; Klein et al., 2018). For each study, participants read a short description of the research question, its operationalization, and the key finding. The descriptions were inspired by those provided in SSRP and ML2, but rephrased to make them comprehensible for laypeople. For each of the 27 studies, all participants first answered a dichotomous item about estimated replicability (yes / no) and then indicated the confidence in their choice on a 0-100 scale.

For example, consider the description of the research hypothesis by Anderson, Kraus, Galinsky, & Keltner (2012): “Do social comparisons influence people’s well-being? Participants read a description of a person and were instructed to think about similarities and differences between them. In one group, the person was described as respected and liked by all their social groups. In the other group, the person was described as not respected and liked in any of their social groups. Afterwards, participants answered questions about their well-being. If participants compared themselves with a highly respected and liked person, participants reported lower well-being.”

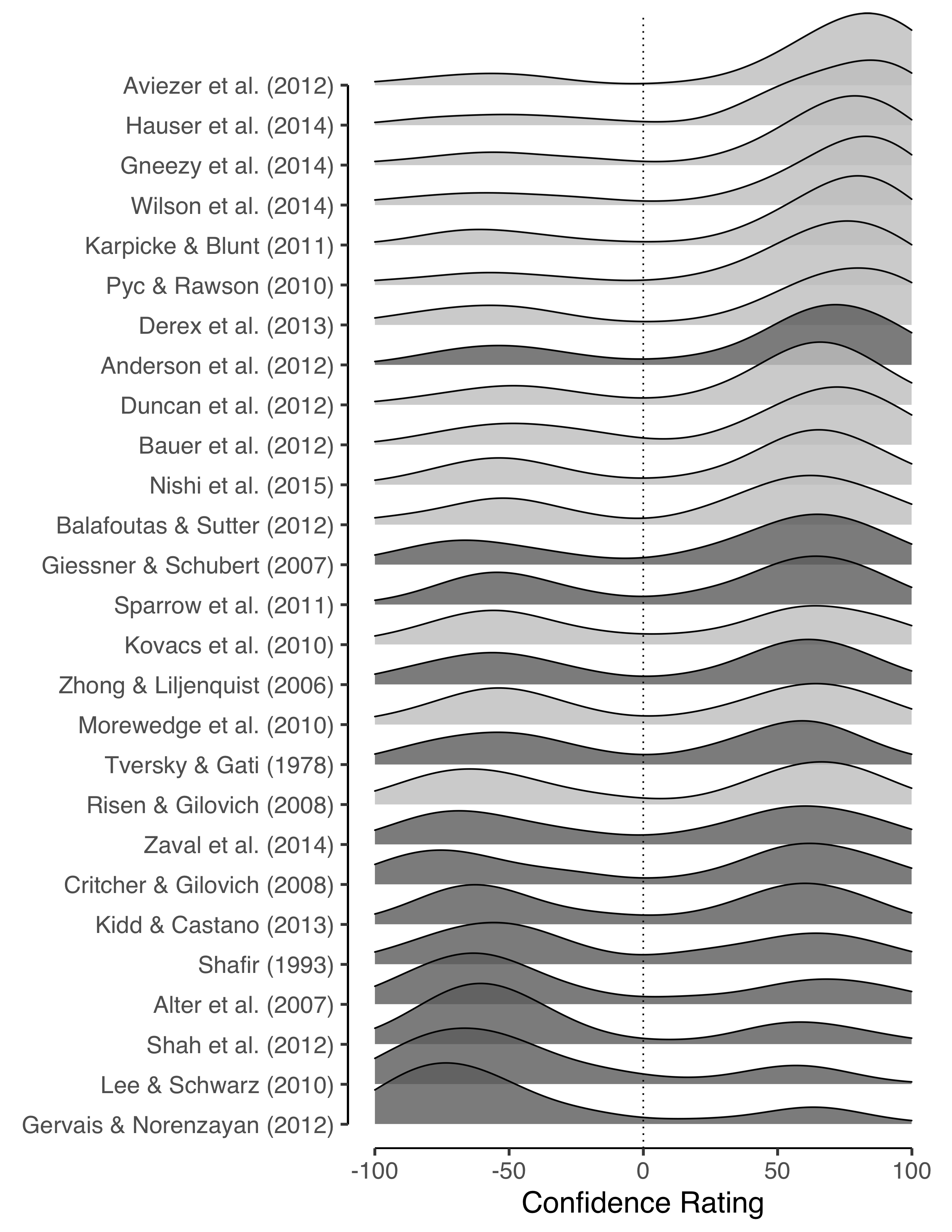

Figure 1 displays participants’ confidence ratings concerning the replicability of each of the 27 included studies, ordered according to the averaged confidence score. Positive ratings reflect confidence in replicability, and negative ratings reflect confidence in non-replicability, with -100 denoting extreme confidence that the effect would fail to replicate. Note that these data are aggregated across the Description Only and the Description Plus Evidence conditions. The top ten rows show studies for which laypeople largely agreed that the effect would replicate. Out of these ten studies, nine replicated and only one did not (i.e., the previously mentioned study by Anderson et al., 2012; note that light grey indicates a successful replication, and dark grey indicates a failed replication). The bottom four rows show studies for which laypeople largely agreed that the effect would fail to replicate. Consistent with laypeople’s predictions, none of these four studies replicated. For the remaining 13 studies in the middle rows, the group response was relatively ambiguous, as reflected by a bimodal density that is roughly equally distributed between the negative and positive ends of the scale. Out of these 13 studies, five replicated and eight failed to replicate. Overall, Figure 1 provides a compelling demonstration that laypeople are able to predict whether or not high-profile social science findings will replicate successfully.
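The signed scale described above can be sketched in a few lines. The snippet combines each yes/no prediction with its 0-100 confidence into a rating between -100 and 100, then averages the ratings for one study to obtain a group prediction. The function names and the example responses are hypothetical, and reading a positive mean as a 'replicates' group prediction is an assumption made for illustration.

```python
from statistics import mean


def signed_confidence(predicts_replication, confidence):
    """Map a yes/no prediction plus 0-100 confidence onto a -100..100 scale."""
    if not 0 <= confidence <= 100:
        raise ValueError("confidence must be between 0 and 100")
    return confidence if predicts_replication else -confidence


def group_prediction(ratings):
    """Return the mean signed rating and whether it points to 'replicates'."""
    avg = mean(ratings)
    return avg, avg > 0


# Made-up responses for a single study: (predicts replication?, confidence)
responses = [(True, 80), (True, 60), (False, 30), (True, 90)]
ratings = [signed_confidence(p, c) for p, c in responses]
avg, predicted = group_prediction(ratings)
print(ratings, avg, predicted)
```

Comparing `predicted` with the actual replication outcome for each study would then give the accuracy of the group-level predictions.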

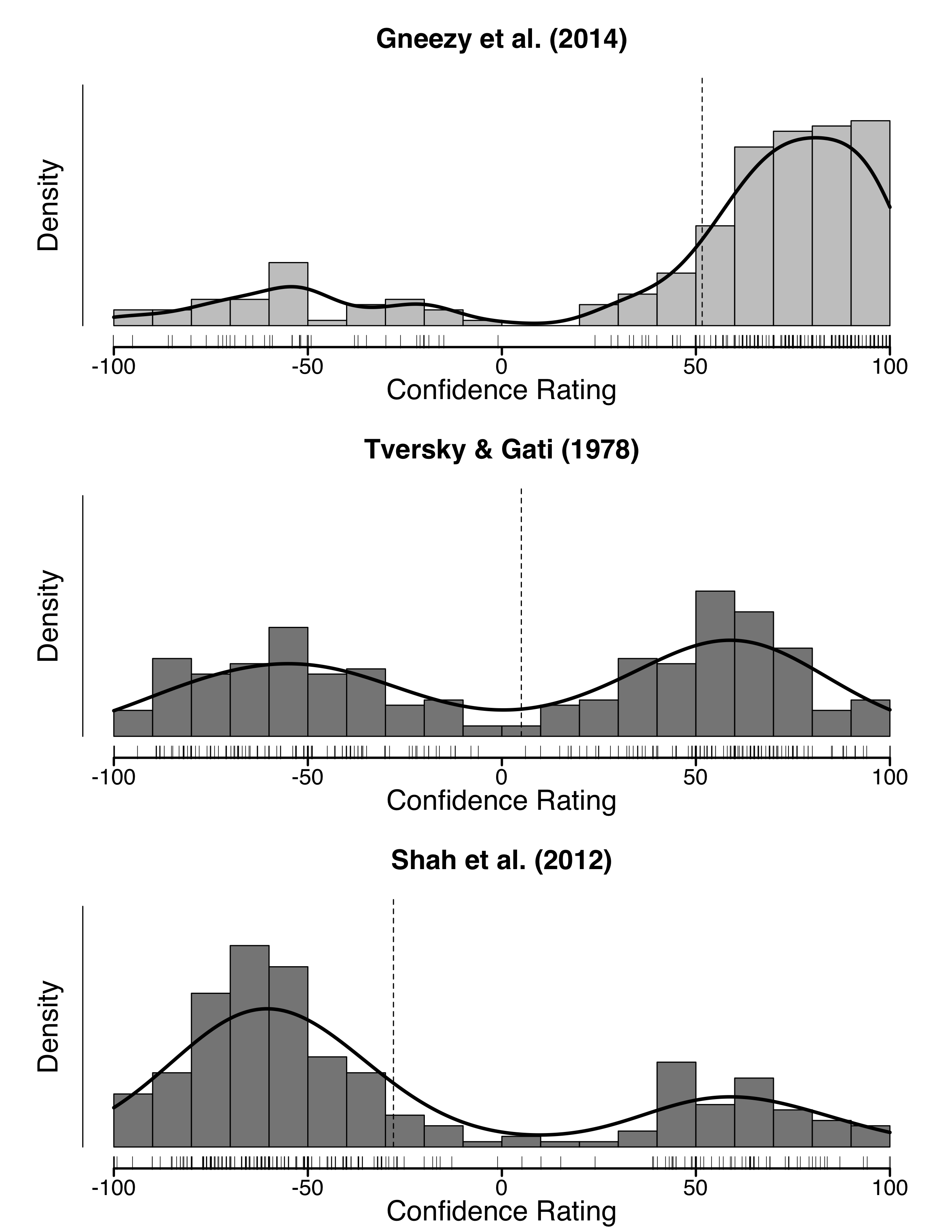

Figure 2 provides a more detailed account of the data for three selected studies. For the study in the top panel (i.e., Gneezy, Keenan, & Gneezy, 2014), most laypeople correctly predicted that the effect would successfully replicate; for the study in the middle panel (i.e., Tversky & Gati, 1978), laypeople showed considerable disagreement, with slightly over half of the participants incorrectly predicting that the study would replicate successfully; finally, for the study in the bottom panel (i.e., Shah, Mullainathan, & Shafir, 2012), most laypeople correctly predicted that the effect would fail to replicate.

Figure 1: Laypeople’s near-unanimous judgments are highly predictive of replication outcomes. Light grey density distributions reflect studies that successfully replicated, and dark grey distributions reflect studies that did not replicate. Confidence ratings are aggregated over both experimental conditions. Negative values reflect the ‘does not replicate’ prediction, and positive values the ‘replicates’ prediction.

Figure 2: Histograms of confidence ratings for three studies: one for which laypeople were nearly unanimous that it would replicate (Gneezy et al., 2014, top panel), one for which their predictions were split (Tversky & Gati, 1978, middle panel), and one for which they were nearly unanimous that it would not replicate (Shah et al., 2012, bottom panel). The vertical dotted line shows the average confidence rating for the respective study (i.e., the group prediction).

References

Anderson, C., Kraus, M. W., Galinsky, A. D., & Keltner, D. (2012). The local-ladder effect: Social status and subjective well-being. Psychological Science, 23, 764–771.

Camerer, C. F., Dreber, A., Holzmeister, F., Ho, T. H., Huber, J., Johannesson, M., … & Altmejd, A. (2018). Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015. Nature Human Behaviour, 2, 637–644.

Gneezy, U., Keenan, E. A., & Gneezy, A. (2014). Avoiding overhead aversion in charity. Science, 346, 632–635.

Klein, R. A., Vianello, M., Hasselman, F., Adams, B. G., Adams Jr, R. B., Alper, S., … & Batra, R. (2018). Many Labs 2: Investigating variation in replicability across samples and settings. Advances in Methods and Practices in Psychological Science, 1, 443–490.

Shah, A. K., Mullainathan, S., & Shafir, E. (2012). Some consequences of having too little. Science, 338, 682–685.

Tversky, A., & Gati, I. (1978). Studies of similarity. Cognition and Categorization, 1, 79–98.

About The Authors

Suzanne Hoogeveen

Suzanne Hoogeveen is a PhD candidate at the Department of Social Psychology at the University of Amsterdam.

Alexandra Sarafoglou

Alexandra Sarafoglou is a PhD candidate at the Psychological Methods Group at the University of Amsterdam.

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is a professor at the Psychological Methods Group at the University of Amsterdam.