This post is an extended synopsis of Stefan, A. M., Katsimpokis, D., Gronau, Q. F. & Wagenmakers, E.-J. (2021). Expert agreement in prior elicitation and its effects on Bayesian inference. Preprint available on PsyArXiv: https://psyarxiv.com/8xkqd/

Abstract

Bayesian inference requires the specification of prior distributions that quantify the pre-data uncertainty about parameter values. One way to specify prior distributions is through prior elicitation, an interview method guiding field experts through the process of expressing their knowledge in the form of a probability distribution. However, prior distributions elicited from experts can be subject to idiosyncrasies of experts and elicitation procedures, raising the spectre of subjectivity and prejudice. In a new preprint, we investigate the effect of interpersonal variation in elicited prior distributions on the Bayes factor hypothesis test. We elicited prior distributions from six academic experts with a background in different fields of psychology and applied the elicited prior distributions as well as commonly used default priors in a re-analysis of 1710 studies in psychology. The degree to which the Bayes factors vary as a function of the different prior distributions is quantified by three measures of concordance of evidence: We assess whether the prior distributions change the Bayes factor direction, whether they cause a switch in the category of evidence strength, and how much influence they have on the value of the Bayes factor. Our results show that although the Bayes factor is sensitive to changes in the prior distribution, these changes rarely affect the qualitative conclusions of a hypothesis test. We hope that these results help researchers gauge the influence of interpersonal variation in elicited prior distributions in future psychological studies. Additionally, our sensitivity analyses can be used as a template for Bayesian robustness analyses that involve prior elicitation from multiple experts.

Different experts – different priors?

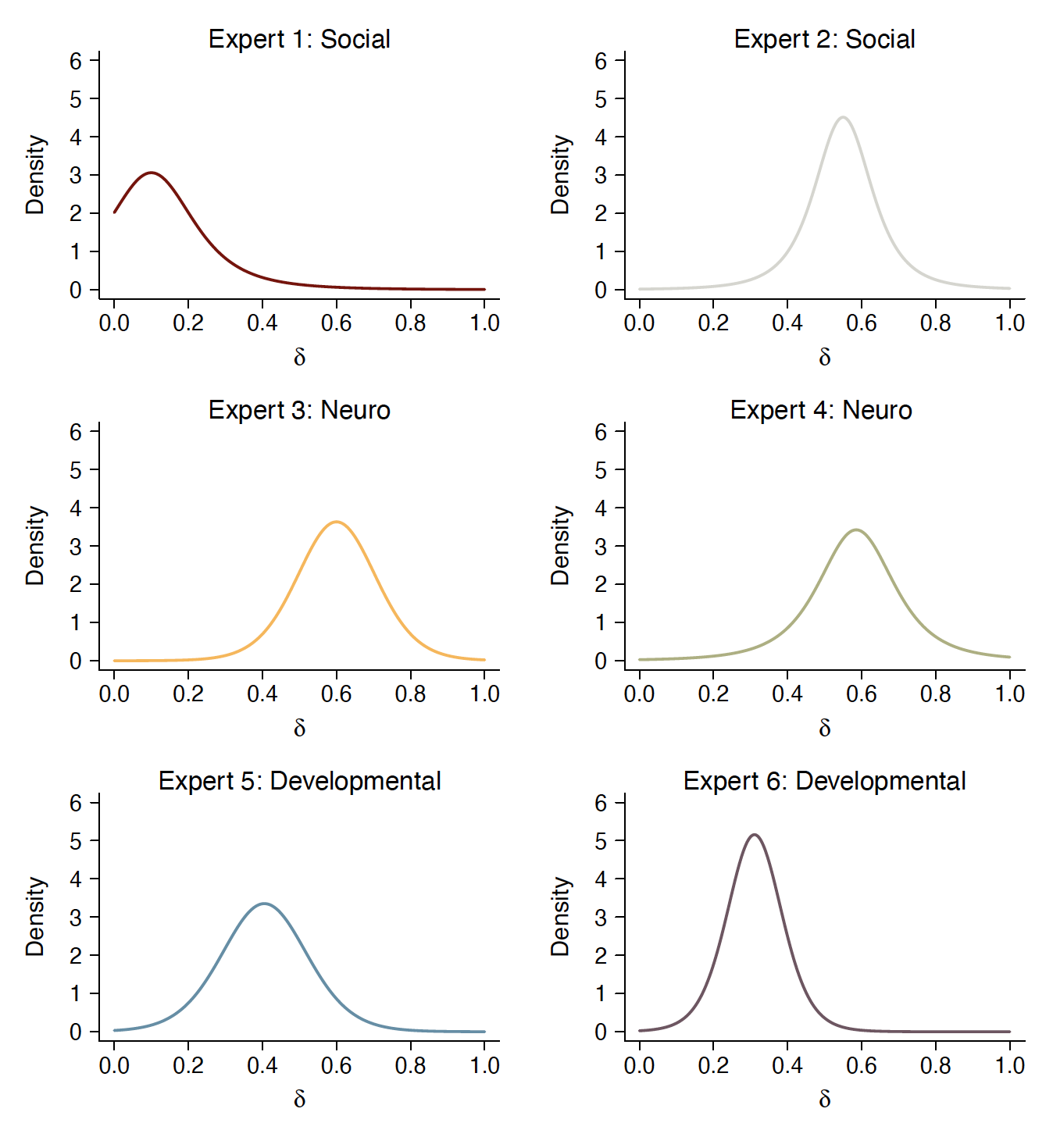

The goal of a prior elicitation effort is to formulate a probability distribution that represents the subjective knowledge of an expert. This probability distribution can then be used as a prior distribution on parameters in a Bayesian model. Parameter values the expert deems plausible receive a higher probability density; parameter values the expert deems implausible receive a lower probability density. Of course, most of us know from personal experience that experts can differ in their opinions. But to what extent will these differences influence elicited prior distributions? Here, we asked six experts from different fields in psychology about plausible values for small-to-medium effect sizes in their field. Below, you can see the elicited prior distributions for Cohen’s d for all experts alongside their respective fields of research.

As can be expected, no two elicited distributions are exactly alike. However, the prior distributions, especially those of Experts 2 to 5, are remarkably similar. Expert 1 deviated from the other experts in that they expected substantially lower effect sizes. Expert 6 displayed less uncertainty than the other experts.

Different priors – different hypothesis testing results?

After eliciting prior distributions from experts, the next question we ask is: To what extent do differences in priors influence the results of Bayesian hypothesis testing? In other words, how sensitive is the Bayes factor to interpersonal variation in the prior? This question addresses a frequently voiced concern about Bayesian methods: Results of Bayesian analyses could be influenced by arbitrary features of the prior distribution.

To investigate the sensitivity of the Bayes factor to the interpersonal variation in elicited priors, we applied the elicited prior distributions to a large number of re-analyses of studies in psychology. Specifically, for elicited priors on Cohen’s d, we re-analyzed t-tests from a database assembled by Wetzels et al. (2011) that contains 855 t-tests from the journals Psychonomic Bulletin & Review and the Journal of Experimental Psychology: Learning, Memory, and Cognition. In each test, we used one of the elicited priors as the prior distribution on Cohen’s d in the alternative model.
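To make the idea of "re-analyzing the same test under different priors" concrete, here is a minimal sketch. It is not the procedure used in the preprint: it assumes a normal approximation to the likelihood of the observed Cohen's d and a normal prior on the effect size, chosen purely because the Bayes factor then has a closed form. The function names and the two example priors are hypothetical and are not the elicited priors.

```python
import math


def normal_pdf(x, mean, sd):
    """Density of a normal distribution with the given mean and standard deviation."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def approx_bf10(d_obs, n1, n2, prior_mean, prior_sd):
    """Approximate BF10 for a two-sample comparison.

    Assumptions (for illustration only):
    - the observed effect size d_obs is Normal(delta, se^2),
    - H1 places a Normal(prior_mean, prior_sd^2) prior on delta,
    - H0 fixes delta at 0.
    """
    # Large-sample standard error of Cohen's d (the small d^2 term is ignored).
    se = math.sqrt(1.0 / n1 + 1.0 / n2)
    # Under H1 the prior is integrated out, which widens the predictive distribution.
    marginal_h1 = normal_pdf(d_obs, prior_mean, math.sqrt(se**2 + prior_sd**2))
    marginal_h0 = normal_pdf(d_obs, 0.0, se)
    return marginal_h1 / marginal_h0


# Hypothetical priors as (mean, sd); these are NOT the priors elicited in the preprint.
hypothetical_priors = {"expert_a": (0.20, 0.10), "expert_b": (0.40, 0.20)}

for name, (mean, sd) in hypothetical_priors.items():
    bf = approx_bf10(d_obs=0.5, n1=40, n2=40, prior_mean=mean, prior_sd=sd)
    print(name, round(bf, 2))
```

Running the same data through each prior in turn yields one Bayes factor per prior, which is the raw material for the sensitivity criteria discussed next.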

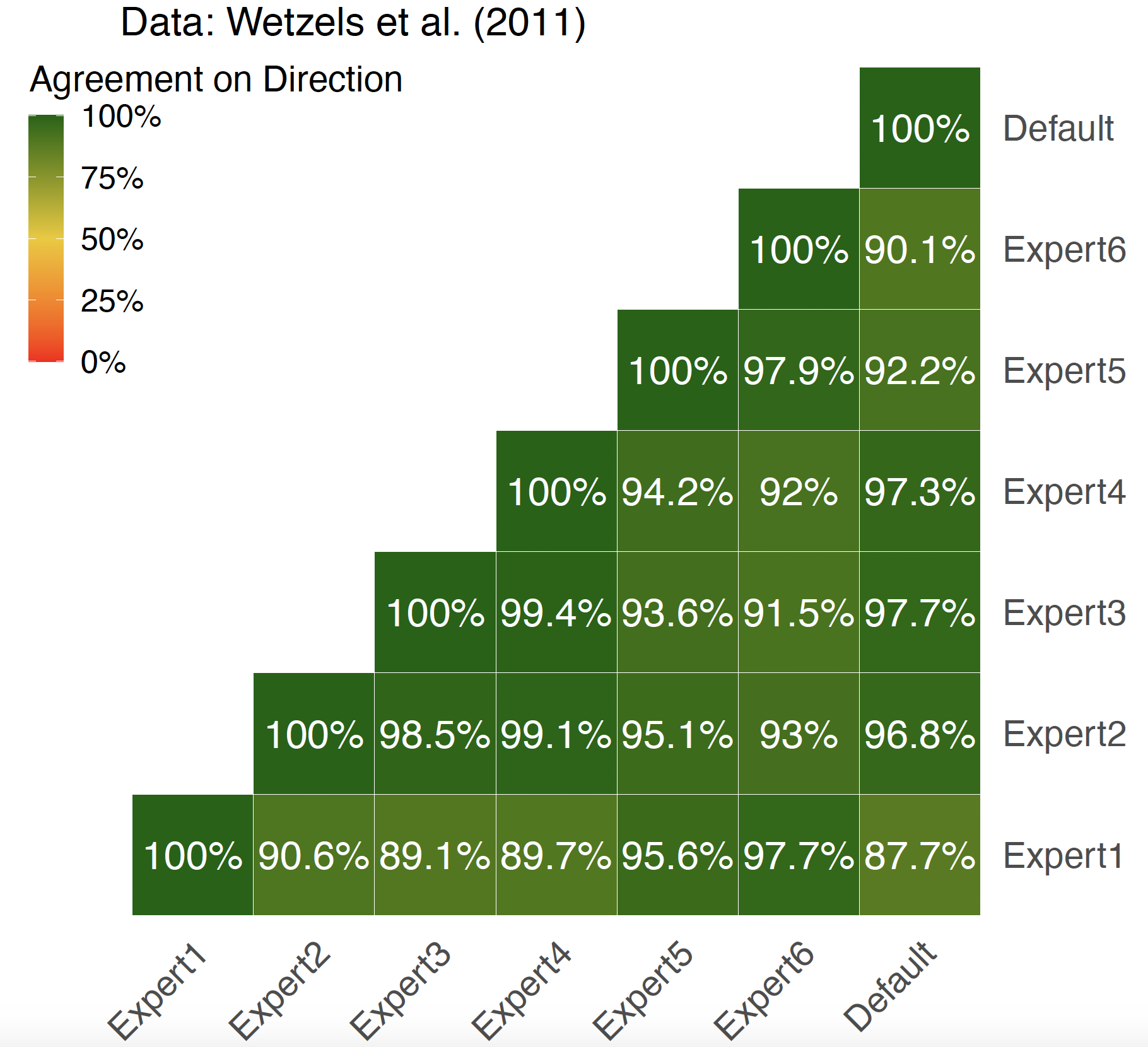

What does it mean if a Bayes factor is sensitive to the prior? Here, we used three criteria: First, we checked for all combinations of prior distributions how often a change in priors led to a change in the direction of the Bayes factor. We recorded a change in direction if the Bayes factor showed evidence for the null model (i.e., BF10 < 1) for one prior and evidence for the alternative model (i.e., BF10 > 1) for a different prior. Conversely, we recorded agreement if both Bayes factors were larger than one or both were smaller than one. As can be seen below, agreement rates were generally high for all combinations of prior distributions.

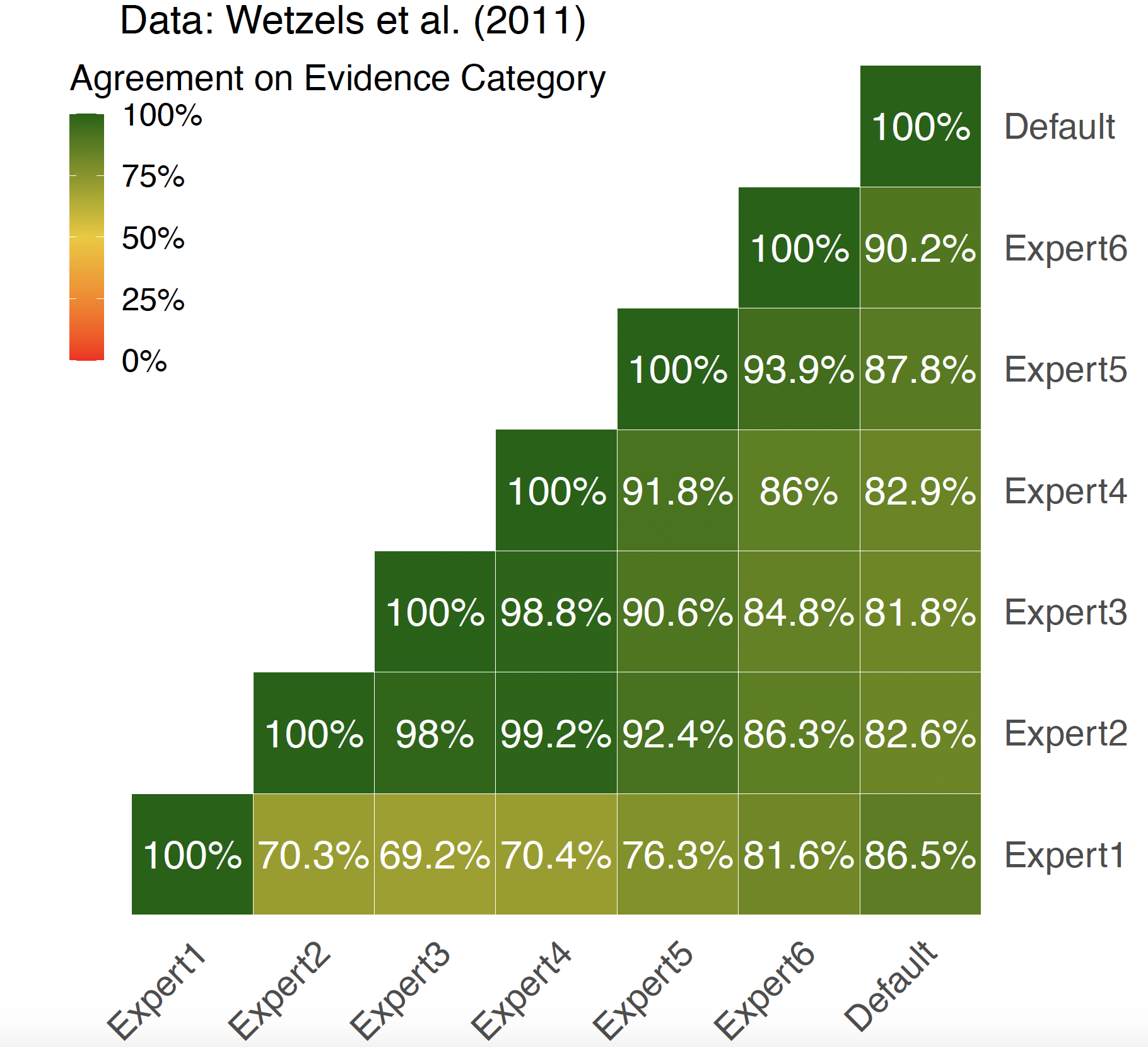
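The direction criterion described above can be sketched in a few lines. The helper names and the Bayes factor values are made up for illustration; the sketch also assumes that a Bayes factor of exactly 1 counts as disagreement, a boundary case the text does not address.

```python
from itertools import combinations


def same_direction(bf_a, bf_b):
    """True if both BF10 values favor the same model (both > 1 or both < 1)."""
    return (bf_a > 1 and bf_b > 1) or (bf_a < 1 and bf_b < 1)


def direction_agreement(bf_by_prior):
    """Proportion of tests with matching Bayes factor direction, per pair of priors.

    bf_by_prior maps a prior label to a list of BF10 values, one per test,
    with the tests in the same order for every prior.
    """
    rates = {}
    for a, b in combinations(sorted(bf_by_prior), 2):
        pairs = list(zip(bf_by_prior[a], bf_by_prior[b]))
        agree = sum(same_direction(x, y) for x, y in pairs)
        rates[(a, b)] = agree / len(pairs)
    return rates


# Made-up BF10 values for four tests under three hypothetical priors.
made_up = {
    "prior_1": [0.4, 3.2, 15.0, 0.9],
    "prior_2": [0.6, 2.1, 40.0, 1.3],
    "prior_3": [0.3, 0.8, 22.0, 0.7],
}
print(direction_agreement(made_up))
```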

As a second sensitivity criterion, we recorded changes in the evidence category of the Bayes factor. Often, researchers are interested in whether a hypothesis test provides strong evidence in favor of the alternative hypothesis (e.g., BF10 > 10), strong evidence in favor of the null hypothesis (e.g., BF10 < 1/10), or inconclusive evidence (e.g., 1/10 < BF10 < 10). Thus, they classify the Bayes factor as belonging to one of three evidence categories. We recorded whether different priors led to a change in these evidence categories, that is, whether one Bayes factor would be classified as strong evidence, while a Bayes factor using a different prior would be classified as inconclusive evidence or strong evidence in favor of the other hypothesis. From the figure below, we can see that overall the agreement of Bayes factors with regard to evidence category is slightly lower than the agreement with regard to direction. However, this can be expected since evaluating agreement across two cut-points will generally result in lower agreement than evaluating agreement across a single cut-point.

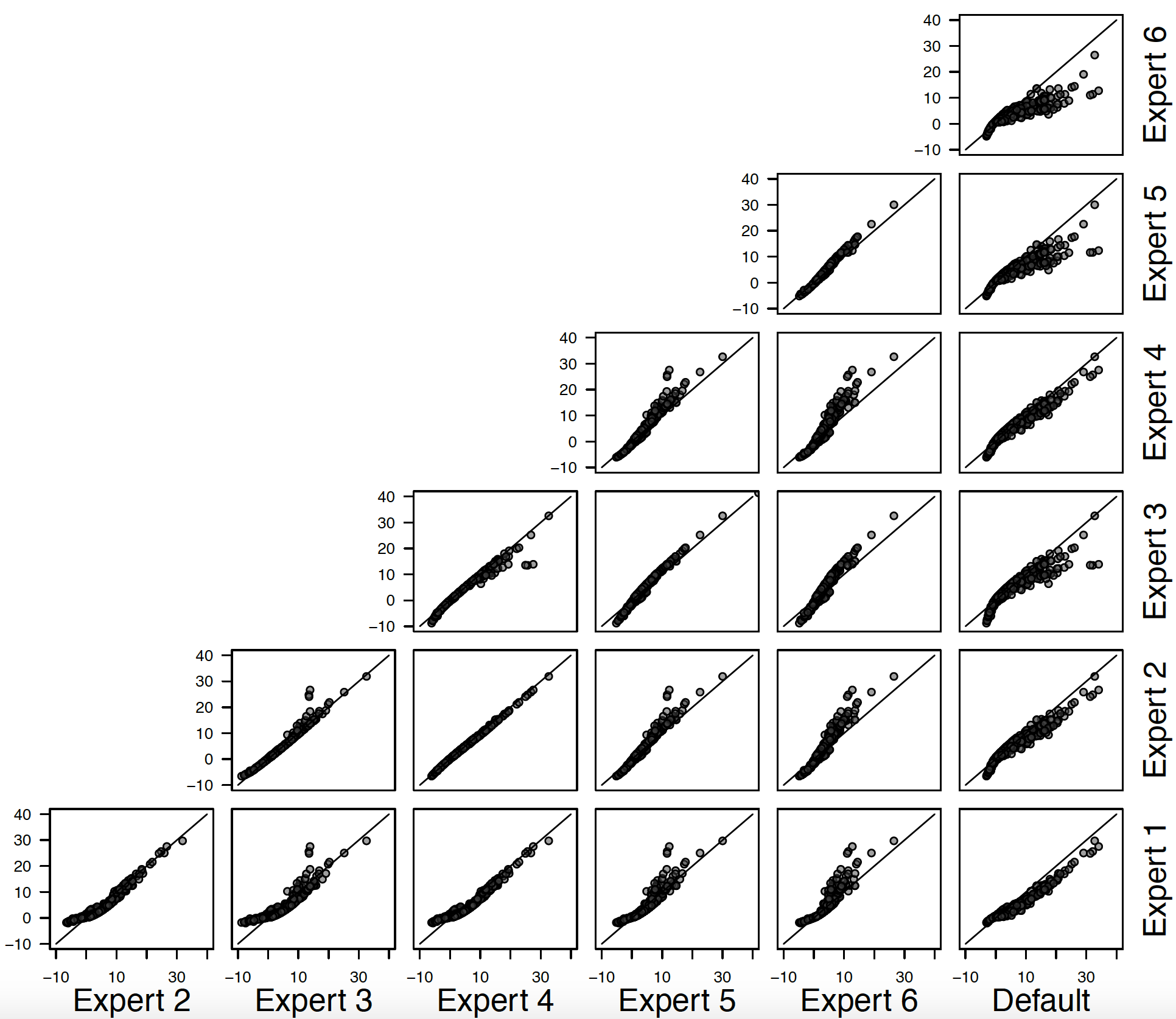
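The evidence-category criterion can be sketched in the same way, using the cut-points of 10 and 1/10 mentioned above. The function names and example values are hypothetical, and the sketch assumes that values falling exactly on a cut-point are classified as inconclusive.

```python
def evidence_category(bf10, threshold=10.0):
    """Classify BF10 into one of three evidence categories."""
    if bf10 > threshold:
        return "strong_h1"
    if bf10 < 1.0 / threshold:
        return "strong_h0"
    return "inconclusive"


def category_agreement(bfs_a, bfs_b, threshold=10.0):
    """Proportion of tests for which two priors yield the same evidence category."""
    matches = [
        evidence_category(x, threshold) == evidence_category(y, threshold)
        for x, y in zip(bfs_a, bfs_b)
    ]
    return sum(matches) / len(matches)


# Made-up BF10 values for four tests under two hypothetical priors.
bfs_prior_a = [12.0, 0.05, 3.0, 8.0]
bfs_prior_b = [25.0, 0.20, 0.5, 11.0]
print(category_agreement(bfs_prior_a, bfs_prior_b))
```

Note that the third pair (3.0 and 0.5) differs in direction but still agrees in category, since both values are inconclusive. The two criteria therefore capture different aspects of sensitivity.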

As a third aspect of Bayes factor sensitivity we investigated changes in the exact Bayes factor value. The figure below shows the correspondence of log Bayes factors for all experts and all tests in the Wetzels et al. (2011) database. What becomes clear is that Bayes factors are not always larger or smaller for one prior distribution compared to another, but that the relation differs per study. In fact, the effect size in the sample determines which prior distribution yields the highest Bayes factor in a study. Sample size has an additional effect, with larger sample sizes leading to more pronounced differences between Bayes factors for different prior distributions.
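One way to see why the sample effect size decides which prior yields the larger Bayes factor is to write the Bayes factor as a ratio of marginal likelihoods. The notation here is an assumption made for illustration and is not taken from the preprint: $\delta$ denotes Cohen's d, $y$ the data, and $\pi_A$ and $\pi_B$ the priors of two experts under the alternative model. Nuisance parameters such as the variance are suppressed.

$$
\mathrm{BF}_{10} = \frac{\int p(y \mid \delta)\, \pi(\delta)\, \mathrm{d}\delta}{p(y \mid \delta = 0)},
\qquad
\frac{\mathrm{BF}_{10}^{(A)}}{\mathrm{BF}_{10}^{(B)}} = \frac{\int p(y \mid \delta)\, \pi_A(\delta)\, \mathrm{d}\delta}{\int p(y \mid \delta)\, \pi_B(\delta)\, \mathrm{d}\delta}
$$

The null model's likelihood cancels when two priors are compared on the same data, so what remains is a comparison of prior-weighted average likelihoods. The prior that places more mass near the effect sizes supported by the data obtains the larger Bayes factor. With larger samples the likelihood becomes more sharply peaked, so these averages depend more strongly on how much prior mass sits close to the sample effect size.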

Conclusions

The sensitivity of the Bayes factor has often been a subject of discussion in previous research. Our results show that the Bayes factor is sensitive to the interpersonal variability between elicited prior distributions. Even for moderate sample sizes, differences between Bayes factors with different prior distributions can easily range into the thousands. However, our results also indicate that the use of different elicited prior distributions rarely changes the direction of the Bayes factor or the category of evidence strength. Thus, the qualitative conclusions of hypothesis tests in psychology rarely change based on the prior distribution. This insight may increase the support for informed Bayesian inference among researchers who were concerned that the subjectivity of prior distributions might determine the qualitative outcomes of their Bayesian hypothesis tests.

References:

Wetzels, R., Matzke, D., Lee, M. D., Rouder, J. N., Iverson, G. J., & Wagenmakers, E.-J. (2011). Statistical evidence in experimental psychology: An empirical comparison using 855 t tests. Perspectives on Psychological Science, 6(3), 291–298. https://doi.org/10.1177/1745691611406923

Stefan, A. M., Katsimpokis, D., Gronau, Q. F., & Wagenmakers, E.-J. (2021). Expert agreement in prior elicitation and its effects on Bayesian inference. PsyArXiv Preprint. https://doi.org/10.31234/osf.io/8xkqd

About The Authors

Angelika Stefan

Angelika is a PhD candidate at the Psychological Methods Group of the University of Amsterdam.

Dimitris Katsimpokis

Dimitris Katsimpokis is a PhD student at the University of Basel.

Quentin F. Gronau

Quentin is a PhD candidate at the Psychological Methods Group of the University of Amsterdam and a postdoctoral fellow working on stop-signal models for how we cancel and modify movements and on cognitive models for improving the diagnosticity of eyewitness memory choices.

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.