This post is an extended synopsis of Dutilh, G., Sarafoglou, A., & Wagenmakers, E.-J. (in press). Flexible yet fair: Blinding analyses in experimental psychology. Synthese. Preprint available on PsyArXiv: https://psyarxiv.com/h39jt

Abstract

The replicability of findings in experimental psychology can be improved by distinguishing sharply between hypothesis-generating research and hypothesis-testing research. This distinction can be achieved by preregistration, a method that has recently attracted widespread attention. Although preregistration is fair in the sense that it inoculates researchers against hindsight bias and confirmation bias, preregistration does not allow researchers to analyze the data flexibly without the analysis being demoted to exploratory. To alleviate this concern, we discuss how researchers may conduct blinded analyses (MacCoun & Perlmutter, 2015). As with preregistration, blinded analyses break the feedback loop between the analysis plan and analysis outcome, thereby preventing cherry-picking and significance seeking. However, blinded analyses retain the flexibility to account for unexpected peculiarities in the data. We discuss different methods of blinding, offer recommendations for blinding of popular experimental designs, and introduce the design for an online blinding protocol.

Summary

In recent years, large-scale replication studies revealed what some had foreseen (e.g., Ioannidis, 2005): psychological science appears to suffer from a replication rate that is alarmingly low. One of the contributing factors to the low replication rate is that researchers generally do not have to state their data analysis plans beforehand. Consequently, the reported hypothesis tests run the risk of being misleading and unfair: the tests can be informed by the data, and when this happens the tests lose their predictive interpretation, and, with it, their statistical validity (e.g., Chambers, 2017; De Groot, 1956/2014; Feynman, 1998; Gelman & Loken, 2014; Goldacre, 2009; Munafò et al., 2017; Peirce, 1878, 1883; Wagenmakers, Wetzels, Borsboom, van der Maas, & Kievit, 2012). In other words, researchers may implicitly or explicitly engage in cherry-picking and significance seeking.

Lack of flexibility in preregistration. To break the feedback loop between analysis plan and analysis outcome, and thus prevent hindsight bias from contaminating the conclusions, it has been suggested that researchers should tie their hands and preregister their studies by providing a detailed analysis plan in advance of data collection (e.g., De Groot, 1969). Preregistration is a powerful and increasingly popular method to raise the reliability of empirical results. Nevertheless, the appeal of preregistration is lessened by its lack of flexibility: once an analysis plan has been preregistered, that plan needs to be executed mechanically in order for the test to retain its confirmatory status. The advantage of such a mechanical execution is that it prevents significance seeking; the disadvantage is that it also prevents the selection of statistical models that are appropriate in light of the data. The core problem is that preregistration does not discriminate between “significance seeking” (which is bad) and “using appropriate statistical models to account for unanticipated peculiarities in the data” (which is good). Preregistration paints these adjustments with the same brush, considering both to be data-dependent and hence exploratory.

An alternative to preregistration: blinding analyses. In this article, we argue that in addition to preregistration, blinding of analyses can play a crucial role in improving the replicability and productivity of psychological science (e.g., Heinrich, 2003; MacCoun & Perlmutter, 2015; MacCoun & Perlmutter, 2017). All methods of analysis blinding aim to hide the analysis outcome from the analyst. Only after the analyst has settled upon a definitive analysis plan is the outcome revealed. A blinding procedure thus requires at least two parties: a data manager who blinds the data and an analyst who designs the analyses. By interrupting the feedback loop between analysis plan and analysis outcome, blinding eliminates an important source of researcher bias.

Similar to preregistration, analysis blinding serves to prevent significance seeking and to inoculate researchers against hindsight bias and confirmation bias (e.g., Conley et al., 2006; Robertson & Kesselheim, 2016). But in contrast to preregistration, analysis blinding does not prevent confirmatory tests from using statistical models that are appropriate in light of the observed data. We believe that blinding, in particular when combined with preregistration, makes for an ideal procedure that allows for flexibility in analyses while retaining the virtue of truly confirmatory hypothesis testing.

Example: Applying analysis blinding in a regression design. Analyses may be blinded in various ways, and the selection of the appropriate blinding methodology requires careful thought. As Conley et al. (2006, p. 10) write: “[…] the goal is to hide as little information as possible while still acting against experimenter bias.” Thus, a first consideration is how much to distort a variable of interest. The distortion should be strong enough to hide any existing effects of key interest, yet small enough to still allow for sensible selection of an appropriate statistical model (J. R. Klein & Roodman, 2005). A second consideration is that some relationships in the data may need to be left entirely untouched. For example, in a regression design it is important to know the extent to which the predictors correlate. If this collinearity is not part of the hypothesis, the applied blinding procedure should leave it intact.

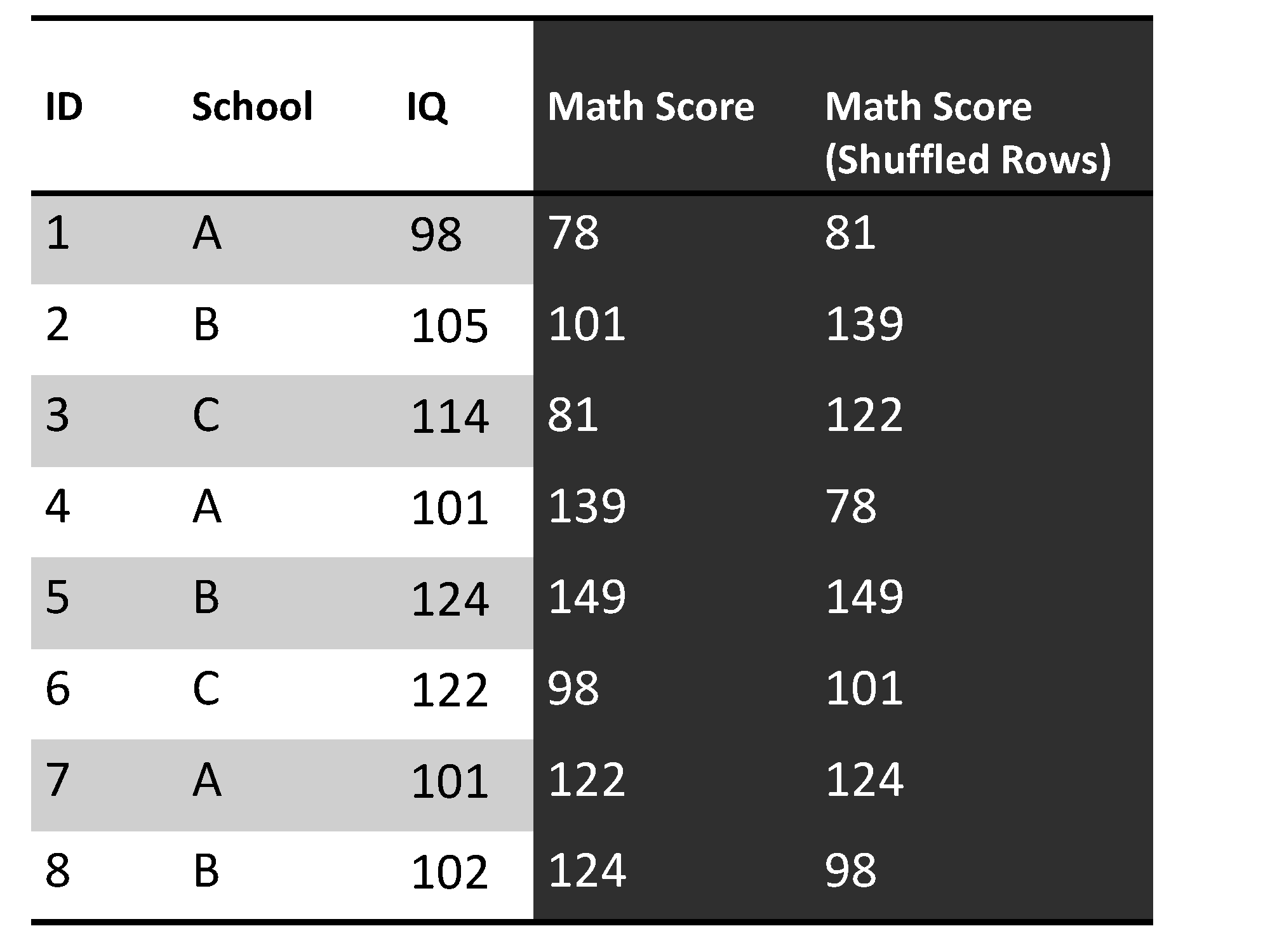

Thus, we argue that in a regression design the best method of blinding is to shuffle the criterion variable Y and leave the predictors intact (see Figure 1). Any correlation with a shuffled variable is thus based on chance, which breaks the results–analysis feedback loop. When the design is bivariate, it does not matter which variable is shuffled. However, in the case of a multiple regression, it is preferable to shuffle the criterion variable, so that any collinearity among the predictors stays intact and can be accounted for.

Figure 1. Analysis blinding in a regression design is illustrated using a fictitious example featuring data of students from different schools who performed a math test. For each of the students, an estimate of their IQ is also available. Blinding can be achieved when the data manager shuffles the dependent variable.

Conclusion

The presented article advocates analysis blinding and proposes to add it to the toolbox of improved practices and standards that are currently revolutionizing psychology. We believe that the blinding of analyses is an intuitive procedure that can be achieved in almost any study. The method is of particular interest for preregistered studies, because blinding keeps intact the virtue of true confirmatory hypothesis testing, while offering the flexibility that an analyst needs to account for peculiarities of a data set. That said, blinding analyses can also substantially improve the rigor and reliability of experimental findings in studies that are not preregistered. Blinding draws a sharp distinction between exploratory and confirmatory analyses, while leaving the analyst almost complete flexibility in selecting appropriate statistical models.

References

Chambers, C. D. (2017). The seven deadly sins of psychology: A manifesto for reforming the culture of scientific practice. Princeton: Princeton University Press.

Conley, A., Goldhaber, G., Wang, L., Aldering, G., Amanullah, R., Commins, E. D., . . . The Supernova Cosmology Project (2006). Measurement of Ωm, ΩΛ from a blind analysis of Type Ia supernovae with CMAGIC: Using color information to verify the acceleration of the Universe. The Astrophysical Journal, 644, 1–20.

De Groot, A. D. (1956/2014). The meaning of “significance” for different types of research. Translated and annotated by Eric-Jan Wagenmakers, Denny Borsboom, Josine Verhagen, Rogier Kievit, Marjan Bakker, Angelique Cramer, Dora Matzke, Don Mellenbergh, and Han L. J. van der Maas. Acta Psychologica, 148, 188–194.

De Groot, A. D. (1969). Methodology: Foundations of inference and research in the behavioral sciences. The Hague: Mouton.

Feynman, R. (1998). The meaning of it all: Thoughts of a citizen–scientist. Reading, MA: Perseus Books.

Gelman, A., & Loken, E. (2014). The statistical crisis in science. American Scientist, 102, 460–465.

Goldacre, B. (2009). Bad science. London: Fourth Estate.

Heinrich, J. G. (2003). Benefits of blind analysis techniques. Unpublished manuscript. Retrieved from https://www-cdf.fnal.gov/physics/statistics/notes/cdf6576_blind.pdf

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Medicine, 2, 696–701.

Klein, J. R., & Roodman, A. (2005). Blind analysis in nuclear and particle physics. Annual Review of Nuclear and Particle Science, 55, 141–163.

MacCoun, R., & Perlmutter, S. (2015). Hide results to seek the truth. Nature, 526, 187–189.

MacCoun, R., & Perlmutter, S. (2017). Blind analysis as a correction for confirmatory bias in physics and in psychology. In S. O. Lilienfeld & I. Waldman (Eds.), Psychological science under scrutiny: Recent challenges and proposed solutions (pp. 297–321). John Wiley and Sons.

Munafò, M. R., Nosek, B. A., Bishop, D. V. M., Button, K. S., Chambers, C. D., Percie du Sert, N., . . . Ioannidis, J. P. A. (2017). A manifesto for reproducible science. Nature Human Behaviour, 1, 0021.

Peirce, C. S. (1878). Deduction, induction, and hypothesis. Popular Science Monthly, 13, 470–482.

Peirce, C. S. (1883). A theory of probable inference. In C. S. Peirce (Ed.), Studies in logic (pp. 126–181). Boston: Little & Brown.

Robertson, C. T., & Kesselheim, A. S. (2016). Blinding as a solution to bias: Strengthening biomedical science, forensic science, and law. Amsterdam: Academic Press.

Wagenmakers, E.-J., Wetzels, R., Borsboom, D., van der Maas, H. L. J., & Kievit, R. A. (2012). An agenda for purely confirmatory research. Perspectives on Psychological Science, 7, 627–633.

About The Authors

Gilles Dutilh

Gilles Dutilh is a statistician at the Clinical Trial Unit of the University Hospital in Basel, Switzerland.

Alexandra Sarafoglou

Alexandra Sarafoglou is a PhD candidate at the Psychological Methods Group at the University of Amsterdam.

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is a professor at the Psychological Methods Group at the University of Amsterdam.