“Bayesian Thinking for Toddlers” is a children’s book that attempts to explain the core of Bayesian inference. I believe that core to be twofold. The first key notion is that of probability as a degree of belief, intensity of conviction, quantification of knowledge, assessment of plausibility, or whatever you want to call it, just as long as it is not some sort of frequency limit (the problems with the frequency definition are summarized in Jeffreys, 1961, Chapter 7). The second key notion is that of learning from predictive performance: accounts that predict the data relatively well increase in plausibility, whereas accounts that predict the data relatively poorly suffer a decline.

“Bayesian Thinking for Toddlers” (BTT) features a cover story with a princess, dinosaurs, and cookies. The simplicity of the cover story will not prevent the critical reader from raising an eyebrow at several points throughout the text. The purpose of this post is to explain what is going on in BTT under the hood.

Why 14 cookies?

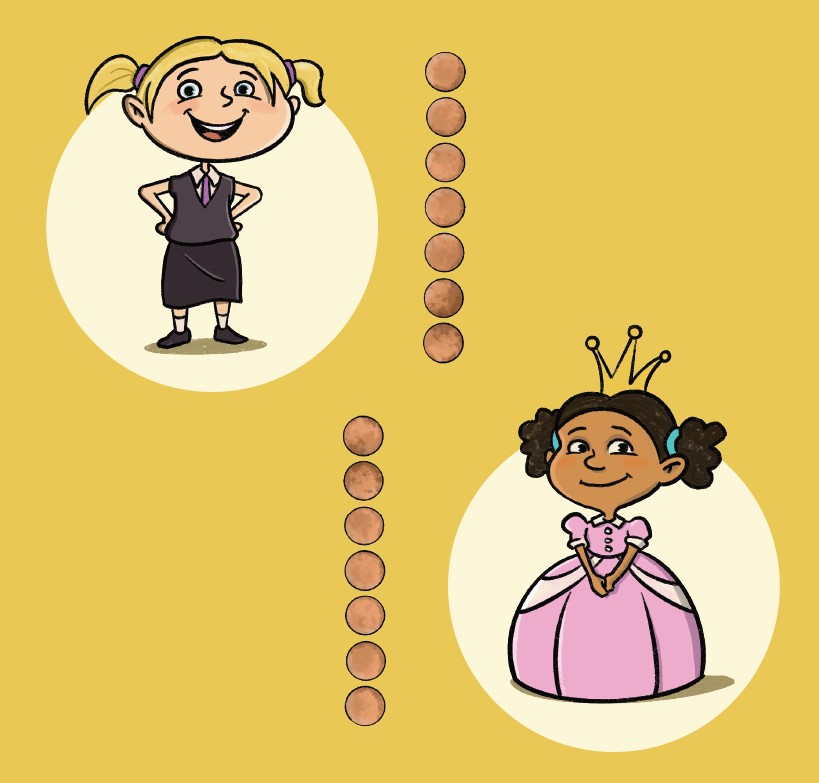

BTT features two girls –Kate and Miruna– who each believe they know the most about dinosaurs. Their aunt Agatha has baked 14 cookies for the girl who knows the most about dinosaurs. The cookies represent aunt Agatha’s conviction that each girl knows the most about dinosaurs. At the start, Agatha knows nothing about their relative ability and therefore intends to divide the cookies evenly:

The division of cookies represents aunt Agatha’s prior distribution. It could also have been shown as a bar chart (with two bars, each one going up to .50), but that would have been boring.

Why 14 cookies? Well, it needs to be an even number, or else dividing them evenly means we have to start by splitting one of the cookies in two, which is inelegant. As we will see later, Kate and Miruna engage in a competition that requires successive adjustments to aunt Agatha’s prior distribution. With a reasonable number of cookies I was unable to prevent cookies from being split, but among the choices available I felt that 14 was the best compromise. Of course the number itself is arbitrary; armed with a keen eye and a sharp knife (and a large cookie), aunt Agatha could start with a single cookie and divide it up in proportion to her confidence that Kate (or Miruna) knows the most about dinosaurs.
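The trade-off can be made concrete with a small sketch. It assumes Kate's share of Agatha's belief at each stage is 1/2, 6/7, and 3/4 (the values reported later in this post); the names `TOTAL_COOKIES` and `cookie_split` are introduced here purely for illustration.

```python
from fractions import Fraction

TOTAL_COOKIES = 14

# Kate's share of Agatha's belief at each stage, as reported in this post.
kate_share = {
    "prior": Fraction(1, 2),
    "after Triceratops": Fraction(6, 7),
    "after Stegosaurus": Fraction(3, 4),
}

def cookie_split(total, share):
    """Return (Kate's cookies, Miruna's cookies) as exact fractions."""
    kate = total * share
    return kate, total - kate

splits = {stage: cookie_split(TOTAL_COOKIES, s) for stage, s in kate_share.items()}

for stage, (kate, miruna) in splits.items():
    print(f"{stage}: Kate {float(kate)}, Miruna {float(miruna)}")
```

With 14 cookies, only the final stage requires a knife (10.5 versus 3.5 cookies). For these three particular fractions, the smallest total that avoids any splitting is 28, the least common multiple of the denominators 2, 7, and 4.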

Do the Girls Violate Cromwell’s Rule?

To figure out which girl knows the most about dinosaurs, aunt Agatha asks two questions: “how many horns does a Triceratops have on its head?” and “how many spikes does a Stegosaurus have on its tail?”. For the Triceratops question, Kate knows the correct answer with 100% certainty:

Similarly, for the Stegosaurus question, Miruna knows the answer with 100% certainty:

This is potentially problematic. What if, for some question, both girls are 100% confident but their answers are both incorrect? Epistemically, both would go bust, even though Kate may have outperformed Miruna on the other questions. This conundrum results from the girls not appreciating that they can never be 100% confident about anything; in other words, they have violated “Cromwell’s rule” (an argument could be made for calling it “Carneades’ rule”, but we won’t go into that here). In a real forecasting competition, it is generally unwise to stake your entire fortune on a single outcome — getting it wrong means going bankrupt. I made the girls state their knowledge with certainty in order to simplify the exposition; critical readers may view these statements as an approximation to a state of very high certainty (instead of absolute certainty).
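A minimal sketch of why absolute certainty is dangerous, assuming the girls are compared through the ratio of the probabilities they assigned to the correct answer. The function name and the "nearly certain" numbers are hypothetical and serve only as an illustration.

```python
from fractions import Fraction

def bayes_factor(p_kate, p_miruna):
    """Ratio of the probabilities each girl assigned to the correct answer."""
    if p_kate == 0 and p_miruna == 0:
        return None  # 0/0: both went bust, so the comparison is undefined
    if p_miruna == 0:
        return float("inf")  # only Miruna went bust
    return Fraction(p_kate) / Fraction(p_miruna)

# Both girls were 100% certain of a wrong answer: probability 0 on the truth.
both_bust = bayes_factor(Fraction(0), Fraction(0))

# Hypothetical "very high but not absolute" certainty: each girl kept a sliver
# of probability on the answer that turned out to be correct.
nearly_certain = bayes_factor(Fraction(5, 1000), Fraction(1, 1000))

print(both_bust, nearly_certain)
```

In the first case no update is possible at all; in the second, the evidence from the other questions survives and the comparison remains well defined.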

Observed Data Only

What if, for the Triceratops question, Kate and Miruna would have assigned the following probabilities to the different outcomes:

| #Horns | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Kate | .10 | .20 | .20 | .40 | .10 | 0 | 0 | 0 | 0 |
| Miruna | 0 | 0 | 0 | .40 | 0 | 0 | 0 | 0 | .60 |

Both Kate and Miruna assign the same probability (.40) to the correct answer, so the standard treatment would say that we should not change our beliefs about who knows more about dinosaurs — Kate and Miruna performed equally well. Nevertheless, Miruna assigned a probability of .60 to the ridiculous proposition that Triceratops have 8 horns, and probability 0 to the much more plausible proposition that they have two horns. It seems that Miruna knows much less about dinosaurs than Kate. What is going on? Note that if these probabilities were bets, the bookie would pay Kate and Miruna exactly the same — whether money was wasted on highly likely or highly unlikely outcomes is irrelevant when those outcomes did not occur. Similarly, Phil Dawid’s prequential principle (e.g., Dawid, 1984, 1985, 1991) states that the adequacy of a sequential forecasting system ought to depend only on the quality of the forecasts made for the observed data. However, some people may still feel that based on the predictions issued most cookies should go to Kate rather than Miruna.

After considering the issue some more, I think that (as is often the case) the solution rests in the prior probability that is assigned to Kate vs Miruna. The predictions from Miruna are so strange that this greatly reduces our a priori trust in her knowing much about dinosaurs: our background information tells us, even before learning the answer, that we should be skeptical about Miruna’s level of knowledge. The data themselves, however, do not alter our initial skepticism.
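The argument can be checked numerically. The sketch below uses the probabilities from the table above and takes 3 horns as the correct answer; the 3:1 "skeptical" prior odds in favor of Kate are a hypothetical value chosen only for illustration.

```python
from fractions import Fraction

# Probabilities for 0..8 horns, copied from the table above.
kate = [Fraction(p, 100) for p in (10, 20, 20, 40, 10, 0, 0, 0, 0)]
miruna = [Fraction(p, 100) for p in (0, 0, 0, 40, 0, 0, 0, 0, 60)]
assert sum(kate) == 1 and sum(miruna) == 1

OBSERVED_HORNS = 3  # the correct answer

# Only the probability assigned to the observed outcome enters the Bayes factor.
bayes_factor = kate[OBSERVED_HORNS] / miruna[OBSERVED_HORNS]

def posterior_prob_kate(prior_odds, bf):
    """Convert prior odds for Kate plus a Bayes factor into a posterior probability."""
    posterior_odds = prior_odds * bf
    return posterior_odds / (posterior_odds + 1)

even_prior = posterior_prob_kate(Fraction(1), bayes_factor)
skeptical_prior = posterior_prob_kate(Fraction(3), bayes_factor)  # hypothetical 3:1

print(bayes_factor, even_prior, skeptical_prior)  # prints: 1 1/2 3/4
```

Because the Bayes factor equals 1, whatever Agatha believed beforehand (even odds, or odds that already favor Kate) is left exactly as it was.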

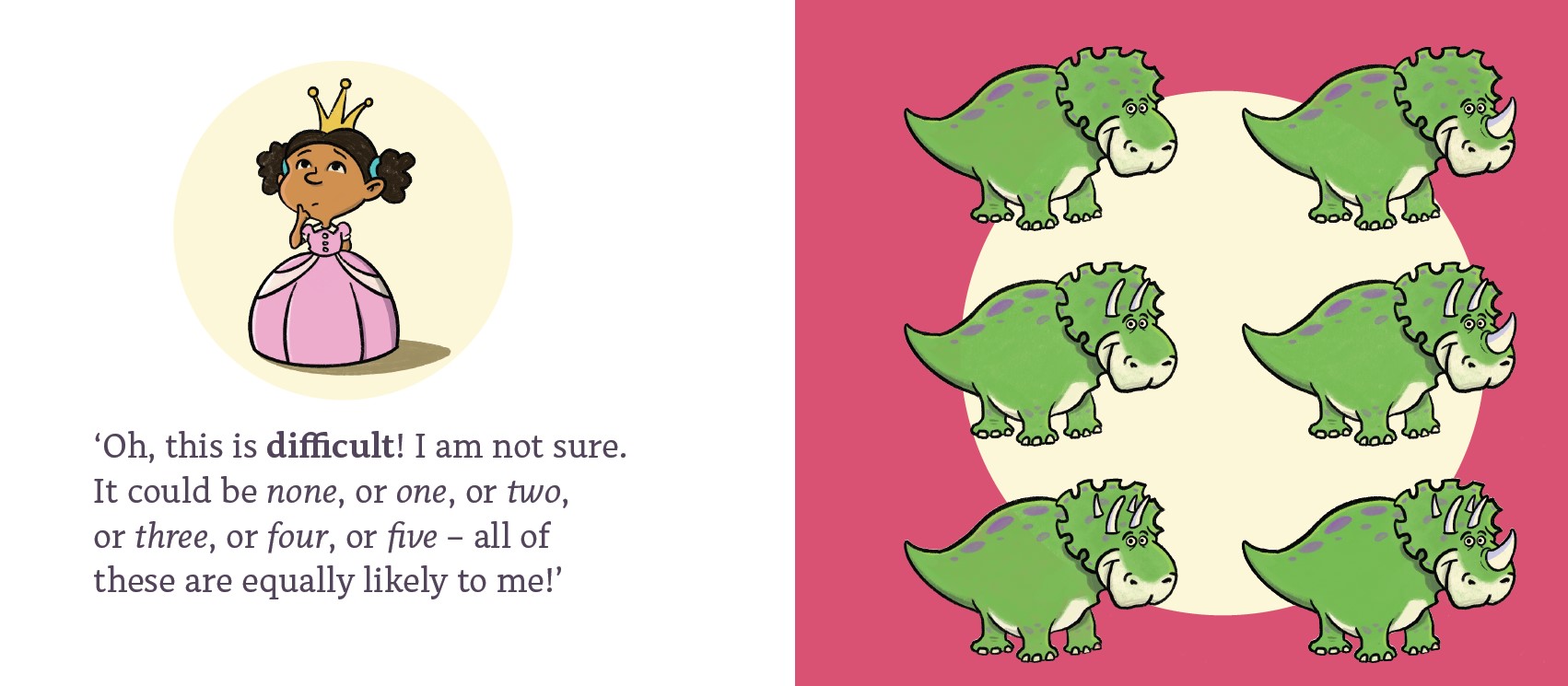

Vague Knowledge or No Knowledge?

Miruna gives a very vague answer to the Triceratops question:

The assumption that Miruna deemed the different options equally likely was made for convenience. Miruna effectively specified a uniform distribution across the number of horns. The son of a friend of mine remarked that Miruna simply did not know the answer at all. This is not exactly true, of course: the options involving more than five horns were ruled out. Many philosophers have debated the question of whether or not a uniform distribution across the different options reflects ignorance. The issue is not trivial; for instance, a uniform distribution from 0 to 1000 horns would reflect the strong conviction that the number of horns is greater than 10 — this appears a rather strong prior commitment. At any rate, what matters is that Miruna considers the different options to be equally likely a priori.
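To see how much a "flat" distribution can commit to, here is a small sketch. It assumes a discrete uniform distribution over the whole numbers from 0 up to some maximum (inclusive); the function name is introduced here for illustration.

```python
from fractions import Fraction

def prob_greater_than(threshold, max_horns):
    """P(horns > threshold) under a discrete uniform on 0..max_horns."""
    n_options = max_horns + 1
    n_above = max(0, max_horns - threshold)
    return Fraction(n_above, n_options)

wide = prob_greater_than(10, 1000)  # uniform on 0..1000
narrow = prob_greater_than(10, 5)   # Miruna's uniform on 0..5

print(float(wide), float(narrow))
```

Under the wide uniform, roughly 99% of the prior mass sits on "more than 10 horns", whereas Miruna's uniform over 0 to 5 assigns that proposition probability zero and gives 1/6 to each option she considers possible.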

Where is the Sampling Variability?

In this example there is no sampling variability. There is a true state of nature, and Kate and Miruna are judged on their ability to predict it. This is conceptually related to the Bayesian analysis of the decimal expansion of irrational numbers (e.g., Gronau & Wagenmakers, 2018).

How Are the Girls Making a Prediction?

In the discussion of the results, I claim that Kate and Miruna are judged on their ability to predict the correct answer. This seems strange. The last dinosaurs walked the earth millions of years ago, so in what sense is the answer from Kate and Miruna “prediction”?

Those who are uncomfortable with the word “predict” may replace it with “retrodict”. What matters is that the answers from Kate and Miruna can be used to fairly evaluate the quality of their present knowledge. Suppose I wish to compare the performance of two weatherpersons. In scenario A, the forecasters are given information about today’s weather and make a probabilistic forecast for the next day. We continue this process until we are confident which forecaster is better. In scenario B, we first lock both forecasters up in an atomic bunker and deprive them of modern methods of communication with the outside world. After one week has passed, we provide the forecasters with the weather seven days ago and let them make “predictions” for the next day, that is, the weather from six days ago. Because this weather has already happened, these are retrodictions rather than predictions. Nevertheless, the distinction is only superficial: it does not affect our ability to assess the quality of the forecasters (provided of course that they have access to the same information in scenario A vs B). The temporal aspect is irrelevant — all that matters is the relation between one’s state of knowledge and the data that needs to be accounted for.

For those who cannot get over their intuitive dislike of retrodictions, one may imagine a time machine and rephrase Agatha’s question as “Suppose you enter the time machine and travel back to the time of the dinosaurs. If you step out and see a Triceratops, how many horns would it have?” (more likely prediction scenarios involve the display of dinosaurs in books or a museum).

Where Are the Parameters?

The standard introduction to Bayesian inference involves a continuous prior distribution (usually a uniform distribution from 0 to 1) that is updated to a continuous posterior distribution. The problem is that this is not at all a trivial operation: it involves integration and the concept of infinity. In BTT we just have two discrete hypotheses: Kate knows the most about dinosaurs or Miruna knows the most about dinosaurs, and the fair distribution of cookies reflects Agatha’s relative confidence in these two possibilities. Kate and Miruna themselves may have complicated models of the world, and these may be updated as a result of learning the answers to the questions. That is fine, but for aunt Agatha (and for the reader) this complication is irrelevant: all that matters is the assessment of knowledge through “predictive” (retrodictive) success.

Assumption of the True Model

Some statisticians argue that Bayes’ rule depends on the true model being in the set of candidate models (i.e., the M-closed setup, to be distinguished from the M-open setup). I have never understood the grounds for this. Bayes’ rule itself does not commit to any kind of realism, and those who promoted Bayesian learning (e.g., Jeffreys and de Finetti) strongly believed that models are only ever an approximation to reality. Dawid’s prequential principle is also quite explicitly concerned with the assessment of predictive performance, without any commitment on whether the forecaster is “correct” in some absolute sense. From the forecasting perspective it is even strange to speak of a forecaster being “true”; a forecaster simply forecasts, and Bayes’ rule is the way in which incoming data coherently update the forecaster’s knowledge base. In BTT, Kate and Miruna can be viewed as forecasters or as statistical models; it does not matter for their evaluation, nor does it matter whether or not we view the “models” as potentially correct.

Where Are the Prior and Posterior Distributions?

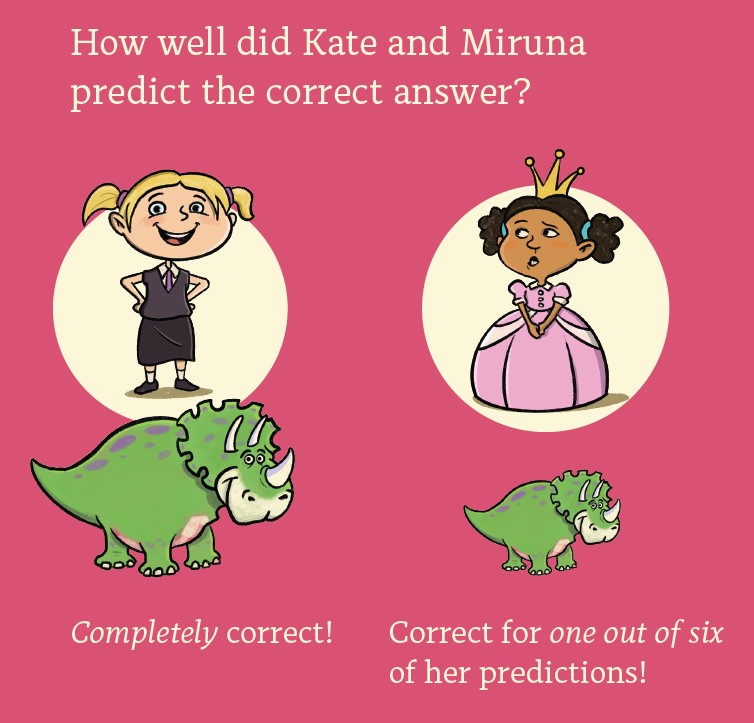

They are represented by the division of cookies; the prior distribution is 1/2 – 1/2 at the start, then updated to a 6/7 – 1/7 posterior distribution after the Triceratops question, and finally to a 3/4 – 1/4 posterior distribution after the Stegosaurus question.

How Did You Get the Numbers?

At the start, Agatha assigns Kate and Miruna a probability of 1/2, meaning that the prior odds equals 1. For the Triceratops question Kate outpredicts Miruna by a factor of 6. Thus, the posterior odds is 1 × 6 = 6, and the corresponding posterior probability is 6/(6+1) = 6/7, leaving 1/7 for Miruna. For the Stegosaurus question, the Bayes factor is 2 in favor of Miruna (so 1/2 in favor of Kate); the posterior odds is updated to 6 × 1/2 = 3 for Kate, resulting in a posterior probability of 3/(3+1) = 3/4 for Kate, leaving 1/4 for Miruna. At each stage, the division of cookies reflects Agatha’s posterior probability for Kate vs. Miruna knowing the most about dinosaurs.
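The same arithmetic can be written as a short sketch of sequential updating on the odds scale. The helper names `update` and `odds_to_prob` are introduced here for illustration; the Bayes factors 6 and 1/2 are the ones stated above.

```python
from fractions import Fraction

def update(prior_odds, bayes_factor):
    """Posterior odds = prior odds x Bayes factor."""
    return prior_odds * bayes_factor

def odds_to_prob(odds):
    """Convert odds in favor of Kate into a probability for Kate."""
    return odds / (odds + 1)

odds = Fraction(1)                   # prior: 1/2 vs 1/2
odds = update(odds, Fraction(6))     # Triceratops: factor 6 for Kate
p_after_triceratops = odds_to_prob(odds)

odds = update(odds, Fraction(1, 2))  # Stegosaurus: factor 2 for Miruna
p_after_stegosaurus = odds_to_prob(odds)

print(p_after_triceratops, p_after_stegosaurus)  # prints: 6/7 3/4
```

Yesterday's posterior odds become today's prior odds, so the order in which the two questions are asked does not change the final result.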

What About Ockham’s Razor?

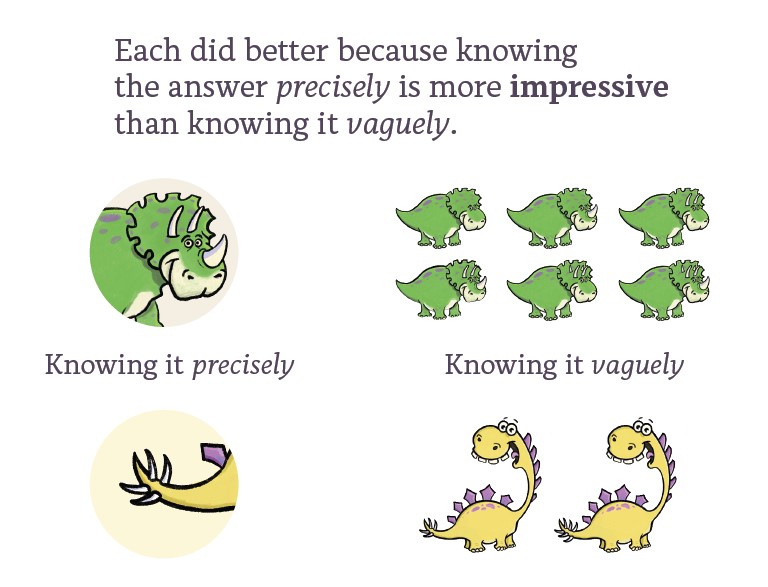

In Bayes factor model comparison, simple models are preferred because they make precise predictions; when the data are in line with these precise predictions, these simple models outperform more complex rivals that are forced to spread out their predictions across the data space. This is again similar to betting — when you hedge your bets you will not gain as much as someone who puts most eggs in a single basket (presuming the risky bet turns out to be correct):
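As a rough sketch of this trade-off, consider a "simple" account that stakes everything on 3 horns and a "complex" account that spreads its bets evenly over 0 to 5 horns. These two functions are illustrative stand-ins loosely modeled on the Triceratops question, not a general implementation.

```python
from fractions import Fraction

N_OPTIONS = 6  # the complex account considers 0..5 horns

def simple_model(horns):
    """Risky prediction: all probability on exactly 3 horns."""
    return Fraction(1) if horns == 3 else Fraction(0)

def complex_model(horns):
    """Hedged prediction: probability spread evenly over 0..5 horns."""
    return Fraction(1, N_OPTIONS) if 0 <= horns < N_OPTIONS else Fraction(0)

def bayes_factor_simple_vs_complex(observed):
    # Defined only for outcomes the complex account considers possible (0..5).
    return simple_model(observed) / complex_model(observed)

bf_when_right = bayes_factor_simple_vs_complex(3)
bf_when_wrong = bayes_factor_simple_vs_complex(2)

print(bf_when_right, bf_when_wrong)  # prints: 6 0
```

The risky account gains a factor of 6 when its precise prediction comes true, and loses everything when it does not; the hedged account never wins big but also never goes bust within the range it covers.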

Concluding Comments

It is interesting how many statistical concepts are hidden beneath a simple story about cookies and dinosaurs. Please let me know if there is anything else you would like me to clarify.

References

Dawid, A. P. (1984). Present position and potential developments: Some personal views: Statistical theory: The prequential approach (with discussion). Journal of the Royal Statistical Society Series A, 147, 278-292.

Dawid, A. P. (1985). Calibration-based empirical probability. The Annals of Statistics, 13, 1251-1273.

Dawid, A. P. (1991). Fisherian inference in likelihood and prequential frames of reference. Journal of the Royal Statistical Society B, 53, 79-109.

Jeffreys, H. (1961). Theory of probability (3rd ed.). Oxford: Oxford University Press.

Gronau, Q. F., & Wagenmakers, E.-J. (2018). Bayesian evidence accumulation in experimental mathematics: A case study of four irrational numbers. Experimental Mathematics, 27, 277-286. Open access.

Wagenmakers, E.-J. (2020). Bayesian thinking for toddlers. A Dutch version (“Bayesiaans Denken voor Peuters”) and a German version (“Bayesianisches Denken mit Dinosauriern”) are also available, as is an interview.

About The Authors

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.