This post is a synopsis of the Bayesian work featured in Landy et al. (in press). Crowdsourcing hypothesis tests: Making transparent how design choices shape research results. Psychological Bulletin. Preprint available at https://osf.io/fgepx/; the 325-page supplement is available at https://osf.io/jm9zh/; the Bayesian analyses can be found on pp. 238-295.

Abstract

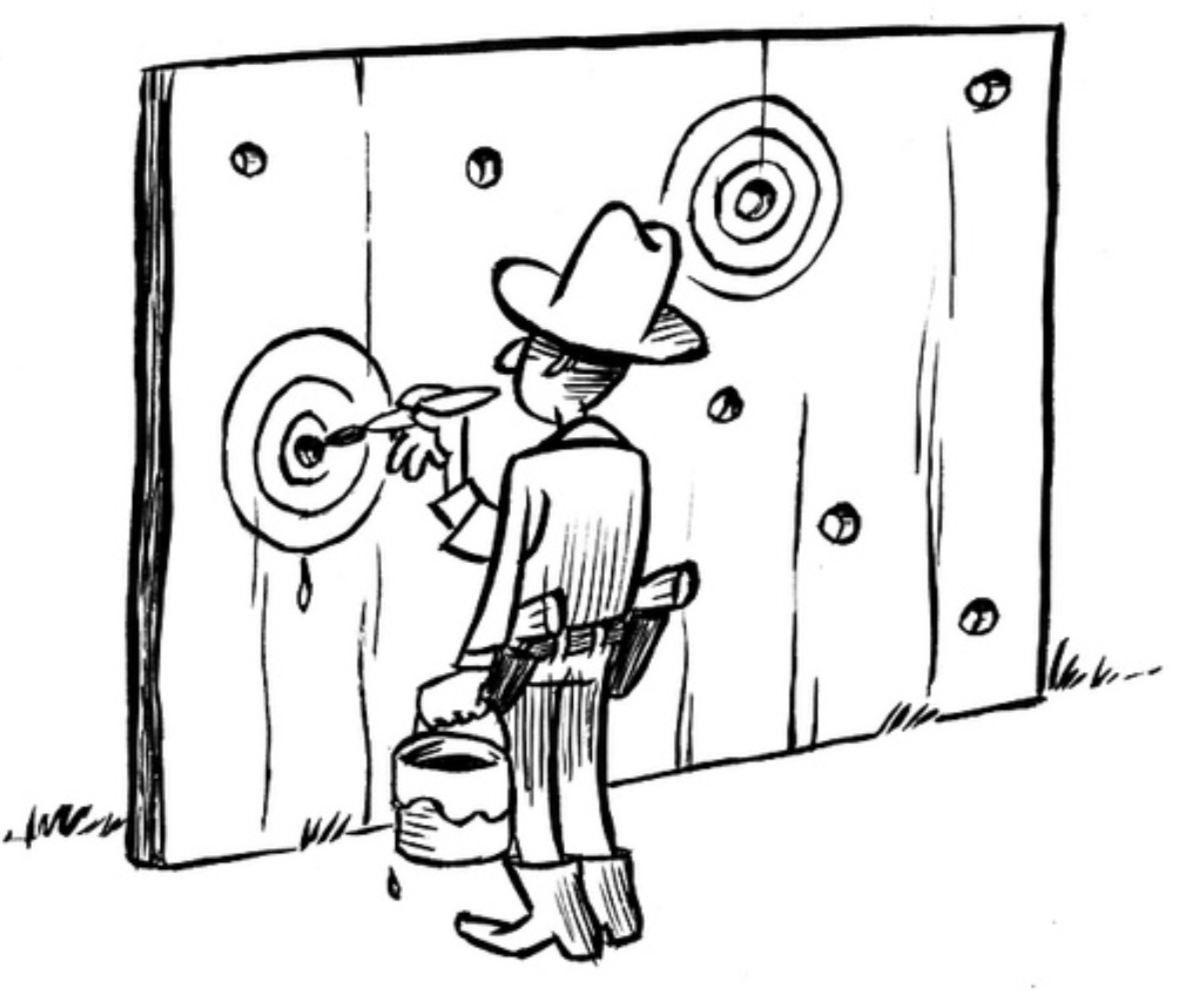

“To what extent are research results influenced by subjective decisions that scientists make as they design studies? Fifteen research teams independently designed studies to answer five original research questions related to moral judgments, negotiations, and implicit cognition. Participants from two separate large samples (total N > 15,000) were then randomly assigned to complete one version of each study. Effect sizes varied dramatically across different sets of materials designed to test the same hypothesis: materials from different teams rendered statistically significant effects in opposite directions for four out of five hypotheses, with the narrowest range in estimates being d = -0.37 to +0.26. Meta-analysis and a Bayesian perspective on the results revealed overall support for two hypotheses, and a lack of support for three hypotheses. Overall, practically none of the variability in effect sizes was attributable to the skill of the research team in designing materials, while considerable variability was attributable to the hypothesis being tested. In a forecasting survey, predictions of other scientists were significantly correlated with study results, both across and within hypotheses. Crowdsourced testing of research hypotheses helps reveal the true consistency of empirical support for a scientific claim.”

The Bayesian Perspective

The Bayesian analyses were designed and conducted by Quentin Gronau, Alexander Ly, Don van den Bergh, Maarten Marsman, Koen Derks, and myself. The article summarizes the results as follows:

“Supplement 7 provides an extended report of Bayesian analyses of the overall project results (the pre-registered analysis plan is available at https://osf.io/9jzy4/). To summarize briefly, the Bayesian analyses find compelling evidence in favor of Hypotheses 2 and 3, moderate evidence against Hypothesis 1 and 4, and strong evidence against Hypothesis 5. Overall, two of five original hypotheses were confirmed aggregating across the different study designs. This pattern is generally consistent with the frequentist analyses reported above, with the exception that the frequentist approach suggests a very small but statistically significant (p < .05) effect in the direction predicted by Hypothesis 5 after aggregating across the different study designs, whereas the Bayesian analyses find strong evidence against this prediction. The project coordinators, original authors who initially proposed Hypothesis 5, as well as further authors on this article concur with the Bayesian analyses that the effect is not empirically supported by the crowdsourcing hypotheses tests project, due to the small estimate of the effect, and heterogeneity across designs. Regarding the main meta-scientific focus of this initiative, namely variability in results due to researcher choices, for all five hypotheses strong evidence of heterogeneity across different study designs emerged in the Bayesian analyses.”

The article supplement provides the Bayesian details on pp. 238-295. I personally like the use of informed prior distributions and model averaging.
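For readers unfamiliar with model averaging, here is a minimal sketch of the general idea; it is not the code used in the supplement. It assumes four candidate meta-analytic models (fixed or random effects, crossed with null or alternative), which is a common setup but an assumption here. The model names, log marginal likelihoods, and equal prior model probabilities are all hypothetical placeholders. The sketch turns marginal likelihoods into posterior model probabilities and then into inclusion Bayes factors for "there is an effect" and "there is heterogeneity".

```python
import math


def posterior_model_probs(log_marginal_likelihoods, prior_probs):
    """Posterior model probabilities from log marginal likelihoods and priors."""
    # Work on the log scale and subtract the maximum for numerical stability.
    log_unnorm = {
        name: log_ml + math.log(prior_probs[name])
        for name, log_ml in log_marginal_likelihoods.items()
    }
    max_log = max(log_unnorm.values())
    weights = {name: math.exp(v - max_log) for name, v in log_unnorm.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def inclusion_bayes_factor(posterior, prior, models_with_feature):
    """Change from prior to posterior odds for the models sharing a feature.

    Undefined (division by zero) if those models hold all or none of the
    prior or posterior probability.
    """
    post_in = sum(posterior[m] for m in models_with_feature)
    prior_in = sum(prior[m] for m in models_with_feature)
    posterior_odds = post_in / (1.0 - post_in)
    prior_odds = prior_in / (1.0 - prior_in)
    return posterior_odds / prior_odds


# Hypothetical inputs, for illustration only (not values from the project).
prior = {"fixed_H0": 0.25, "fixed_H1": 0.25, "random_H0": 0.25, "random_H1": 0.25}
log_ml = {
    "fixed_H0": 0.0,
    "fixed_H1": math.log(3.0),
    "random_H0": 0.0,
    "random_H1": math.log(3.0),
}

posterior = posterior_model_probs(log_ml, prior)
bf_effect = inclusion_bayes_factor(posterior, prior, ["fixed_H1", "random_H1"])
bf_heterogeneity = inclusion_bayes_factor(posterior, prior, ["random_H0", "random_H1"])
print(posterior, bf_effect, bf_heterogeneity)
```

With these made-up inputs the data favor the effect models by a factor of about 3 and are neutral about heterogeneity. The point of averaging is that the evidence for an effect does not hinge on committing to either the fixed-effect or the random-effects model in advance.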

References

Landy, J. F., Jia, M., Ding, I. L., Viganola, D., Tierney, W., Dreber, A., Johannesson, M., Pfeiffer, T., Ebersole, C. R., Gronau, Q. F., Ly, A., van den Bergh, D., Marsman, M., Derks, K., Wagenmakers, E.-J., Proctor, A., Bartels, D. M., Bauman, C. W., Brady, W. J., Cheung, F., Cimpian, A., Dohle, S., Donnellan, M. B., Hahn, A., Hall, M. P., Jiménez-Leal, W., Johnson, D. J., Lucas, R. E., Monin, B., Montealegre, A., Mullen, E., Pang, J., Ray, J., Reinero, D. A., Reynolds, J., Sowden, W., Storage, D., Su, R., Tworek, C. M., Van Bavel, J. J., Walco, D., Wills, J., Xu, X., Yam, K. C., Yang, X., Cunningham, W. A., Schweinsberg, M., Urwitz, M., The Crowdsourcing Hypothesis Tests Collaboration, & Uhlmann, E. L. (in press). Crowdsourcing hypothesis tests: Making transparent how design choices shape research results. Psychological Bulletin.

About The Author

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is a professor in the Psychological Methods Group at the University of Amsterdam.