This post is an extended synopsis of two papers: The MARP Team (2022). A Many-Analysts Approach to the Relation Between Religiosity and Well-being. Preprint available on PsyArXiv: https://psyarxiv.com/pbfye/. Sarafoglou, Hoogeveen & Wagenmakers (2022). Comparing Analysis Blinding With Preregistration In The Many-Analysts Religion Project. Preprint available on PsyArXiv: https://psyarxiv.com/6dn8f/.

Summary

The relation between religiosity and well-being is one of the most researched topics in the psychology of religion, yet the directionality and robustness of the effect remain debated. Here, we adopted a many-analysts approach to assess the robustness of this relation based on a new cross-cultural dataset (N = 10,535 participants from 24 countries). We recruited 120 analysis teams, of which 61 preregistered their analysis and 59 used analysis blinding. Teams investigated (1) whether religious people self-report higher well-being, and (2) whether the relation between religiosity and self-reported well-being depends on perceived cultural norms of religion (i.e., whether it is considered normal and desirable to be religious in a given country). In a two-stage procedure, the teams first created an analysis plan and then executed their planned analysis on the data. For the first research question, all but 3 teams reported positive effect sizes with credible/confidence intervals excluding zero (median reported beta = 0.120). For the second research question, this was the case for 65% of the teams (median reported beta = 0.039).

Furthermore, we compared the reported efficiency and convenience of preregistration and analysis blinding. Analysis blinding ties in with current methodological reforms for more transparency since it safeguards the confirmatory status of the analyses while simultaneously allowing researchers to explore peculiarities of the data and account for them in their analysis plan. Our results showed that analysis blinding and preregistration imply approximately the same amount of work but that in addition, analysis blinding reduced deviations from analysis plans. As such, analysis blinding constitutes an important addition to the toolbox of effective methodological reforms to combat the crisis of confidence.

Many-analysts approach

In the current project, we aimed to shed light on the association between religion and well-being and the extent to which different theoretically- or methodologically-motivated analytic choices affect the results. To this end, we initiated a many-analysts project, in which several independent analysis teams analyze the same dataset in order to answer a specific research question (e.g., Bastiaansen et al., 2020; Boehm et al., 2018; Botvinik-Nezer et al., 2020; Silberzahn & Uhlmann, 2015; van Dongen et al., 2019).

We believe that our project involves a combination of elements that extend existing many-analysts work. First, we collected new data for this project with the aim of providing new evidence for the research questions of interest, as opposed to using an existing dataset that has been analyzed before. Second, we targeted both researchers interested in methodology and open science and researchers from the scientific study of religion and health, to encourage decisions that are both methodologically sound and theoretically relevant. Third, in comparison to previous many-analysts projects in psychology, the current project included a large number of teams (120). Fourth, we applied a two-stage procedure that ensured a purely confirmatory status of the analyses: in stage 1, all teams either completed a preregistration or specified an analysis pipeline based on a blinded version of the data. After submitting the plan to the OSF, teams received the real data and executed their planned analyses in stage 2. Fifth, the many-analysts approach itself was preregistered prior to cross-cultural data collection (see https://osf.io/xg8y5).
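To make the idea of a "blinded version of the data" concrete, here is a minimal sketch of one common blinding strategy: shuffling the outcome variable within each country so that its distribution is preserved while its link to the predictors is broken. This is an illustrative assumption rather than a description of the exact procedure used in the project; the function name `blind_outcome`, the column names, and the toy rows are all hypothetical.

```python
import random
from collections import defaultdict


def blind_outcome(rows, outcome="wellbeing", group="country", seed=2022):
    """Return a copy of `rows` with the outcome shuffled within each group.

    Hypothetical helper: predictors stay untouched, so analysts can still
    inspect missingness, outliers, and scale properties, but any relation
    between predictors and the outcome is destroyed.
    """
    rng = random.Random(seed)

    # Collect the row indices that belong to each group (e.g., country).
    indices_by_group = defaultdict(list)
    for index, row in enumerate(rows):
        indices_by_group[row[group]].append(index)

    # Copy the rows so the real data are never modified.
    blinded = [dict(row) for row in rows]

    # Permute the outcome values among the rows of the same group.
    for indices in indices_by_group.values():
        values = [rows[i][outcome] for i in indices]
        rng.shuffle(values)
        for i, value in zip(indices, values):
            blinded[i][outcome] = value
    return blinded


# Toy data, invented purely for illustration.
rows = [
    {"country": "NL", "religiosity": 0.2, "wellbeing": 3.1},
    {"country": "NL", "religiosity": 0.7, "wellbeing": 3.9},
    {"country": "NL", "religiosity": 0.5, "wellbeing": 3.4},
    {"country": "US", "religiosity": 0.9, "wellbeing": 4.2},
    {"country": "US", "religiosity": 0.1, "wellbeing": 2.8},
    {"country": "US", "religiosity": 0.4, "wellbeing": 3.6},
]
blinded = blind_outcome(rows)
```

Under this kind of scheme, a team can develop and debug its full analysis pipeline on `blinded`, submit the plan, and only then run the same code on the real data.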

Highlights

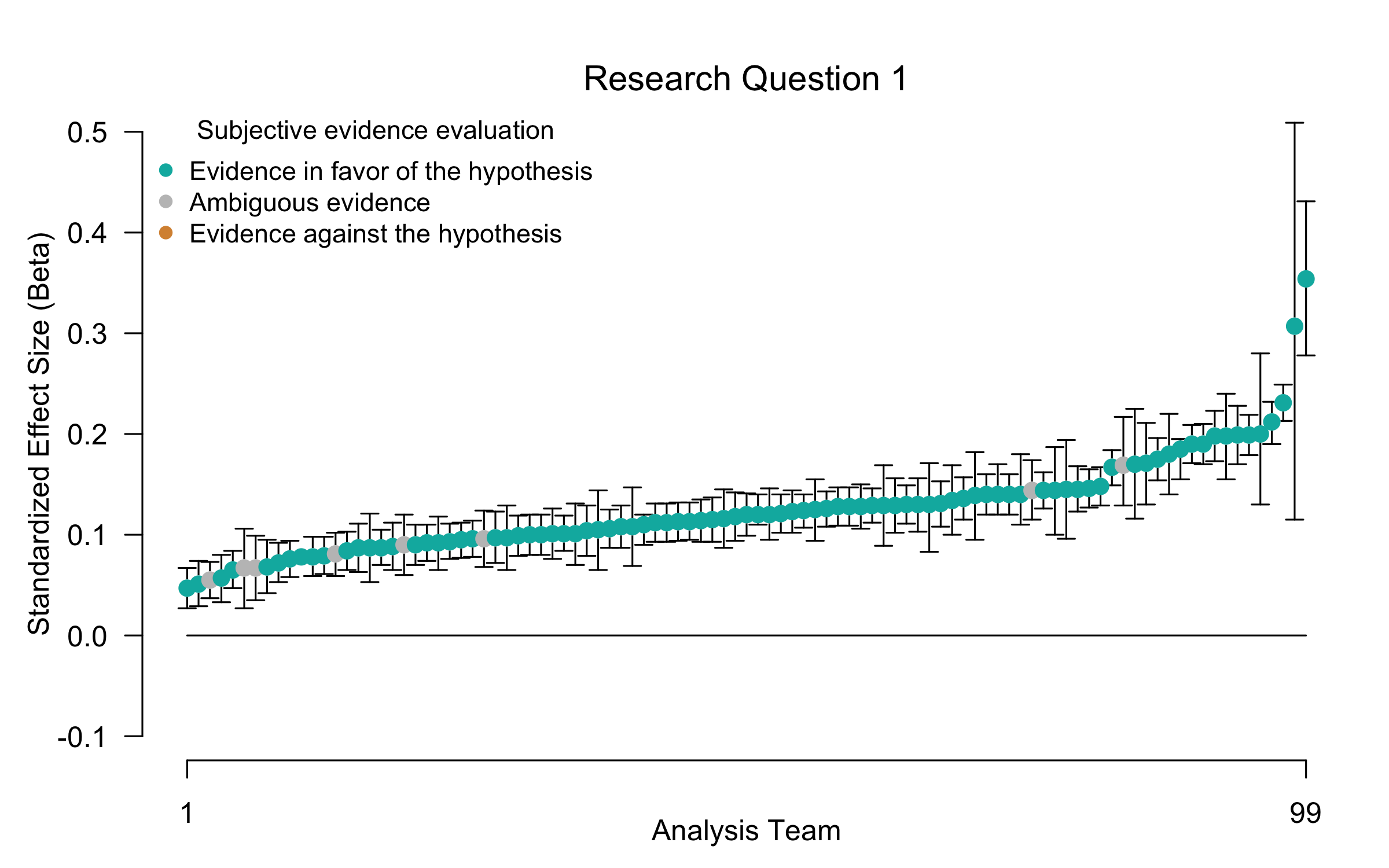

Research Question 1: “Do religious people self-report higher well-being?”

We were able to extract 99 beta coefficients from the results provided by the 120 teams that completed stage 2. As shown in Figure 1, the results are remarkably consistent: all 99 teams reported a positive beta value, and for all teams the 95% confidence/credible interval excludes zero. The median reported beta is 0.120 and the median absolute deviation is 0.036. Furthermore, 88% of the teams concluded that there is good evidence for a positive relation between religiosity and self-reported well-being. Notably, although the teams were almost unanimous in their evaluation of research question 1, only eight of the 99 teams reported a combination of effect size and confidence/credible interval that matched that of another team (i.e., four effect sizes were reported twice).
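For readers unfamiliar with the median absolute deviation (MAD), the sketch below shows how such a summary can be computed. The beta values are made up for illustration and are not the teams' reported coefficients, and the unscaled MAD (without the 1.4826 normal-consistency constant) is an assumption, since the post does not state which variant was used.

```python
from statistics import median


def median_abs_deviation(values):
    """Unscaled MAD: the median of absolute deviations from the median."""
    center = median(values)
    return median([abs(value - center) for value in values])


# Hypothetical beta coefficients, invented for illustration only.
example_betas = [0.08, 0.10, 0.12, 0.13, 0.18]

example_median = median(example_betas)
example_mad = median_abs_deviation(example_betas)
print(f"median = {example_median:.3f}, MAD = {example_mad:.3f}")
```

Because both quantities are based on medians, a single team with an unusually large or small effect size has little influence on the summary.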

Do note that in contrast to the unanimity in results based on the beta coefficients, out of the 21 teams for whom a beta coefficient could not be calculated, 3 teams reported evidence against the relation between religiosity and well-being: 2 teams used machine learning and found that none of the religiosity items contributed substantially to predicting well-being and 1 team used multilevel modeling and reported unstandardized gamma-weights for within- and between-country effects of religiosity whose confidence intervals included zero (see the Online Appendix).

Figure 1: Beta coefficients for the effect of religiosity on self-reported well-being (research question 1) with 95% confidence or credible intervals. Green/blue points indicate effect sizes of teams that subjectively concluded that there is good evidence for a positive relation between individual religiosity and self-reported well-being, grey points indicate effect sizes of teams that subjectively concluded that the evidence is ambiguous, and brown/orange points indicate effect sizes of teams that subjectively concluded that there is good evidence against a positive relation between individual religiosity and self-reported well-being. The betas are ordered from smallest to largest.

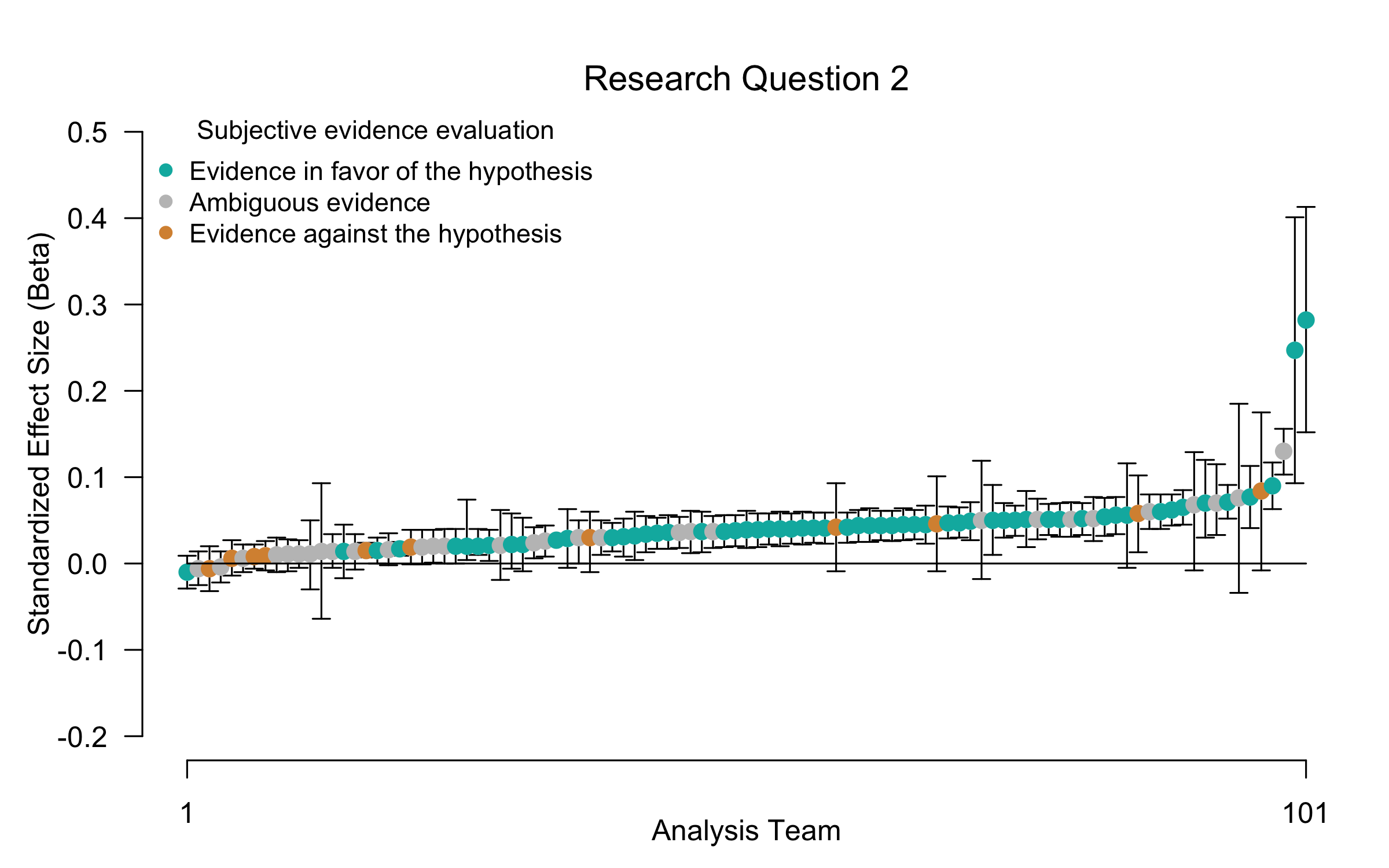

Research Question 2: “Does the relation between religiosity and self-reported well-being depend on perceived cultural norms of religion?”

Out of the 120 teams who completed stage 2, we were able to extract 101 beta coefficients for research question 2. As shown in Figure 2, the results for research question 2 are more variable than for research question 1; 97 out of 101 teams reported a positive beta value, and for 66 teams (65%) the confidence/credible interval excluded zero. The median reported effect size is 0.039 and the median absolute deviation is 0.022. Furthermore, 54% of the teams concluded that there is good evidence for an effect of cultural norms on the relation between religiosity and self-reported well-being. Again, most reported effect sizes were unique; only 3 out of the 101 reported combinations of effect size and confidence/credible interval appeared twice.

Figure 2: Beta coefficients for the effect of cultural norms on the relation between religiosity and self-reported well-being (research question 2) with 95% confidence or credible intervals. Green/blue points indicate effect sizes of teams that subjectively concluded that there is good evidence for the hypothesis that the relation between individual religiosity and self-reported well-being depends on the perceived cultural norms of religion, grey points indicate effect sizes of teams that subjectively concluded that the evidence is ambiguous, and brown/orange points indicate effect sizes of teams that subjectively concluded that there is good evidence against the hypothesis that the relation between individual religiosity and self-reported well-being depends on the perceived cultural norms of religion. The betas are ordered from smallest to largest.

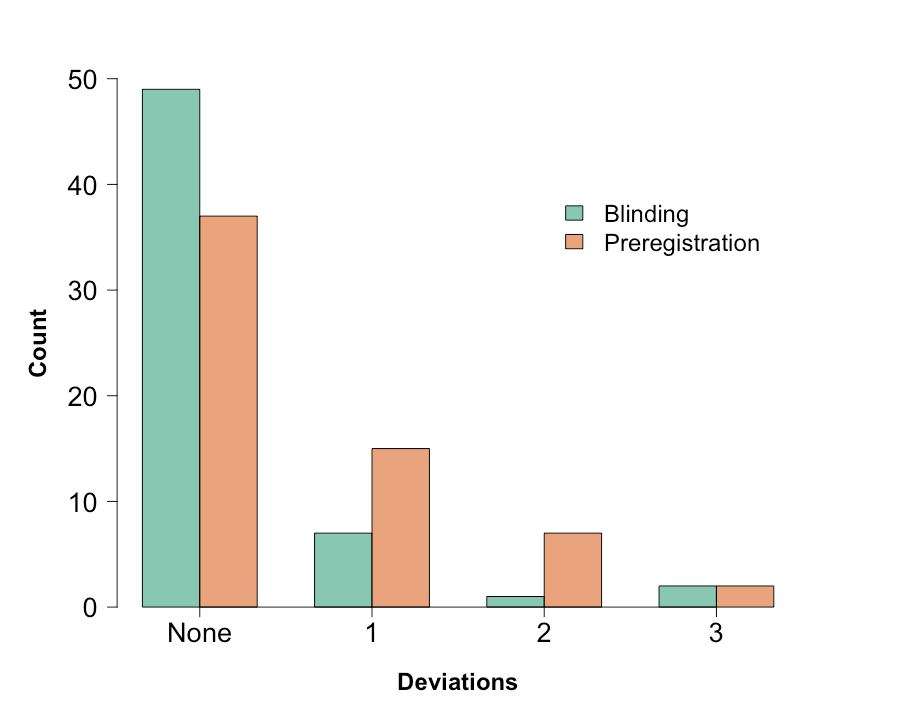

Analysis Blinding versus Preregistration

Figure 3 displays the reported deviations for teams who preregistered their analyses and teams who used analysis blinding. Our comparison of the two methods provides strong evidence (BF = 11.40) for the hypothesis that analysis blinding leads to fewer deviations from the analysis plan; when teams in the blinding condition did deviate, they did so on fewer aspects. Teams in the analysis blinding condition better anticipated their final analysis strategies, particularly with respect to exclusion criteria and operationalization of the independent variable. Of the 11 teams who deviated with respect to the exclusion criteria, 10 were in the preregistration condition. Similarly, of the 9 teams who deviated with respect to the independent variable, 8 were in the preregistration condition. The estimated probability that a team would deviate from their analysis plan was almost twice as high for teams who preregistered (i.e., 38%) as for teams who used analysis blinding (i.e., 20%). We conclude that analysis blinding does not mean less work, but researchers can still benefit from the method since they can plan more appropriate analyses from which they deviate less frequently.
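As a quick check of the "almost twice as high" statement, the snippet below works through the arithmetic using the two estimated probabilities quoted above. The odds ratio is included only as an additional way of expressing the same comparison; it is derived here from the rounded percentages and is not a figure reported in the paper.

```python
# Estimated probabilities of deviating from the analysis plan (from the text).
p_preregistration = 0.38
p_blinding = 0.20

# Ratio of probabilities: how many times more likely a deviation is
# under preregistration than under analysis blinding.
risk_ratio = p_preregistration / p_blinding


def odds(probability):
    """Convert a probability into odds."""
    return probability / (1 - probability)


# Ratio of odds, computed from the rounded percentages above.
odds_ratio = odds(p_preregistration) / odds(p_blinding)

print(f"risk ratio = {risk_ratio:.2f}")
print(f"odds ratio = {odds_ratio:.2f}")
```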

Figure 3: Reported deviations from planned analysis per condition. The green bars represent teams in the analysis blinding condition; the orange bars represent teams in the preregistration condition. More teams in the analysis blinding condition reported no deviations from their planned analysis, and when they did deviate, they did so on fewer aspects than teams in the preregistration condition.

References

Bastiaansen, J. A., Kunkels, Y. K., Blaauw, F. J., Boker, S. M., Ceulemans, E., Chen, M., Chow, S.-M., de Jonge, P., Emerencia, A. C., Epskamp, S., Fisher, A. J., Hamaker, E. L., Kuppens, P., Lutz, W., Meyer, M. J., Moulder, R., Oravecz, Z., Riese, H., Rubel, J., … Bringmann, L. F. (2020). Time to get personal? The impact of researchers’ choices on the selection of treatment targets using the experience sampling methodology. Journal of Psychosomatic Research, 137, 110211. https://doi.org/10.1016/j.jpsychores.2020.110211

Boehm, U., Annis, J., Frank, M. J., Hawkins, G. E., Heathcote, A., Kellen, D., Krypotos, A.-M., Lerche, V., Logan, G. D., Palmeri, T. J., van Ravenzwaaij, D., Servant, M., Singmann, H., Starns, J. J., Voss, A., Wiecki, T. V., Matzke, D., & Wagenmakers, E.-J. (2018). Estimating across-trial variability parameters of the Diffusion Decision Model: Expert advice and recommendations. Journal of Mathematical Psychology, 87, 46–75. https://doi.org/10.1016/j.jmp.2018.09.004

Botvinik-Nezer, R., Holzmeister, F., Camerer, C. F., Dreber, A., Huber, J., Johannesson, M., Kirchler, M., Iwanir, R., Mumford, J. A., Adcock, R. A., Avesani, P., Baczkowski, B. M., Bajracharya, A., Bakst, L., Ball, S., Barilari, M., Bault, N., Beaton, D., Beitner, J., … Schonberg, T. (2020). Variability in the analysis of a single neuroimaging dataset by many teams. Nature, 582, 84–88. https://doi.org/10.1038/s41586-020-2314-9

Silberzahn, R., & Uhlmann, E. L. (2015). Many hands make tight work. Nature, 526, 189–191.

van Dongen, N. N. N., van Doorn, J. B., Gronau, Q. F., van Ravenzwaaij, D., Hoekstra, R., Haucke, M. N., Lakens, D., Hennig, C., Morey, R. D., Homer, S., Gelman, A., Sprenger, J., & Wagenmakers, E.-J. (2019). Multiple perspectives on inference for two simple statistical scenarios. The American Statistician, 73, 328–339. https://doi.org/10.1080/00031305.2019.1565553

About The Authors

Suzanne Hoogeveen

Suzanne Hoogeveen is a PhD candidate at the Department of Social Psychology at the University of Amsterdam.

Alexandra Sarafoglou

Alexandra Sarafoglou is a PhD candidate at the Psychological Methods Group at the University of Amsterdam.

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.