In this sentence-by-sentence breakdown of the paper “Preregistration is Redundant, at Best”, I argue that preregistration is a pragmatic tool to combat biases that invalidate statistical inference. In a perfect world, strong theory sufficiently constrains the analysis process, and/or Bayesian robots can update beliefs based on fully reported data. In the real world, however, even astrophysicists require a firewall between the analyst and the data. Nevertheless, preregistration should not be glorified. Although I disagree with the title of the paper, I found myself agreeing with almost all of the authors’ main arguments.

Last weekend, Open Science Twitter was alive with discussions of the paper “Preregistration is redundant, at best” by Aba Szollosi, David Kellen, Danielle Navarro, Rich Shiffrin, Iris van Rooij, Trish Van Zandt, and Chris Donkin. Within a day, the paper was downloaded over 650 times. Almost immediately, Twitter turned out to be a poor medium for a nuanced discussion. Specifically, Open Science advocates (myself included) were initially irked by the paper’s provocative title, and some decided that the work could be dismissed out of hand. However, not only are the authors reputable scientists, they are also my collaborators (I have co-authored 9 papers with them: 6 with Rich, 2 with Trish, 1 with Chris, and 1 with David), and some are my friends. Below I will give the paper its due attention: I’ll go over every sentence (luckily the paper is short) and look for agreement more than disagreement. The content from the paper is in italics; a conclusion is at the end. We start with the title:

“Preregistration is redundant, at best”

This is provocative, but not as provocative as the historical statements by John Stuart Mill and by John Maynard Keynes. Mill and Keynes were concerned with a more general issue, namely whether theories are more believable when they predict data well (as opposed to being constructed post-hoc to account for those data). Neither Mill nor Keynes saw much benefit in prediction. Mill argued that predictions only served to impress “the ignorant vulgar”, whereas Keynes called the benefits “altogether imaginary”. Of course, people such as C. S. Peirce and Adriaan de Groot argued the exact opposite, with equal gusto.

After some reflection, I decided I don’t mind the provocativeness of the title. I do assume that it signals the willingness of the authors to engage in a robust discussion; as the Dutch saying goes “he who bounces the ball should expect it back”. On a more personal note, Rich Shiffrin relentlessly teases me about my “mistake” of promoting preregistration. I don’t mind this at all, but those who don’t know Rich personally may be put off by the title. For instance, both Chris Chambers and Brian Nosek (and many others as well) have spent a lot of effort to promote preregistration, and the title seems to imply that their work was less than useless.

Upon further reflection, it struck me that the provocativeness in the title may be more of a counterattack. When preregistration is hailed as the Second Coming of Christ, this implicitly degrades all other work. A famous professor (not Rich) once told me “You know, I never conducted any preregistration, and my work is pretty solid”. I understand the sentiment. In fact, whenever I read a paper with the title: “You Have Been Doing Research All Wrong: Here’s the Only Correct Way” my knee-jerk response is disbelief, if only because accepting the message implies I may be forced to revisit my old work and acquire new skills. Hence:

The First Agreement: Preregistration should not be glorified; work that is not preregistered can be excellent, and work that has been preregistered can be worthless.

“The key implication argued by proponents of preregistration is that it improves the diagnosticity of statistical tests [1]. In the strong version of this argument, preregistration does this by solving statistical problems, such as family-wise error rates. In the weak version, it nudges people to think more deeply about their theories, methods, and analyses. We argue against both: the diagnosticity of statistical tests depend entirely on how well statistical models map onto underlying theories, and so improving statistical techniques does little to improve theories when the mapping is weak. There is also little reason to expect that preregistration will spontaneously help researchers to develop better theories (and, hence, better methods and analyses).”

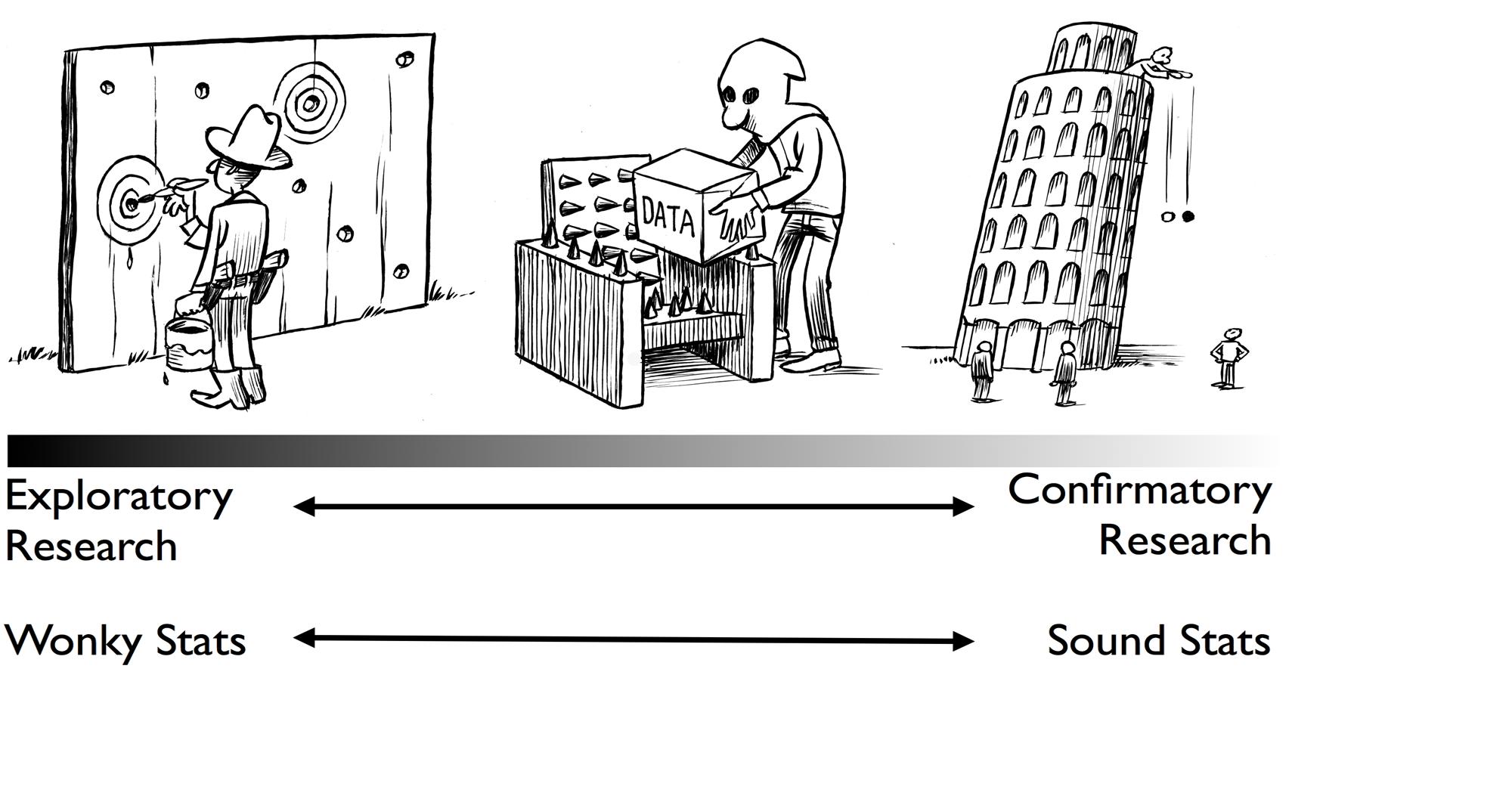

Many proponents of preregistration (e.g., Peirce, De Groot, Feynman [albeit implicitly], and Goldacre) have made a much stronger claim. In exploratory work, the data are used twice: once to discover a hypothesis, and then to test it. As is to be expected, the hypothesis generally comes out of this “test” looking pretty good. Such double use of the data is beguiling, and can be engaged in unwittingly; after all, research is conducted by people, not robots, and this means that objective assessment is under perpetual siege from hindsight bias, confirmation bias, and motivated reasoning. Preregistration inoculates the researcher against these biases. Without preregistration, and with double use of the data, it is not so much the case that diagnosticity suffers, but that the outcome of the statistical test is rendered utterly meaningless. The 2016 statement by the American Statistical Association says:

“P-values and related analyses should not be reported selectively. Conducting multiple analyses of the data and reporting only those with certain p-values (typically those passing a significance threshold) renders the reported p-values essentially uninterpretable. Cherry-picking promising findings, also known by such terms as data dredging, significance chasing, significance questing, selective inference, and “p-hacking,” leads to a spurious excess of statistically significant results in the published literature and should be vigorously avoided. One need not formally carry out multiple statistical tests for this problem to arise: Whenever a researcher chooses what to present based on statistical results, valid interpretation of those results is severely compromised if the reader is not informed of the choice and its basis. Researchers should disclose the number of hypotheses explored during the study, all data collection decisions, all statistical analyses conducted, and all p-values computed. Valid scientific conclusions based on p-values and related statistics cannot be drawn without at least knowing how many and which analyses were conducted, and how those analyses (including p-values) were selected for reporting.” (Wasserstein & Lazar, 2016, pp. 131-132).

De Groot summarizes the ramifications as follows:

“Prohibitions in statistical methodology really never pertain to the calculations themselves, but pertain only to drawing incorrect conclusions from the outcomes. It is no different here. One “is allowed” to apply statistical tests in exploratory research, just as long as one realizes that they do not have evidential impact. They have also lost their forceful nature, regardless of their outcomes.” (De Groot, 1956/2014)
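The cost of selective reporting can be made concrete with a small simulation. The sketch below is illustrative only and rests on simplifying assumptions that are not in the ASA statement or in De Groot: every study is a true null, each analysis is a two-sided z-test with known standard deviation, and the analyses within a study are independent. The function names (`false_positive_rate`, `expected_rate`) are hypothetical. Under these assumptions, a study that runs k analyses and reports the smallest p-value should cross the 5% threshold with probability 1 − 0.95^k.

```python
import random
from statistics import NormalDist


def p_value_two_sided(sample):
    """Two-sided z-test p-value for H0: mean = 0, assuming a known sd of 1."""
    n = len(sample)
    z = (sum(sample) / n) * n ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(n_analyses, n_studies=2000, n_obs=30, alpha=0.05, seed=1):
    """Share of null studies in which the best of n_analyses tests has p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # Assumption: each analysis uses an independent outcome with no true effect.
        p_values = [
            p_value_two_sided([rng.gauss(0, 1) for _ in range(n_obs)])
            for _ in range(n_analyses)
        ]
        # Selective reporting: only the most favourable analysis is considered.
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


def expected_rate(n_analyses, alpha=0.05):
    """Theoretical chance of at least one p < alpha among independent null tests."""
    return 1 - (1 - alpha) ** n_analyses


if __name__ == "__main__":
    for k in (1, 5, 10):
        print(k, round(false_positive_rate(k), 3), round(expected_rate(k), 3))
```

With real data the analyses are usually correlated, so the inflation will typically be smaller than in this independent case, but the direction of the problem is the same.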

Of course, methods other than preregistration can prevent double use of the data as well. One such method is blinded analyses (e.g., Dutilh et al., in press); another is the presence of very strong theory; a final antidote is the sort of self-restraint, fairness, and unbiased evaluation that is alien to any member of the human species. In the words of Francis Bacon:

“As for the detection of False Appearances or Idols, Idols are the deepest fallacies of the human mind. For they do not deceive in particulars, as the others do, by clouding and snaring the judgment; but by a corrupt and ill-ordered predisposition of mind, which as it were perverts and infects all the anticipations of the intellect. For the mind of man (dimmed and clouded as it is by the covering of the body), far from being a smooth, clear, and equal glass (wherein the beams of things reflect according to their true incidence), is rather like an enchanted glass, full of superstition and imposture.”

“Fixing science with statistics”

I view preregistration as one possible crutch (there are others) that fixes a small but essential part of the complete statistical inference process. Statistical inference, in turn, is a crutch that helps science progress efficiently. So preregistration is a second-order improvement, a crutch for a crutch, that prevents human bias from polluting the result. This harks back to The First Agreement mentioned above: preregistration should not be glorified. Where we may disagree is on whether preregistration is nevertheless useful. I believe it is, because it combats human biases that would otherwise contaminate the reported result. The immediate aim of preregistration is to help fix statistics, and this fix will indirectly benefit science, since science is facilitated by a fair statistical assessment.

“Scientific progress consists of iterative attempts to improve theories by careful attempts to find and correct errors in them [2,3].”

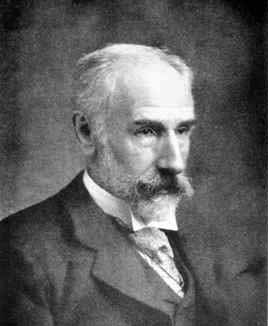

Not all scientific progress is of this nature. My favorite definition of science is by Karl Pearson, and it is very general (you can find it here). Most importantly, one part of scientific progress is to determine reliable empirical phenomena that may subsequently need to be explained or modeled.

“One useful technique for this process is to describe theories mathematically.”

The Second Agreement: Mathematical modeling is exceptionally useful. Given that both the authors and I are mathematical psychologists, this agreement is not surprising. Of course, mathematical modeling should not be glorified either. The mere fact that a mathematical model has been applied does not necessarily make the work excellent — for instance, the model can be poor, or the phenomena that the model accounts for may be spurious.

“In the social and behavioural sciences, such mathematical formalisms are typically realized by statistical models. Yet the extent to which such models are useful depends entirely on how accurate the match between the theory and model is (see Box 1). Because the match between theories and statistical models is generally poor in psychological science, scientific conclusions based on statistical inference are often weak [4,5].”

This immediately brings us to The Third Agreement: Popular statistical models are often poor reflections of psychological theory.

“Solving statistical problems with preregistration does not compensate for weak theory. Imagine making a random prediction regarding the outcome of an experiment. Should we observe the predicted outcome, we would not regard this “theory” as useful for making subsequent predictions. Why should we regard it as better if it was preregistered? Researchers can preregister bad theories, the predictions of which are no better than randomly picking an outcome, but which can nonetheless bear out in experiments.”

And here we have The Fourth Agreement: Preregistration does not compensate (fully) for weak theory.

“On the other hand, unlike statistical analyses, there is nothing inherently problematic about post-hoc scientific inference when theories are strong. The crucial difference is that strong scientific inference requires that post-hoc explanations are tested just as rigorously as ones generated before an experiment — for example, by a collection of post-hoc tests that evaluate the many regularities implied by a novel theory [6]. There is no reason not to take such post-hoc theories seriously just because they were thought of after or were not preregistered before an experiment was conducted.”

There are two ways to interpret this fragment. The first interpretation is that temporal order is irrelevant: a theory can predict findings that have already been collected; the key is that the data have not inspired the construction of the theory. So:

The Fifth Agreement: The data-model temporal order is irrelevant. [A theory can gain credence when it predicts data that have already been collected, just as long as these data were not used to develop and constrain the theory in the first place.]

There is a second interpretation of this fragment, and that one does strike me as problematic. In this interpretation, double use of the data is acceptable, and the claim is that it should not matter whether a model predicted data or accounted for these data post-hoc. By definition, a post-hoc explanation has not been “rigorously tested” – the explanation was provided by the data, and a fair test is no longer possible. Now there is one (and I believe only one) statistical approach in which the authors are formally correct, and that is subjective Bayesianism. Suppose that a subjective Bayesian has assigned prior probability across all models and hypotheses that could possibly be entertained. This Bayesian then updates this near-infinite hypothesis space when data come in, and interprets the resulting posterior distribution across that space. There is no need for multiplicity corrections, nor for preregistration. Unfortunately, this scenario is completely unrealistic, not only because people cannot assign prior probability across many hypotheses, but also because they will be unable to withstand the temptation to adjust their assessment of prior plausibility post-hoc, on the basis of the observed data. The power of hindsight endows models that happen to fit the data well with excess plausibility. But even if an unbiased evaluation were possible, the subjective Bayesian will also be confronted with data from other researchers, and these may be less than pristine – perhaps certain conditions or participants went AWOL, perhaps entire studies were demoted to pilot experiments, perhaps a range of models were tried and discarded, etc. How is the subjective Bayesian supposed to update beliefs when the input is only partially observed, and has been selected to fit a particular narrative? This is just hopeless, in my opinion. So:

The Sixth (Partial) Agreement: In theory, a subjective Bayesian robot, confronted with perfectly unbiased data, has no need for preregistration. [Reality, however, is very different.]
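The gap between the robot and reality can be illustrated with a toy calculation. The sketch below is a hypothetical setup, not an analysis from the paper or from this post: many small studies each observe 20 binary trials with a true success rate of 0.5, and a Bayesian with a uniform prior over a grid of rates updates either on all studies or only on the studies that "worked" (here, at least 13 successes), naively treating the reported subset as the complete record. The names `posterior_mean` and `simulate`, the threshold, and the grid are all assumptions made for illustration.

```python
import math
import random


def posterior_mean(successes, trials, grid_size=99):
    """Posterior mean of a binomial rate under a uniform prior on a grid."""
    grid = [(i + 1) / (grid_size + 1) for i in range(grid_size)]
    log_like = [
        successes * math.log(t) + (trials - successes) * math.log(1 - t)
        for t in grid
    ]
    top = max(log_like)  # subtract the maximum to avoid numerical underflow
    weights = [math.exp(v - top) for v in log_like]
    total = sum(weights)
    return sum(t * w for t, w in zip(grid, weights)) / total


def simulate(n_studies=200, n_trials=20, true_rate=0.5, threshold=13, seed=7):
    """Compare updating on all studies with updating on selectively reported ones."""
    rng = random.Random(seed)
    studies = [
        sum(rng.random() < true_rate for _ in range(n_trials))
        for _ in range(n_studies)
    ]
    # Selective reporting: only studies at or above the threshold are published.
    reported = [s for s in studies if s >= threshold]
    full = posterior_mean(sum(studies), n_trials * len(studies))
    selected = posterior_mean(sum(reported), n_trials * len(reported))
    return full, selected


if __name__ == "__main__":
    full, selected = simulate()
    print("posterior mean, all studies:     ", round(full, 3))
    print("posterior mean, reported studies:", round(selected, 3))
```

The updating rule is the same in both cases; only the input differs. A Bayesian who knew the selection rule could in principle model it, but that requires exactly the full disclosure that is usually missing.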

“Preregistration as a nudge?

Although preregistration does not require the improvement of theories, many argue that it at least nudges researchers to think more deeply about how to improve their theories. Though this might sometimes be so, there is no clear explanation for why we should expect it to happen.”

For me, this property of preregistration is only a possible side-effect. Inoculating researchers against inevitable biases is the main goal.

“One possible explanation is that researchers are motivated to improve their theories should they encounter problems when preregistering a study or when preregistered predictions are not observed. The problem with this line of argument is that any improvement depends upon a good understanding of how to improve a theory, and preregistration provides no such understanding. In other words, although preregistration may prompt more thinking, it is not clear how it provides a path towards better thinking. The danger is that, without this understanding, preregistration may be perceived as the solution.”

The Seventh Agreement: Preregistration alone does not provide theoretical understanding. This harks back to some of the earlier agreements.

“Instead, better understanding of theory development requires thoughtful discussion and debate about what constitutes good scientific inference. For example, what are the best examples of good theories both within and outside of the social and behavioural sciences [7]? What are characteristics of good theories [6]? How do we improve the link between psychological theory, measurement, methodology, and statistics? The answers to these questions are unlikely to come from nudging researchers with preregistration or some other method-oriented solution. They are likely to come from scientific problem-solving: generating, exchanging and criticizing possible answers, and improving them when needed.”

The Eighth Agreement: Theory development is crucial, and preregistration does not directly contribute to this. Of course, it is also crucial to build theories on a reliable empirical foundation, and here it is essential to combat human biases, for instance by preregistration.

“Preregistration is redundant, and potentially harmful”

It is true that preregistration does not contribute, by itself, to theory development. But that is not its stated goal. According to one interpretation of their work, the authors implicitly put forward the subjective Bayesian stance that post-hoc explanations are just as good a test as an assessment of predictions. This stance works in a Platonic world inhabited by robots and saints, but not in the world that we live in. I do not believe, therefore, that the authors’ sweeping claim is supported by their arguments.

“Preregistration can be hard, but it can also be easy. The hard work associated with good theorizing is independent of the act of preregistration. What matters is the strength of the theory upon which scientific inference rests. Preregistration does not require that the underlying theory be strong, nor does it discriminate between experiments based on strong or weak theory.”

But preregistration does inoculate researchers against unwittingly biasing their results in the direction of their pet hypotheses. This is the goal of preregistration; a compelling critique of preregistration needs to say something about that main goal, or how it could otherwise be achieved.

“Taking preregistration as a measure of scientific excellence can be harmful, because bad theories, methods, and analyses can also be preregistered.”

According to this line of reasoning, almost any reasonable scientific method can be harmful. For instance, “Taking counterbalancing as a measure of scientific excellence can be harmful, because bad experiments can also be counterbalanced,” or “Developing mathematical process models as a measure of scientific excellence can be harmful, because the models may be poor.”

“Requiring or rewarding the act of preregistration is not worthwhile when its presumed benefits can be achieved without it just as well.”

But can they? The main benefit is that researchers are protected from biasing the results. How exactly can this be accomplished “just as well” with other methods, and which methods are these? I suspect that the authors’ answer is “strong theory”. In response, I’d like to note that blinded analyses, one way to protect researchers against bias from double use of the data, are commonly used in astrophysics (see the references in Dutilh et al., in press, and here). I am not sure that psychological theories will ever rise to the level of those used in astrophysics, but even if they do, we will still need to come to grips with the fact that research is done by humans, not robots.
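To give a rough idea of what a firewall between analyst and data can look like, here is a minimal sketch of one simple form of blinding: shuffling the condition labels before the analyst sees the data. This is an assumption chosen for illustration and not necessarily the procedure described in Dutilh et al. or used in astrophysics; the helper names `blind_labels` and `mean_difference` and the label values are hypothetical.

```python
import random


def blind_labels(labels, seed):
    """Return a shuffled copy of the condition labels.

    Assumption: the seed is held by someone other than the analyst, so the
    analyst cannot reconstruct the true assignment while building the pipeline.
    """
    rng = random.Random(seed)
    shuffled = list(labels)
    rng.shuffle(shuffled)
    return shuffled


def mean_difference(scores, labels, group_a="treatment", group_b="control"):
    """Difference in mean score between two labelled groups."""
    a = [s for s, lab in zip(scores, labels) if lab == group_a]
    b = [s for s, lab in zip(scores, labels) if lab == group_b]
    return sum(a) / len(a) - sum(b) / len(b)


if __name__ == "__main__":
    scores = [1.0, 2.0, 3.0, 4.0]
    true_labels = ["treatment", "treatment", "control", "control"]

    # Stage 1: the analyst tunes the pipeline on blinded labels only.
    blinded = blind_labels(true_labels, seed=123)
    print("blinded estimate:", mean_difference(scores, blinded))

    # Stage 2: the frozen pipeline is run once on the true labels.
    print("unblinded estimate:", mean_difference(scores, true_labels))
```

Because the blinded labels carry no information about the real effect, choices about outlier rules or transformations made in the first stage cannot be steered toward a preferred result.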

“As a field, we have to grapple with the difficult challenge of improving our ability to test theories, and so should be wary of any ‘catch-all’ solutions like preregistration.”

The Ninth Agreement: We should be wary of catch-all solutions. [Of course, as researchers we should be wary of just about anything. And there are certainly good alternatives to preregistration, such as multiverse analyses, multi-analyst projects, and blinded analyses.]

“Box 1: Scientific vs statistical inference

Because statistical inference is so often used to inform scientific inference, it is easy to conflate the two. However, they have fundamentally different aims.”

Harold Jeffreys disagrees, and I agree with Harold Jeffreys. As the (implicit) argument against preregistration is based on subjective Bayesianism, I am not sure how the authors reconcile that with the present dichotomy. But this is a minor point.

“Scientific inference is the process of developing better theories.”

This is not, I believe, what “inference” means. Online I find the definition “a conclusion reached on the basis of evidence and reasoning”. The act of developing better theories is just one part of the scientific learning cycle, but by no means the complete package. One of my recent papers on this is here. I do agree that, in the process of developing better theories, preregistration may not have a direct benefit (see The Eighth Agreement above).

“One aspect of theory development is to test whether the implications of a theory are observed in experiments. We can use statistics to help decide whether such implications are realized. Used in this way, statistical inference is the reliance on (strong, and false) mathematical assumptions to simplify a problem to permit scientific inference.”

Testing whether the implications of a theory are observed in experiments is not, in my opinion, part of the process of model development – it is part of the process of model testing.

“Some problems arising from statistical assumptions, such as family-wise error rates, only exist when hypotheses and statistical comparisons are effectively chosen at random. When statistical inference is used in scientific argument, statistical methods are just tools to test implications derived from theory. Therefore, such statistical problems become irrelevant because theories, not random selection, dictate what comparisons are necessary [8].”

It would certainly be nice if psychology were in a position where its theories were strong enough to dictate, uniquely, the comparisons that are necessary. But this is not the current state of the field, nor is it the current state of astrophysics. So:

The Tenth Agreement: Constraints from theories and mathematical models can help reduce experimenter bias. [But in the real world we are nowhere near the scenario where we can stop worrying about bias.]

“Problems in scientific inference, on the other hand, are important when the overall goal is theory development. Issues that prevent criticism of theory, such as poor operationalization, imprecise measurement, and weak connection between theory and statistical methods, need our attention instead of problems with statistical inference.”

Responsible scientific practice is not a zero-sum game. One of the main factors that currently shields theories and models from criticism is biased statistical inference. I will end this extensive review with a challenge to the authors: can they identify a theory in psychology that uniquely determines the comparisons that are necessary, leaving no room for alternative analyses? This is really a rhetorical challenge, because I do not believe it can be met. If astrophysicists desire a firewall between the data and the analyst, the idea that we as psychologists can simply use the constraints provided by our “strong” theories is hubris.

Conclusion

I agree with the authors on ten key points:

- Preregistration should not be glorified.

- Mathematical modeling can be exceptionally useful.

- Popular statistical models are often poor reflections of psychological theory.

- Preregistration does not compensate for weak theory.

- The data-model temporal order is irrelevant.

- A subjective Bayesian robot, confronted with perfectly unbiased data, has no need for preregistration.

- Preregistration alone does not provide theoretical understanding.

- Theory development is crucial, and preregistration does not directly contribute to this.

- We should be wary of catch-all solutions.

- Constraints from theories and mathematical models can help reduce experimenter bias.

However, these statements do not entail that “preregistration is redundant, at best”. Yes, it is wonderful whenever we find ourselves in the privileged position to have theory strong enough to outline a clear mapping to the statistical test. This mapping will reduce (but never eliminate!) the influence of human bias. However, the large majority of psychology does not have theory this strong. In the absence of strong theory, how can we ensure we are not fooling ourselves and our peers? How can we ensure that the empirical input that serves as the foundation for theory development is reliable, and we are not building a modeling palace on quicksand? Preregistration is not a silver bullet, but it does help researchers establish more effectively whether or not their empirical findings are sound.

The authors have a standing invitation to respond on this blog.

References

De Groot, A. D. (1956/2014). The meaning of “significance” for different types of research. Translated and annotated by Eric-Jan Wagenmakers, Denny Borsboom, Josine Verhagen, Rogier Kievit, Marjan Bakker, Angelique Cramer, Dora Matzke, Don Mellenbergh, and Han L. J. van der Maas. Acta Psychologica, 148, 188-194.

Dutilh, G., Sarafoglou, A., & Wagenmakers, E.-J. (in press). Flexible yet fair: Blinding analyses in experimental psychology. Synthese.

Wagenmakers, E.-J., Dutilh, G., & Sarafoglou, A. (2018). The creativity-verification cycle in psychological science: New methods to combat old idols. Perspectives on Psychological Science, 13, 418-427. Open Access: https://journals.sagepub.com/doi/10.1177/1745691618771357

Wasserstein, R. L., & Lazar, N. A. (2016). The ASA’s statement on p-values: Context, process, and purpose. The American Statistician, 70, 129-133.

About The Author

Eric-Jan Wagenmakers

Eric-Jan (EJ) Wagenmakers is professor at the Psychological Methods Group at the University of Amsterdam.